Generative Agents: Building a Virtual Smallville with AI Citizens

Introduction: The Virtual World of AI Agents

In April 2023, a groundbreaking paper from Stanford University researchers led by Joon Sung Park introduced a new paradigm in artificial intelligence: generative agents. These computational entities don’t just respond to prompts—they simulate believable human-like behaviors in an open environment, complete with memories, goals, relationships, and the ability to adapt.

The researchers created a simulated town called Smallville (the inspiration for later open-source projects such as “AI Town”): a digital sandbox where 25 AI agents live out their daily lives, interact with each other, form relationships, and respond to emerging events. What makes this simulation remarkable is that each agent begins with just a brief backstory (e.g., “John Lin is a friendly pharmacist who lives with his family…”), yet develops into a complex entity with consistent behaviors and evolving social connections.

Unlike characters in traditional simulation games that follow predetermined scripts, these generative agents demonstrate emergent behaviors: they wake up, plan their days, interact through natural language, and adapt to unexpected events—all orchestrated by a large language model (LLM) working behind the scenes.

The Smallville Experiment: A Living Laboratory

The Digital Environment

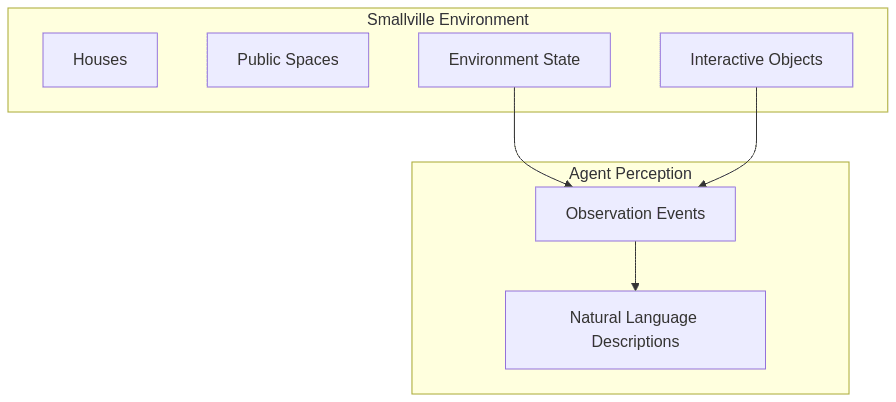

Smallville is designed as a typical small town with:

- Common Spaces: Houses, a cafe, a bar, a park, a grocery store, and a school/dormitory

- Interactive Objects: Functional items like kitchen stoves, desks, beds, and bathrooms that agents can observe and manipulate

- Sprite Avatars: Simple 2D representations that move around the map

- Natural-Language Infrastructure: The entire environment is encoded in natural language descriptions, allowing for dynamic updates through LLM prompts
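To make the natural-language encoding concrete, here is a minimal sketch of how a world state could be turned into sentences that fit in an LLM prompt. The nested-dictionary layout, the `describe` and `set_state` helpers, and the sentence template are all assumptions made for illustration, not the researchers' actual data format.

```python
from typing import Dict, Iterator, Tuple, Union

# Hypothetical layout: nested dicts are areas, string leaves are object states.
World = Dict[str, Union[str, "World"]]

town: World = {
    "Isabella's house": {
        "kitchen": {"stove": "off"},
        "bedroom": {"bed": "made"},
    },
    "park": {"bench": "empty"},
}


def describe(world: World, path: Tuple[str, ...] = ()) -> Iterator[str]:
    """Yield one sentence per object, walking areas from outermost to innermost."""
    for name, child in world.items():
        if isinstance(child, dict):
            yield from describe(child, path + (name,))
        else:
            # Innermost area first: "kitchen in Isabella's house"
            location = " in ".join(reversed(path))
            yield f"The {name} in the {location} is {child}."


def set_state(world: World, path: Tuple[str, ...], state: str) -> None:
    """Overwrite the state of the object at `path` (area, ..., object)."""
    node = world
    for key in path[:-1]:
        node = node[key]
    node[path[-1]] = state


# A user edit such as "<Isabella's stove> is burning" becomes a state change.
set_state(town, ("Isabella's house", "kitchen", "stove"), "burning")
for sentence in describe(town):
    print(sentence)
```

Because the state lives in plain data and is only rendered to text on demand, an update to one object changes exactly one sentence the next time an agent perceives that area.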

User Interaction Modes

The simulation allows for multiple forms of interaction:

- Dialogue: Users can chat with agents in natural language as an external persona (e.g., as a reporter asking questions)

- Environmental Manipulation: Users can alter the environment directly (e.g., “<Isabella’s stove> is burning”)

- Agent Guidance: Users can act as an agent’s “inner voice” to influence intentions or thoughts

A Social Experiment in Action

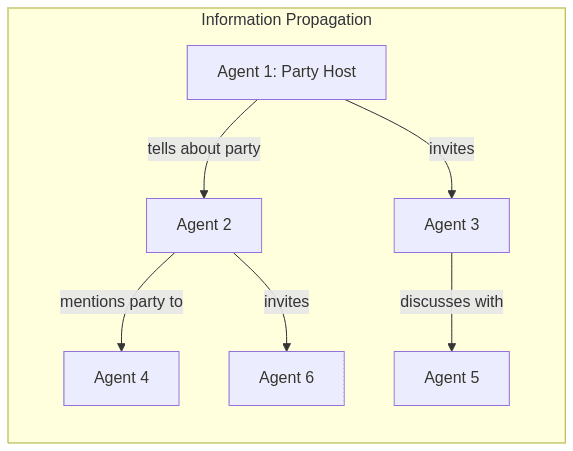

One of the most compelling demonstrations involved a Valentine’s Day party. When researchers suggested to a single agent that it host a Valentine’s celebration, the news spread organically through conversations between agents. Without explicit programming, invitations were extended, and multiple agents coordinated to attend the gathering—showcasing the system’s ability to simulate realistic social dynamics.

The Architecture: How Generative Agents Work

The key innovation in this research isn’t just using LLMs to generate text responses—it’s the specialized architecture designed to maintain coherent agent behavior over extended periods. This architecture addresses a fundamental limitation of large language models: their limited context window, which prevents them from “remembering” all previous experiences.

The researchers developed a five-stage cognitive loop that drives each agent:

- Perceive: Observe the environment and events

- Retrieve: Access relevant memories

- Plan: Create and update schedules

- Act: Generate responses or take actions

- Reflect: Periodically synthesize experiences into higher-level insights
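The five stages above can be read as a simple control loop. The sketch below shows only the order of operations; every stage is a trivial stand-in for what would be an LLM call in a real system, and the `LoopAgent` class, its method names, and the `reflect_every` cadence are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LoopAgent:
    """Control-flow skeleton only; each stage is a toy stand-in for an LLM call."""
    name: str
    memories: List[str] = field(default_factory=list)
    plan: str = "follow the usual routine"
    reflect_every: int = 3  # hypothetical cadence, chosen for the demo
    steps: int = 0

    def perceive(self, event: str) -> str:
        self.memories.append(event)  # every observation lands in memory
        return event

    def retrieve(self, observation: str) -> List[str]:
        # Stand-in for scored retrieval: earlier memories sharing a word.
        words = set(observation.lower().split())
        return [m for m in self.memories[:-1] if words & set(m.lower().split())]

    def update_plan(self, observation: str) -> str:
        if "burning" in observation:  # stand-in for LLM-driven replanning
            self.plan = "deal with the emergency"
        return self.plan

    def act(self, plan: str, recalled: List[str]) -> str:
        return f"{self.name} decides to {plan} (recalling {len(recalled)} memories)"

    def reflect(self) -> None:
        self.memories.append(f"{self.name} reflected on recent events")

    def step(self, event: str) -> str:
        observation = self.perceive(event)
        recalled = self.retrieve(observation)
        plan = self.update_plan(observation)
        action = self.act(plan, recalled)
        self.steps += 1
        if self.steps % self.reflect_every == 0:  # reflection is periodic
            self.reflect()
        return action


agent = LoopAgent("Isabella")
print(agent.step("the cafe is quiet"))
print(agent.step("the stove is burning"))
```

The point of the skeleton is the ordering: retrieval happens before planning, and reflection runs on its own schedule rather than at every step.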

Let’s examine each component in detail:

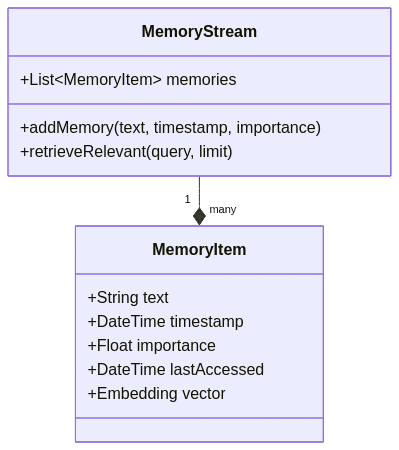

1. Memory Stream: The Foundation of Persistent Identity

“A database to maintain a comprehensive record of an agent’s experience in natural language.”

Unlike traditional chatbots that might only recall the immediate conversation, each generative agent maintains a memory stream: a chronological record of all observations, interactions, and reflections. For example:

"John Lin sees Sam Moore walk by the pharmacy at 9:05am."

"John Lin helps a customer find cold medicine at 9:20am."

"John Lin thinks that business has been slower than usual this week."

This comprehensive record allows agents to reference past experiences when making decisions. However, simply storing memories isn’t enough: the system needs a way to retrieve only the most relevant ones.

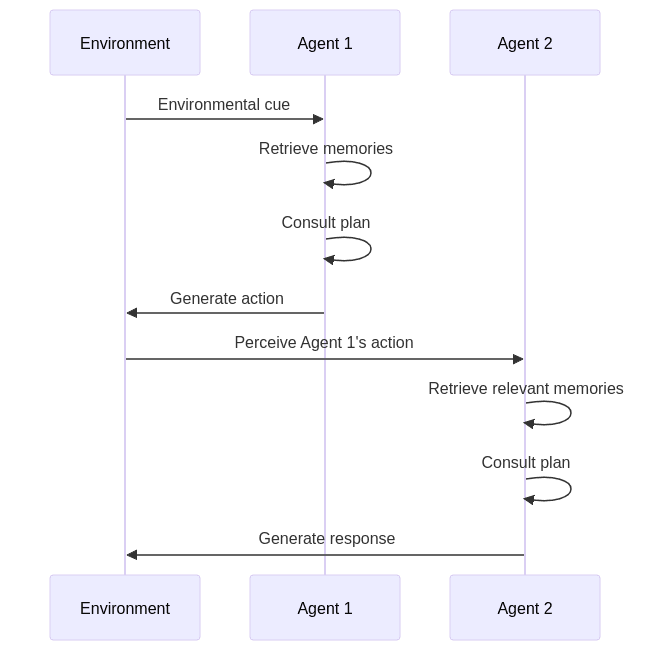
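As a rough illustration of the memory stream described above, the following sketch models it as an append-only, time-ordered log. The `MemoryRecord` and `MemoryStream` names, the `kind` labels, and the demo date are assumptions; the timestamp on the third entry is invented because the example memory does not state one.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class MemoryRecord:
    created: datetime
    kind: str  # "observation" or "reflection"; these labels are an assumption
    text: str


class MemoryStream:
    """Append-only, chronological log of natural-language memories."""

    def __init__(self) -> None:
        self._records: List[MemoryRecord] = []

    def add(self, created: datetime, kind: str, text: str) -> MemoryRecord:
        if self._records and created < self._records[-1].created:
            raise ValueError("memories must be appended in chronological order")
        record = MemoryRecord(created, kind, text)
        self._records.append(record)
        return record

    def since(self, moment: datetime) -> List[MemoryRecord]:
        """Return every record created at or after `moment`."""
        return [r for r in self._records if r.created >= moment]

    def __len__(self) -> int:
        return len(self._records)


day = datetime(2023, 2, 13)  # arbitrary date for the demo
stream = MemoryStream()
stream.add(day.replace(hour=9, minute=5), "observation",
           "John Lin sees Sam Moore walk by the pharmacy.")
stream.add(day.replace(hour=9, minute=20), "observation",
           "John Lin helps a customer find cold medicine.")
stream.add(day.replace(hour=9, minute=30), "reflection",  # time invented for the demo
           "John Lin thinks that business has been slower than usual this week.")

for record in stream.since(day.replace(hour=9, minute=10)):
    print(record.kind, "|", record.text)
```

Keeping observations and reflections in the same log means a single retrieval step can surface both raw events and the conclusions drawn from them.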

2. Memory Retrieval: Finding What Matters

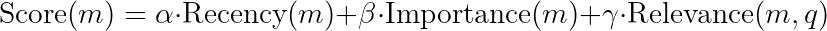

Since an agent can’t process its entire memory stream at once (due to LLM context limitations), the system employs a sophisticated retrieval mechanism that scores memories based on three factors:

- Recency: Recent memories receive higher priority

- Importance: Each memory is assigned an importance score by the LLM (e.g., “family tragedy” might score 9/10, while “bought milk” might score 2/10)

- Relevance: Semantic similarity between current situation and stored memories (using embedding distance)

The final retrieval score determines which memories get included in the context provided to the LLM for decision-making. This selective memory access ensures that agents respond with the most pertinent information from their history.

The memory scoring formula can be represented as a weighted sum:

$$\text{Score}(m) = \alpha_{\text{recency}} \cdot \text{Recency}(m) + \alpha_{\text{importance}} \cdot \text{Importance}(m) + \alpha_{\text{relevance}} \cdot \text{Relevance}(m)$$

Where:

- $\text{Recency}(m) = e^{-\lambda \, \Delta t}$ (exponential decay based on time elapsed, with $\Delta t$ the time since the memory was last accessed)

- $\text{Importance}(m) \in [0,1]$ (normalized importance score)

- $\text{Relevance}(m)$ (cosine similarity between memory and query embeddings)

- $\alpha_{\text{recency}}, \alpha_{\text{importance}}, \alpha_{\text{relevance}}$ are weighting parameters
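A small calculation shows how the three factors (recency, importance, relevance) trade off in a weighted sum. The weights 0.3, 0.3, 0.4 and the decay rate 0.005 per hour are the illustrative values used in this article's implementation sketch; the two example memories and their input numbers are made up for the demonstration.

```python
import math
from typing import Tuple


def recency(hours_since_access: float, decay: float = 0.005) -> float:
    """Exponential decay: 1.0 for a just-accessed memory, falling toward 0."""
    return math.exp(-decay * hours_since_access)


def retrieval_score(
    hours_since_access: float,
    importance: float,  # already normalized to [0, 1]
    relevance: float,   # cosine similarity with the query
    weights: Tuple[float, float, float] = (0.3, 0.3, 0.4),
) -> float:
    """Weighted sum of recency, importance, and relevance."""
    w_rec, w_imp, w_rel = weights
    return (
        w_rec * recency(hours_since_access)
        + w_imp * importance
        + w_rel * relevance
    )


# An important but older, less relevant memory (hypothetical numbers) ...
tragedy = retrieval_score(hours_since_access=48, importance=0.9, relevance=0.2)
# ... versus a trivial, fresh, slightly more relevant one.
milk = retrieval_score(hours_since_access=1, importance=0.2, relevance=0.3)

print(f"family tragedy: {tragedy:.3f}")
print(f"bought milk:    {milk:.3f}")
```

With these inputs the older but important memory still outranks the fresh trivial one, because the slow decay costs it little recency while the importance gap is large. Changing the weights shifts that balance, which is why they are best treated as tunable parameters.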

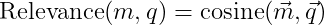

3. Reflection: Building Higher-Level Understanding

“Agents, when equipped only with raw observational data, struggle to generalize or make inferences.”

Periodic reflection allows agents to synthesize raw experiences into meaningful insights. The system prompts the LLM to review recent memories and generate abstract conclusions like:

“Klaus Mueller is particularly dedicated to his gentrification research.”

“I enjoy organizing social gatherings and bringing people together.”

These reflections are stored back into the memory stream, enabling agents to develop stable personalities, opinions, and relationship perceptions over time. This capability is crucial for coherent long-term behavior—an agent that has reflected on enjoying social activities is more likely to initiate gatherings consistently.
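One plausible way to decide when "periodic" reflection should run is to accumulate the importance of incoming memories and reflect once the total crosses a threshold, so that eventful periods trigger reflection sooner than quiet ones. The sketch below assumes that design; the `ReflectionTrigger` class and the threshold of 15 are hypothetical values chosen for the demo.

```python
from typing import List


class ReflectionTrigger:
    """Signal a reflection once enough importance has accumulated."""

    def __init__(self, threshold: float = 15.0) -> None:
        self.threshold = threshold  # arbitrary demo value on a 1-10 scale
        self.accumulated = 0.0

    def observe(self, importance: float) -> bool:
        """Record one memory's importance; return True when it is time to reflect."""
        self.accumulated += importance
        if self.accumulated >= self.threshold:
            self.accumulated = 0.0  # start counting again after reflecting
            return True
        return False


trigger = ReflectionTrigger()
scores: List[float] = [2, 2, 9, 3]  # hypothetical importance ratings
fired = [trigger.observe(score) for score in scores]
print(fired)
```

A fixed schedule (for example, every N memories) would also work; the accumulated-importance version simply ties the cadence to how much has happened.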

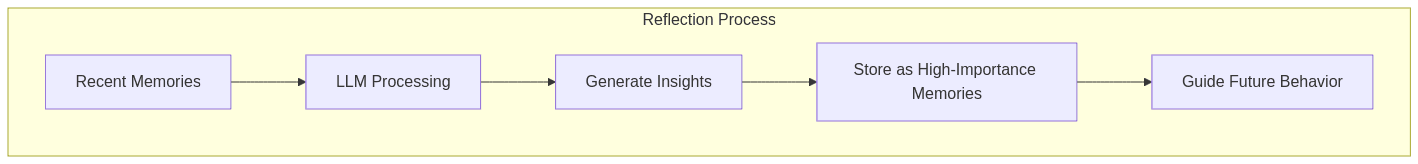

4. Planning: Maintaining Temporal Coherence

“Plans help agents create daily schedules and maintain coherence over long horizons.”

While LLMs excel at generating plausible moment-to-moment responses, they struggle with long-term consistency. The planning module addresses this by explicitly prompting the LLM to create structured day plans:

1) 7:00–7:30 AM: Wake up, do morning routine

2) 7:30–8:00 AM: Eat breakfast

3) 8:00–9:00 AM: Go to The Willow Market and Pharmacy to open the shop

...

These plans serve as anchors for coherent behavior. At each time step, agents can break high-level plans into specific actions while remaining flexible enough to handle unexpected events (e.g., replanning if the stove catches fire). This planning mechanism keeps goals consistent: an agent who agreed to attend a 5 PM Valentine’s party won’t spontaneously schedule dinner elsewhere at that time.
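The consistency guarantee described above can be sketched as a schedule that rejects overlapping commitments. This is a minimal illustration, not the researchers' planner: the `PlanEntry` type, the helper names, and the party's end time are assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


def hm(clock: str) -> int:
    """Convert 'HH:MM' into minutes since midnight."""
    hours, minutes = clock.split(":")
    return int(hours) * 60 + int(minutes)


@dataclass(frozen=True)
class PlanEntry:
    start: int  # minutes since midnight, inclusive
    end: int    # minutes since midnight, exclusive
    activity: str


def overlaps(a: PlanEntry, b: PlanEntry) -> bool:
    return a.start < b.end and b.start < a.end


def current_activity(plan: List[PlanEntry], now: int) -> Optional[PlanEntry]:
    """Return the entry covering `now`, or None if the slot is free."""
    for entry in plan:
        if entry.start <= now < entry.end:
            return entry
    return None


def try_schedule(plan: List[PlanEntry], entry: PlanEntry) -> bool:
    """Add `entry` only if it does not clash with an existing commitment."""
    if any(overlaps(entry, existing) for existing in plan):
        return False
    plan.append(entry)
    plan.sort(key=lambda e: e.start)
    return True


plan = [
    PlanEntry(hm("07:00"), hm("07:30"), "Wake up, do morning routine"),
    PlanEntry(hm("07:30"), hm("08:00"), "Eat breakfast"),
    PlanEntry(hm("08:00"), hm("09:00"), "Open the shop"),
]
# The 19:00 end time is an assumption; the text only says the party is at 5 PM.
party_ok = try_schedule(plan, PlanEntry(hm("17:00"), hm("19:00"), "Attend Valentine's party"))
dinner_ok = try_schedule(plan, PlanEntry(hm("17:30"), hm("18:30"), "Dinner elsewhere"))
print(party_ok, dinner_ok)
```

In a full system the conflict would be handed back to the LLM for replanning rather than simply rejected, but the check itself can stay this simple.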

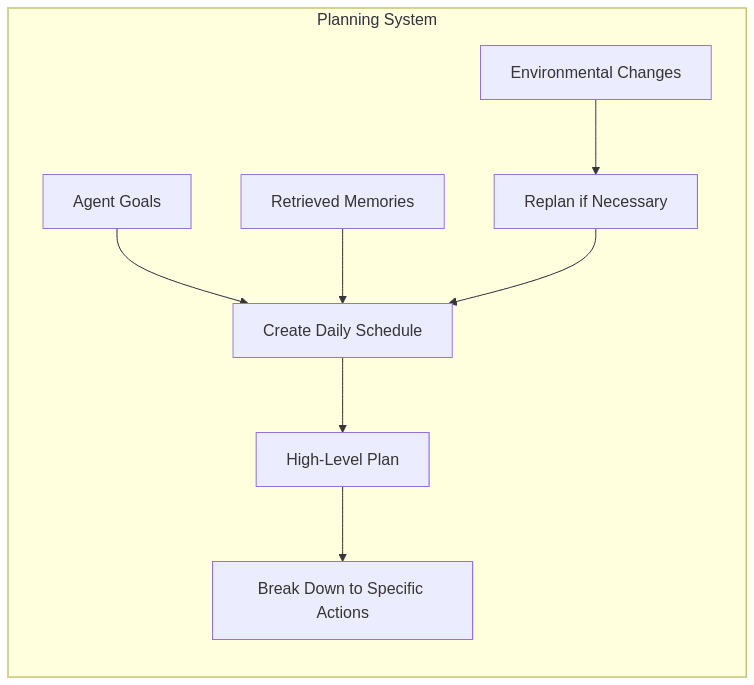

5. Action Generation: Responding to the World

When an agent needs to act or respond, the system:

- Perceives the current environment

- Retrieves relevant memories using the scoring system

- Consults its current plan

- Generates an appropriate action or dialogue response

For multi-agent conversations, one agent’s statement becomes an event that the other agent perceives, prompting memory retrieval and a contextually appropriate response. This creates naturalistic dialogue that reflects each agent’s unique knowledge, goals, and personality.
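The steps above amount to assembling one prompt from three ingredients: what the agent perceives, what it remembers, and what it planned. The sketch below shows one way to do that, including how a speaker's utterance becomes the listener's observation. The template wording and both function names are hypothetical, and `memories` is assumed to arrive sorted best-first from the retrieval step.

```python
from typing import List


def as_observation(speaker: str, utterance: str) -> str:
    """Turn one agent's utterance into an event the listener can perceive."""
    return f'{speaker} says: "{utterance}"'


def build_action_prompt(
    agent_name: str,
    observation: str,
    memories: List[str],
    current_plan: str,
    max_memories: int = 3,
) -> str:
    """Combine perception, retrieved memories, and the plan into one prompt."""
    memory_lines = "\n".join(f"- {m}" for m in memories[:max_memories])
    return (
        f"You are {agent_name}.\n"
        f"Current plan: {current_plan}\n"
        f"Relevant memories:\n{memory_lines}\n"
        f"Observation: {observation}\n"
        f"What does {agent_name} do or say next?"
    )


heard = as_observation(
    "Isabella Rodriguez", "Would you like to come to my Valentine's Day party?"
)
prompt = build_action_prompt(
    agent_name="John Lin",
    observation=heard,
    memories=[
        "John Lin is a friendly pharmacist.",
        "Isabella Rodriguez is planning a Valentine's Day party.",
        "John Lin is married to Mei Lin, a professor.",
        "John Lin helps a customer find cold medicine.",
    ],
    current_plan="Work at the pharmacy until closing",
)
print(prompt)
```

Capping the number of memories is what keeps the prompt inside the context window; the LLM's reply would then be stored as a new observation for both participants.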

Emergent Social Dynamics: What Researchers Discovered

After implementing the 25-agent Smallville scenario, the researchers observed several compelling emergent social behaviors:

1. Information Spread

News about Sam Moore’s mayoral candidacy spread organically through the community. Initially, only Sam knew about his candidacy, but after two simulated days, 8 of the 25 agents (32%) had learned about it through natural conversations. This demonstrated how information diffuses through a social network without centralized control.

2. Relationship Formation

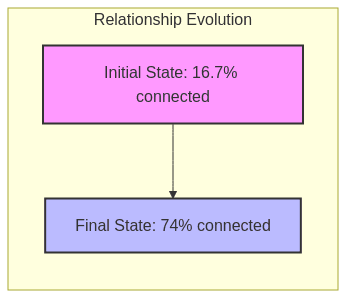

Agents who had never previously interacted began to form connections based on proximity, shared interests, or mutual acquaintances. The “network density” (the fraction of agent pairs that recognized each other) increased from 0.167 to 0.74 after just two days of simulation. Some agents developed stronger affinities based on compatible personalities or shared experiences.
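To see what those density figures mean in absolute terms, the definition above (the fraction of agent pairs that know each other) can be computed directly. With 25 agents there are 300 possible pairs, so 0.167 corresponds to roughly 50 connected pairs and 0.74 to 222. The function below is a small sketch; the edge lists are synthetic and exist only to reproduce those counts.

```python
from itertools import combinations
from typing import Iterable, Tuple


def network_density(num_agents: int, edges: Iterable[Tuple[int, int]]) -> float:
    """Fraction of possible agent pairs that are connected (undirected graph)."""
    # Treat (a, b) and (b, a) as the same pair and ignore self-links.
    unique_pairs = {frozenset(edge) for edge in edges if edge[0] != edge[1]}
    possible_pairs = num_agents * (num_agents - 1) // 2
    return len(unique_pairs) / possible_pairs


all_pairs = list(combinations(range(25), 2))  # 300 possible pairs
before = network_density(25, all_pairs[:50])   # synthetic: 50 connected pairs
after = network_density(25, all_pairs[:222])   # synthetic: 222 connected pairs

print(f"possible pairs: {len(all_pairs)}")
print(f"density before: {before:.3f}")
print(f"density after:  {after:.2f}")
```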

3. Social Coordination

The Valentine’s Day party example highlighted complex multi-agent coordination. Isabella Rodriguez decided to host a party, invited friends, who then informed others. Eventually, five guests attended, while others missed it due to conflicting schedules or simply forgetting (failing at the memory retrieval step). This nuanced outcome—not everyone attending despite being invited—mirrors realistic social dynamics.

Importance of the Architecture Components

To validate their approach, the researchers conducted “interviews” with agents and found that memory, reflection, and planning each significantly contributed to more believable outputs:

- Agents with full architecture gave responses that referenced relevant past experiences, demonstrated consistent personality, and maintained coherent schedules

- Ablating any component led to noticeably degraded performance—agents without reflection struggled to form stable opinions, while those without planning often seemed disorganized

Challenges and Limitations

Despite the impressive results, the current generative agent architecture faces several challenges:

Memory and Retrieval Limitations

- The memory stream grows continuously, creating challenges for efficient storage and retrieval

- Poor retrieval can cause agents to ignore crucial experiences or fixate on irrelevant information

- Embedding-based retrieval sometimes misses contextually important but semantically dissimilar memories

Reflection Quality Issues

- Without effective reflection, agents remain reactive without developing stable generalizations

- Reflections can become contradictory over time if the environment changes dramatically

- The quality of reflections depends heavily on the underlying LLM’s reasoning capabilities

Planning Constraints

- LLMs still struggle with complex multi-step reasoning, leading to occasional scheduling inconsistencies

- Long-horizon tasks remain challenging, especially when agents must adhere to environmental constraints

- Agents sometimes struggle to adapt plans appropriately when faced with unexpected obstacles

Behavioral Authenticity

The researchers noted that LLM instruction tuning sometimes produces overly formal, polite, or cooperative dialogue—less realistic than actual human interaction. Agents rarely engage in strong disagreements or conflicts, limiting the simulation of complex social dynamics that involve tension or competition.

Implementation: A Code Glimpse

Below is a conceptual Python sketch of how one might implement the memory and retrieval system for a generative agent. The `embed_text` and `llm` functions are offline placeholders (assumptions, not a real library API) to be replaced with calls to an actual embedding model and LLM; the weights and decay rate are illustrative values.

```python
import re
import time
import zlib
from typing import List, Optional, Tuple

import numpy as np


# --- Placeholders (assumptions, not a real library API) ---------------------
# Swap these two functions for calls to your own embedding model and LLM.

def embed_text(text: str, dim: int = 256) -> np.ndarray:
    """Toy embedding: a hashed bag-of-words vector, for demonstration only"""
    vec = np.zeros(dim)
    for token in re.findall(r"\w+", text.lower()):
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    return vec


def llm(prompt: str) -> str:
    """Stand-in for an LLM call so the sketch runs offline"""
    if "scale of 1-10" in prompt:
        return "5"
    return "(placeholder reflection)"


def cosine_similarity(v1: np.ndarray, v2: np.ndarray) -> float:
    """Calculate cosine similarity between two vectors (0.0 if either is all zeros)"""
    denom = np.linalg.norm(v1) * np.linalg.norm(v2)
    return float(np.dot(v1, v2) / denom) if denom else 0.0


class MemoryItem:
    def __init__(self, text: str, timestamp: float, importance: float = 1.0):
        self.text = text
        self.timestamp = timestamp
        self.importance = importance
        self.last_access = timestamp
        # Cache the embedding to avoid recomputing
        self.embedding: Optional[np.ndarray] = None

    def get_embedding(self) -> np.ndarray:
        """Lazily compute the embedding the first time it is needed"""
        if self.embedding is None:
            self.embedding = embed_text(self.text)
        return self.embedding


class Agent:
    def __init__(self, name: str, initial_memories: List[str]):
        self.name = name
        self.memory_stream: List[MemoryItem] = []
        # Weights for the memory retrieval scoring function
        self.recency_weight = 0.3
        self.importance_weight = 0.3
        self.relevance_weight = 0.4
        # Populate initial memories with current time
        for mem_text in initial_memories:
            self.remember(mem_text)

    def get_importance(self, text: str) -> float:
        """Ask the LLM to rate the importance of a memory, normalized to [0, 1]"""
        prompt = (
            f"Rate the significance of this memory for {self.name} "
            f"on a scale of 1-10. Reply with a single integer.\nMemory: {text}"
        )
        response = llm(prompt)
        # Take the first integer in the reply so answers like "7/10" still parse
        match = re.search(r"\d+", response)
        if match is None:
            return 0.1  # Default low importance if parsing fails
        return min(max(int(match.group()), 1), 10) / 10.0

    def remember(self, new_observation: str) -> None:
        """Add a new memory to the memory stream"""
        now = time.time()
        importance = self.get_importance(new_observation)
        self.memory_stream.append(MemoryItem(new_observation, now, importance))

    def retrieve_relevant_memories(self, query: str, limit: int = 5) -> List[MemoryItem]:
        """Retrieve the memories that score highest for the current query"""
        # 1) compute embedding of query
        query_emb = embed_text(query)

        # 2) score each memory
        memory_scores: List[Tuple[float, MemoryItem]] = []
        now = time.time()
        for mem in self.memory_stream:
            # recency factor (exponential decay since last access)
            hours_elapsed = (now - mem.last_access) / 3600.0
            recency_score = np.exp(-0.005 * hours_elapsed)  # λ = 0.005 per hour

            # importance score (already normalized to [0, 1])
            imp_score = mem.importance

            # semantic relevance (cosine similarity)
            relevance_score = cosine_similarity(query_emb, mem.get_embedding())

            # Weighted sum of factors
            final_score = (
                self.recency_weight * recency_score
                + self.importance_weight * imp_score
                + self.relevance_weight * relevance_score
            )
            memory_scores.append((final_score, mem))

        # sort by final_score, highest first
        memory_scores.sort(key=lambda pair: pair[0], reverse=True)

        # pick the top N memories so the result fits the context window
        top_memories = [mem for _, mem in memory_scores[:limit]]

        # Update last_access time for retrieved memories
        for mem in top_memories:
            mem.last_access = now
        return top_memories

    def reflect(self, recent_count: int = 10) -> None:
        """Form higher-level insights from recent memories and add them as new memories"""
        if len(self.memory_stream) < recent_count:
            return  # Not enough memories to reflect on

        # Get the most recent n memories
        recent_memories = sorted(
            self.memory_stream, key=lambda mem: mem.timestamp, reverse=True
        )[:recent_count]

        # Combine the texts
        memory_context = "\n".join(f"- {mem.text}" for mem in recent_memories)

        # Ask LLM to generate reflections
        prompt = (
            f"Based on these recent memories of {self.name}:\n"
            f"{memory_context}\n"
            f"Generate 1-3 high-level insights or reflections that {self.name} might form.\n"
            "Each reflection should be a single sentence that captures a pattern, "
            "conclusion, or realization."
        )
        reflections = llm(prompt).strip().split("\n")

        # Add reflections as new high-importance memories
        now = time.time()
        for reflection in reflections:
            reflection = reflection.strip()
            if reflection:  # Skip empty lines
                ref_text = f"{self.name} reflected: {reflection}"
                # Reflections are typically more important
                self.memory_stream.append(MemoryItem(ref_text, now, importance=0.8))

    def generate_plan(self, day_start: float, day_end: float) -> List[str]:
        """
        Generate a daily plan based on agent's memories and goals.
        Returns a list of planned activities with time slots.
        """
        # Implementation omitted for brevity
        raise NotImplementedError


if __name__ == "__main__":
    # Example usage
    agent = Agent(
        name="John Lin",
        initial_memories=[
            "John Lin is a friendly pharmacist.",
            "John Lin is married to Mei Lin, a professor.",
            "John Lin lives in Smallville.",
        ],
    )

    # Suppose John sees Sam Moore at the park
    agent.remember("John Lin saw Sam Moore at the park and greeted him.")

    top_mem = agent.retrieve_relevant_memories("Sam Moore")
    print("Relevant memories about Sam Moore:")
    for m in top_mem:
        print("-", m.text)

    # Periodically trigger reflection
    if len(agent.memory_stream) % 10 == 0:
        agent.reflect()
```

Applications and Future Directions

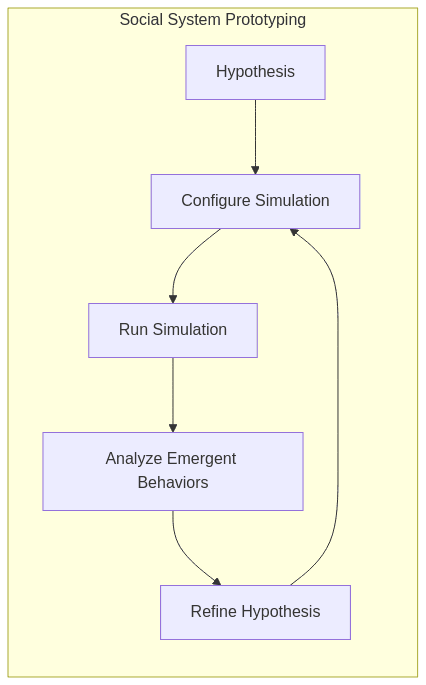

Simulation for Social System Prototyping

Generative agents offer a powerful tool for prototyping social interactions and systems without requiring human participants for every iteration. Researchers and designers could:

- Test how changes to environment parameters (store opening hours, spatial layouts) affect social dynamics

- Experiment with different agent personality distributions to observe emerging group behaviors

- Prototype game mechanics or social features before expensive human playtesting

Enhanced Agent Cognition

Future research could address current limitations through:

- Hierarchical memory systems that better organize and compress long-term knowledge

- Meta-cognitive frameworks that allow agents to reason about their own reasoning processes

- Improved planning algorithms that handle complex constraints and contingencies

- Chain-of-thought prompting or similar techniques to enhance logical consistency

Grounding and Reality Constraints

To reduce hallucinations and enforce consistency:

- Knowledge graph integration could constrain agent beliefs to verified facts

- Physical simulation layers could enforce realistic constraints on agent movements and actions

- Fact-checking mechanisms could verify generated content against established information

Ethical Considerations and Responsible Development

As generative agents become more sophisticated, several ethical considerations emerge:

- Preventing parasocial attachment: Clear disclosure of artificial nature to prevent unhealthy emotional dependence

- Comprehensive audit logs: Monitoring agent behaviors and interactions to prevent harmful emergent patterns

- Human oversight: Maintaining human review of agent development, especially in sensitive applications

- Representation and bias: Ensuring diverse agent populations that don’t reinforce stereotypes

Conclusion: The Future of Digital Simulacra

The Generative Agents research by Park and colleagues represents a significant advancement in creating believable artificial entities with persistent identities, memories, and social awareness. Unlike previous approaches that relied on scripted behaviors or simple response patterns, this architecture enables emergent complexity through the interplay of memory, reflection, planning, and action.

While current implementations face limitations in reasoning ability, memory management, and behavioral authenticity, the core architectural principles provide a robust foundation for future development. As language models become more capable and efficient, we can expect generative agents to demonstrate increasingly sophisticated cognition, deeper interpersonal dynamics, and more authentic human-like behaviors.

The implications extend far beyond games or entertainment. These technologies could revolutionize fields ranging from education (personalized learning companions) to healthcare (patient simulation for medical training) to urban planning (modeling community responses to proposed changes).

At their core, generative agents represent a step toward creating artificial entities that don’t just respond to immediate prompts but maintain coherent identities over time—entities that remember, learn, plan, and adapt in ways that increasingly mirror human cognitive processes. While still far from true artificial general intelligence, they offer a fascinating glimpse into how language models can be structured to create the illusion of persistent consciousness and social awareness.

As this technology evolves, it will continue to challenge our understanding of intelligence, agency, and the boundaries between simulated and authentic interaction—questions that will become increasingly relevant in our AI-augmented future.