Introduction

The brutal physics of running large language models (LLMs) on commodity hardware isn’t a compute problem. It’s a memory problem. Even after aggressive 4-bit quantization, a 7B-parameter model demands roughly 3.5 GiB for its weights alone, a trivial amount for system RAM but a tight squeeze for many laptop and entry-level cloud GPUs once the KV cache and runtime overhead are added. The trade-off has always been clear: run on a GPU if you can, or suffer the glacial pace of the CPU.

Intel’s Neural Speed library aims to rewrite that calculus. It fuses novel INT4 kernels with intelligent memory management, promising speed-ups of “up to 40× faster than llama.cpp”. A bold claim. In this article, I’ll dissect what Neural Speed does under the hood, walk through how to use it, and put it to the test. Let’s see if the hype holds up.

Why 4-bit CPU inference still matters

Performance is a binary function of VRAM: if the model fits, you have it; if it doesn’t, you have nothing.

Commodity GPUs offer a sliver of high-speed VRAM, typically 8–16 GiB. Even a “small” 7B model pushes that limit, especially once the KV cache balloons during long-context generation. CPUs, by contrast, sit on vast, cheap acreage of DRAM. They cost nothing extra and are the default iron in every cloud VM. With 4-bit quantization schemes, such as the Q4 variants shipped in GGUF files, now delivering near-lossless quality for most tasks, the final frontier was execution speed. This is the gap Intel Neural Speed aims to close.
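To put rough numbers on that memory argument, here is a back-of-the-envelope sketch. The defaults are assumptions, not measurements: 32 layers, 8 KV heads and a head dimension of 128 follow the public Mistral-7B configuration, the cache is assumed to be FP16, and 4.5 bits per weight corresponds to a Q4_0-style block (32 four-bit weights plus one FP16 scale). None of these figures come from Neural Speed itself.

```python
GIB = 1024 ** 3


def weight_gib(n_params: float, bits_per_weight: float) -> float:
    """Memory needed for the weights alone, in GiB."""
    return n_params * bits_per_weight / 8 / GIB


def kv_cache_gib(n_tokens: int, n_layers: int = 32, n_kv_heads: int = 8,
                 head_dim: int = 128, bytes_per_value: int = 2) -> float:
    """KV-cache size in GiB (defaults are assumed Mistral-7B-like values)."""
    # The factor 2 covers one key and one value vector per layer and KV head.
    per_token = 2 * n_layers * n_kv_heads * head_dim * bytes_per_value
    return n_tokens * per_token / GIB


if __name__ == "__main__":
    print(f"7B weights at 4.5 bits/weight: {weight_gib(7e9, 4.5):.2f} GiB")
    for context in (4096, 32768):
        print(f"KV cache at {context} tokens: {kv_cache_gib(context):.2f} GiB")
```

Under these assumptions the cache adds 0.5 GiB at 4K tokens and 4 GiB at 32K, on top of roughly 3.7 GiB of weights. Models without grouped-query attention carry more KV heads and grow proportionally faster.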

What Neural Speed adds

| Optimisation | Why it matters |
|---|---|
| INT4 kernels for x86 (AVX-512 VNNI, AMX) | Weights stay in 4-bit form until they reach the registers, then feed the CPU’s low-precision integer units. Avoids the costly de-quant/re-quant dance on the critical path. |
| Fused attention & matmul | Slashes memory round-trips. Keeps the data hot in the cache where it belongs. |
| Prefetch & KV‑cache pre‑allocation | Kills allocator overhead before it starts. Crucial for the stop-and-go rhythm of interactive chat. |
| GGUF + HF quant support | Pragmatism in a nutshell. Bring your own quantized GGUF weights, or let it build them from a Hugging Face model on the fly. |

The library is packaged within intel-extension-for-transformers, preserving the familiar Hugging Face Transformers API.

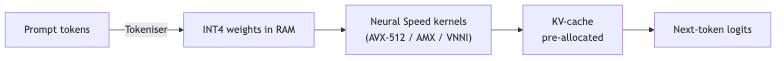

Under the Hood: How Neural Speed Accelerates LLMs

The speedup isn’t magic; it’s a result of getting closer to the metal and fighting the memory bottleneck at every turn.

Custom INT4 Kernels & CPU Features

Neural Speed’s core advantage comes from custom-built kernels that exploit advanced CPU instructions: AVX-512 VNNI, found in recent Xeon and some Core processors, and AMX, found in 4th-generation Xeon and later. These instructions accelerate low-precision integer dot products in hardware (both operate on INT8 operands), so the kernels can unpack 4-bit weights to INT8 in registers and multiply them directly. That bypasses the standard, slower workflow of dequantizing weights to a higher-precision format (like FP16) before every multiplication. If your CPU lacks these instruction sets, the library falls back to less optimized paths, and the performance gains diminish significantly.
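To make “4-bit weights” less abstract, here is a minimal pure-Python sketch of symmetric block quantization: one scale per block, two signed 4-bit values packed into each byte. It is a generic illustration. The function names are hypothetical, and the real Neural Speed kernels and storage layout are vectorized and almost certainly differ in detail.

```python
def quantize_block(values):
    """Quantize one block of floats to signed 4-bit ints; returns (scale, packed bytes)."""
    if len(values) % 2:
        raise ValueError("block length must be even to pack two values per byte")
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 7 if max_abs else 1.0
    # Clamp to the signed 4-bit range [-8, 7].
    q = [max(-8, min(7, round(v / scale))) for v in values]
    # Low nibble holds the even-indexed value, high nibble the odd-indexed one.
    packed = bytes((lo & 0x0F) | ((hi & 0x0F) << 4) for lo, hi in zip(q[0::2], q[1::2]))
    return scale, packed


def unpack_int4(packed):
    """Expand packed nibbles back to signed integers in [-8, 7]."""
    out = []
    for byte in packed:
        for nibble in (byte & 0x0F, byte >> 4):
            out.append(nibble - 16 if nibble >= 8 else nibble)
    return out


def dequantize_block(scale, packed):
    """Recover approximate floats from a quantized block."""
    return [scale * q for q in unpack_int4(packed)]
```

The unpack step is the interesting part: an optimized kernel performs it in vector registers and hands the small integers straight to the integer dot-product units, multiplying by the scale once per block instead of once per weight.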

Memory Layout & Fused Operations

To combat memory latency, weights are rearranged into blocked, cache-friendly layouts. This maximizes memory bandwidth and reduces cache misses, which are often the true performance killers in memory-bound operations. By fusing key operations like attention and matrix multiplication, Neural Speed minimizes the number of times data has to be read from and written back to main memory, keeping the compute pipeline fed and the caches hot.

KV-Cache & Prefetching

For generative tasks, the ever-growing key-value (KV) cache is a major source of overhead. Neural Speed pre-allocates this cache for the maximum context window upfront, eliminating the performance penalty of dynamic memory allocation with every generated token. It also uses aggressive prefetching to pull upcoming data into the CPU’s caches before it’s explicitly requested, further hiding memory latency.
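The pre-allocation idea is easy to sketch. The class below is a hypothetical illustration of the technique, not Neural Speed’s implementation: it reserves the full buffer once, then each new token only writes into an existing slot, so the hot loop never grows or reallocates memory.

```python
from array import array


class PreallocatedKVCache:
    """Fixed-capacity KV cache: all memory is reserved up front."""

    def __init__(self, max_tokens: int, width: int):
        self.max_tokens = max_tokens
        self.width = width  # values per token, e.g. n_kv_heads * head_dim
        self.length = 0     # number of tokens stored so far
        self.keys = array("f", [0.0]) * (max_tokens * width)
        self.values = array("f", [0.0]) * (max_tokens * width)

    def append(self, key, value):
        """Write one token's key/value vectors into the next free slot."""
        if self.length >= self.max_tokens:
            raise OverflowError("context window exhausted")
        if len(key) != self.width or len(value) != self.width:
            raise ValueError("key/value must have exactly `width` elements")
        start = self.length * self.width
        # Same-size slice assignment overwrites in place; the buffer never grows.
        self.keys[start:start + self.width] = array("f", key)
        self.values[start:start + self.width] = array("f", value)
        self.length += 1

    def view(self):
        """Zero-copy views over the filled part of the cache."""
        end = self.length * self.width
        return memoryview(self.keys)[:end], memoryview(self.values)[:end]
```

The trade-off is that you pay for the maximum context window in memory whether or not a conversation ever uses it, which is usually a fair price on a machine with plenty of DRAM.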

Supported CPUs & Models

For peak performance, a CPU with AVX-512 VNNI or AMX support is essential. The library is compatible with most popular causal LLM architectures available on Hugging Face (Llama, Mistral, Falcon, etc.) and offers the flexibility of working with either on-the-fly quantization or pre-quantized GGUF files.

Tuning for Best Performance

To wring out every last drop of performance, you have to get your hands dirty. Set the OMP_NUM_THREADS environment variable to the number of physical cores; hyper-threading offers little more than noise here. On multi-socket systems, use numactl to pin your process to a single NUMA node to prevent costly cross-socket chatter. And for god’s sake, disable any power-saving governors that might be throttling your turbo boost. This isn’t about being gentle. For interactive workloads, a batch size of 1 is the sweet spot; for anything larger, the massive parallelism of GPUs will almost always win.
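If you would rather not count cores by hand, the thread setting can be scripted. This is a Linux-only sketch with hypothetical helper names: it counts unique (physical id, core id) pairs in /proc/cpuinfo and falls back to the logical CPU count when those fields are missing, which happens on some VMs.

```python
import os


def count_physical_cores(cpuinfo_text: str) -> int:
    """Count unique (physical id, core id) pairs in /proc/cpuinfo-style text."""
    cores = set()
    physical_id = core_id = None
    # The trailing empty string flushes the last processor block.
    for line in cpuinfo_text.splitlines() + [""]:
        if not line.strip():
            if core_id is not None:
                cores.add((physical_id, core_id))
            physical_id = core_id = None
            continue
        key, _, value = line.partition(":")
        key = key.strip()
        if key == "physical id":
            physical_id = value.strip()
        elif key == "core id":
            core_id = value.strip()
    return len(cores)


def set_omp_threads(cpuinfo_path: str = "/proc/cpuinfo") -> int:
    """Set OMP_NUM_THREADS to the physical core count (best effort)."""
    try:
        with open(cpuinfo_path) as fh:
            n = count_physical_cores(fh.read())
    except OSError:
        n = 0
    # Fall back to the logical CPU count if the core fields were unavailable.
    n = n or os.cpu_count() or 1
    os.environ["OMP_NUM_THREADS"] = str(n)
    return n
```

Call `set_omp_threads()` before importing the inference library; OpenMP runtimes typically read the variable once at start-up, so setting it afterwards may have no effect.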

Deployment & Troubleshooting

This library shines on inference servers, edge devices, or any cloud VM where GPUs are either unavailable or prohibitively expensive. If you experience poor throughput, the first suspects are missing CPU features (lscpu is your friend), incorrect thread settings, or thermal throttling. The initial on-the-fly quantization can be slow; cache the resulting checkpoint to make subsequent runs instantaneous.
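The CPU-feature check is worth automating before you blame anything else. The sketch below parses the `flags` line that Linux exposes in /proc/cpuinfo (the same data lscpu prints). The flag names are the ones the Linux kernel reports; the helper itself and the choice of which flags to look for are my own, not something the library prescribes.

```python
WANTED = ("avx2", "avx_vnni", "avx512f", "avx512_vnni",
          "amx_tile", "amx_int8", "amx_bf16")


def cpu_features(cpuinfo_text: str, wanted=WANTED) -> dict:
    """Map each wanted flag to True/False based on /proc/cpuinfo-style text."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags = set(value.split())
            break  # every logical CPU normally reports the same flags
    return {name: name in flags for name in wanted}


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as fh:
            report = cpu_features(fh.read())
    except OSError:
        report = {}  # not Linux, or /proc is unavailable
    for name, present in report.items():
        print(f"{name:12s} {'yes' if present else 'no'}")
```

If neither `avx512_vnni` nor the `amx_*` flags show up, expect the fallback paths and correspondingly smaller gains.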

Comparison to Other Libraries

Unlike the more general-purpose llama.cpp or GGML frameworks, Neural Speed is unapologetically optimized for modern Intel silicon and its integration with the Hugging Face ecosystem. It achieves its speed by leaving generic compatibility behind and exploiting hardware-specific features that other libraries can’t assume are present.

Installation

```bash
pip install neural-speed intel-extension-for-transformers accelerate datasets
```

Loading a 4‑bit model

```python
from intel_extension_for_transformers.transformers import AutoModelForCausalLM
from transformers import AutoTokenizer

model_name = "Intel/neural-chat-7b-v3-3"  # 7B Mistral derivative

tokenizer = AutoTokenizer.from_pretrained(model_name, use_fast=True)
tokenizer.pad_token = tokenizer.eos_token

model = AutoModelForCausalLM.from_pretrained(
    model_name,
    load_in_4bit=True,  # Neural Speed quantises + serialises
)
```

Be patient on the first run. The library is serializing a local INT4 checkpoint, which took about 12 minutes on my 16 vCPU instance. Think of it as a one-time tax for future speed; subsequent launches that reuse the cache are nearly instant.

Loading a pre‑quantised GGUF

If you’re already sitting on a .gguf file from the llama.cpp ecosystem, you can skip the quantization tax entirely:

```python
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    model_file="Q4_0.gguf",  # Instantly memory‑mapped
)
```

Benchmark methodology

Talk is cheap. Let’s see the numbers.

- Hardware: Google Colab “L4” instance (Intel® Xeon® CPU @ 2.20 GHz, 16 threads). No cherry-picked silicon here.

- Model: Intel/neural-chat-7b-v3-3, an instruction-tuned Mistral-7B derivative.

- Prompt: 300-token generation from a simple starter prompt.

- Runs: 10 warmed-up iterations; reporting the average throughput.

- Batch size: 1, simulating a real-world interactive chat scenario.

The evaluation script is in the appendix.

Results

| Framework | Format | Avg tokens/s | Relative speed |
|---|---|---|---|
| Neural Speed | INT4 (Neural Compressor) | 32.3 | 3.3× |
| Neural Speed | GGUF | 44.1 | 4.5× |
| llama.cpp (v0.2.88) | GGUF Q4_0 | 9.8 | 1× |

The 40× marketing claim remains elusive, but a 4.5× jump over a heavily optimized llama.cpp build is more than an incremental gain; it’s a phase change. It elevates CPU inference from a painful compromise to a viable strategy. On machines with more physical cores, this gap may well widen further.
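The relative-speed column is plain division against the llama.cpp baseline, and the same numbers translate directly into wall-clock time for the 300-token generation used in the benchmark. A small sketch, with the throughput figures copied from the results table and the dictionary labels being my own shorthand:

```python
RESULTS = {
    "Neural Speed (INT4)": 32.3,
    "Neural Speed (GGUF)": 44.1,
    "llama.cpp (GGUF Q4_0)": 9.8,
}


def relative_speed(results: dict, baseline: str) -> dict:
    """Throughput of each entry divided by the baseline's throughput."""
    base = results[baseline]
    return {name: tps / base for name, tps in results.items()}


def seconds_for(n_tokens: int, tokens_per_second: float) -> float:
    """Wall-clock time to generate n_tokens at a steady throughput."""
    return n_tokens / tokens_per_second


if __name__ == "__main__":
    speedups = relative_speed(RESULTS, "llama.cpp (GGUF Q4_0)")
    for name, tps in RESULTS.items():
        print(f"{name:24s} {speedups[name]:.1f}x  "
              f"{seconds_for(300, tps):5.1f} s per 300 tokens")
```

Seen that way, the gap is the difference between waiting about half a minute for a 300-token answer and waiting about seven seconds.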

When to choose CPU over GPU

This isn’t about dogma; it’s about physics and economics.

- Single‑request latency on a modern CPU with Neural Speed is now competitive with mid-range laptop GPUs.

- Memory-bound tasks, especially with contexts exceeding 4K tokens, heavily favor the vast capacity of DRAM over the scarce real estate of VRAM.

- Batch sizes of 2 or more are the clear dividing line. The massive SIMD parallelism and memory bandwidth of GPUs mean they pull away decisively once you start processing requests in parallel.

In practice, I now reach for Neural Speed for any interactive, single-user workload on commodity VMs or edge hardware where a discrete GPU is not an option.

Tips & pitfalls

A few lessons learned from the trenches:

- Ensure your system has the absolute latest Intel microcode. AVX-512 support on consumer chips is notoriously gated by firmware; an out-of-date BIOS can leave performance on the table.

- Set `OMP_NUM_THREADS` to match your physical core count. Hyper-Threads typically add more scheduling overhead than tangible benefit.

- Hunt down and disable any CPU frequency governors that might be preventing your chip from hitting its full turbo boost clocks.

- The first on-the-fly quantization is painfully slow. Always keep the cached output located under `~/.cache/neural_speed` for reuse.

Conclusion

Neural Speed delivers a genuine, reproducible, and significant speed-up for 4-bit LLM inference on ordinary CPUs. Crucially, it does so without forcing you to abandon the familiar Hugging Face API. While I didn’t get close to the headline “40×” figure, a 4.5× boost over a highly tuned llama.cpp is transformative for local-first applications and cost-sensitive cloud deployments. It makes interactive LLMs on CPU-only machines practical.

Next time your GPU chokes on memory, don’t despair. Park the model in RAM and let Neural Speed stretch its legs. You might be surprised how fast “slow” silicon can fly.

Appendix

```python
# benchmarking script (abridged)
import time

from transformers import AutoTokenizer
from intel_extension_for_transformers.transformers import AutoModelForCausalLM

model_name = "Intel/neural-chat-7b-v3-3"


def bench(model_file=None, load_in_4bit=False, runs=10):
    tok = AutoTokenizer.from_pretrained(model_name, use_fast=True)
    tok.pad_token = tok.eos_token
    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        model_file=model_file,
        load_in_4bit=load_in_4bit,
    )

    prompt = "Tell me about gravity."
    inp = tok(prompt, return_tensors="pt")
    prompt_len = inp["input_ids"].shape[-1]

    # Warm-up run, excluded from the timing.
    model.generate(**inp, max_new_tokens=300)

    tokens, seconds = 0, 0.0
    for _ in range(runs):
        start = time.perf_counter()
        out = model.generate(**inp, max_new_tokens=300)
        seconds += time.perf_counter() - start
        # Count only newly generated tokens; this assumes the output
        # includes the prompt, as with Hugging Face generate().
        tokens += out.shape[-1] - prompt_len
    return tokens / seconds


if __name__ == "__main__":
    print("NeuralSpeed INT4 :", bench(load_in_4bit=True))
    print("NeuralSpeed GGUF :", bench(model_file="Q4_0.gguf"))
```

Further reading:

- Intel Extension for Transformers – https://github.com/intel/intel-extension-for-transformers

- Neural Speed source & docs – https://github.com/intel/neural-speed

- llama.cpp – https://github.com/ggerganov/llama.cpp