The Artisanal Trap

We find ourselves in an era of digital artisanship, meticulously hand-crafting agentic workflows. Like digital watchmakers, we wire together components, tweak prompts, and define rigid chains of command. This process is brittle, time-consuming, and profoundly limited by our own architectural imagination. We design these intricate little chains, these Rube Goldberg machines of logic, hoping they approximate the required intelligence, all while feeling the gnawing suspicion that a far more optimal configuration exists just beyond our cognitive grasp.

The recent work on Self-Evolving Agentic Workflows (SEW) offers a different path, one grounded in a more fundamental principle: evolution. It posits that the language model itself, given the right framework, can transcend its role as a mere component and become the architect of its own operational structure. It suggests a move away from static construction and towards dynamic cultivation, letting the system discover its own optimal form through trial, error, and selection.

Mechanism of Adaptation

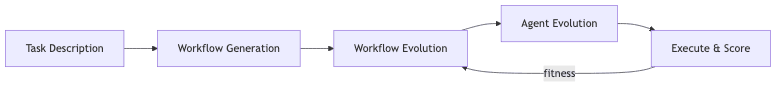

The core idea is to let an LLM search the design space of both the workflow topology and the agent-specific instructions. The process is a dual-layered evolutionary loop, where the system refines itself at both the macro and micro levels.

The cycle is initiated with a simple task description and a template. From this seed, the LLM generates an initial workflow. Then, the evolutionary pressures begin:

- Workflow Evolution: The macro-structure is mutated. Using a set of mutation prompts, the system explores different topologies: adding or removing agents, re-wiring connections, and altering the flow of information.

- Agent Evolution: For each agent within a given workflow, the micro-details are refined. The prompts that define each agent’s “personality” and function are themselves evolved to better fit the task.

- Evaluation & Selection: The candidate workflow is executed, its output is scored for fitness, and the most successful variants are retained to parent the next generation.

The elegance of the approach lies in its self-containment. The same foundational model serves as architect, mutator, and executor: a closed loop of cognitive self-improvement that requires no external optimization machinery.
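The loop described above can be sketched in a few dozen lines. This is a minimal illustration, not the paper's or EvoAgentX's implementation: the `Workflow` class, the agent pool, `toy_llm`, and `toy_fitness` are all hypothetical stand-ins, and a random edit replaces the LLM-driven topology mutation so the example runs without any API calls.

```python
import random
from dataclasses import dataclass
from typing import Callable, Dict, List

LLM = Callable[[str], str]  # prompt in, text out

AGENT_POOL = ["planner", "coder", "tester", "reviewer"]  # hypothetical roles


@dataclass
class Workflow:
    order: List[str]         # macro level: which agents run, in what order
    prompts: Dict[str, str]  # micro level: one instruction per agent


def mutate_workflow(wf: Workflow, rng: random.Random) -> Workflow:
    """Workflow evolution: add or remove one agent.
    A random edit stands in for the LLM-driven mutation prompts."""
    order = list(wf.order)
    if len(order) > 1 and rng.random() < 0.5:
        order.pop(rng.randrange(len(order)))
    else:
        order.insert(rng.randrange(len(order) + 1), rng.choice(AGENT_POOL))
    prompts = {a: wf.prompts.get(a, f"You are the {a}.") for a in order}
    return Workflow(order, prompts)


def mutate_agents(wf: Workflow, llm: LLM) -> Workflow:
    """Agent evolution: ask the LLM to rewrite each agent's instruction."""
    prompts = {a: llm(f"Improve this instruction: {p}") for a, p in wf.prompts.items()}
    return Workflow(list(wf.order), prompts)


def evolve(seed: Workflow, llm: LLM, fitness: Callable[[Workflow], float],
           generations: int = 5, population: int = 4, keep: int = 2,
           rng_seed: int = 0) -> Workflow:
    """Evaluation and selection: keep the fittest variants as next parents."""
    rng = random.Random(rng_seed)
    parents = [seed]
    for _ in range(generations):
        children = [
            mutate_agents(mutate_workflow(rng.choice(parents), rng), llm)
            for _ in range(population)
        ]
        # Parents compete with their children, so the best score never drops.
        parents = sorted(parents + children, key=fitness, reverse=True)[:keep]
    return parents[0]


def toy_llm(prompt: str) -> str:
    """Stand-in for a real model: echoes the instruction with a suffix."""
    return prompt.split(": ", 1)[1] + " Think step by step."


def toy_fitness(wf: Workflow) -> float:
    """Stand-in for benchmark scoring: reward coder+tester, penalize length."""
    return len(set(wf.order) & {"coder", "tester"}) - 0.1 * len(wf.order)


seed = Workflow(["coder"], {"coder": "You are the coder."})
best = evolve(seed, toy_llm, toy_fitness)
print(best.order, toy_fitness(best))
```

In a real run, `toy_fitness` would be replaced by executing the workflow on held-out tasks and scoring the output, which is where most of the token cost comes from.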

First- and Second-Order Evolution

The paper distinguishes between two modes of agent-level evolution:

- Direct Evolution (DE): The agent’s prompt is directly mutated.

- Hyper Evolution (HE): The prompt that mutates the prompt is itself mutated. This second-order search proved more stable, a fascinating insight into the dynamics of guided textual evolution. It’s not about changing the solution; it’s about “learning” how to change the solution more effectively.
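The difference between the two modes is easiest to see side by side. The sketch below is an assumption-laden illustration: the function names, the prompt layout, and the `echo_llm` stub are hypothetical and are not taken from the paper or the repository.

```python
from typing import Callable, List, Tuple

LLM = Callable[[str], str]  # prompt in, text out


def direct_evolution(llm: LLM, agent_prompt: str, mutation_prompt: str) -> str:
    """First-order: apply a fixed mutation prompt to the agent's prompt."""
    return llm(f"{mutation_prompt}\n\nINSTRUCTION:\n{agent_prompt}")


def hyper_evolution(llm: LLM, agent_prompt: str, mutation_prompt: str,
                    hyper_prompt: str) -> Tuple[str, str]:
    """Second-order: rewrite the mutation prompt first, then apply it.
    Returns the new agent prompt and the evolved mutation prompt."""
    new_mutation = llm(f"{hyper_prompt}\n\nMUTATION PROMPT:\n{mutation_prompt}")
    return direct_evolution(llm, agent_prompt, new_mutation), new_mutation


calls: List[str] = []


def echo_llm(prompt: str) -> str:
    """Stand-in model: logs the request and tags its last line as rewritten."""
    calls.append(prompt)
    return "[rewritten] " + prompt.splitlines()[-1]


de_prompt = direct_evolution(echo_llm, "Write code.", "Make it clearer.")
he_prompt, evolved_mutation = hyper_evolution(
    echo_llm, "Write code.", "Make it clearer.", "Improve this mutation prompt."
)
```

Hyper evolution costs one extra LLM call per step, but the evolved mutation prompt can be carried forward and reused in later generations.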

A Quick Tour of the EvoAgentX Repository

The open‑source implementation, EvoAgentX, is refreshingly easy to navigate:

- evoagentx/agents/ and evoagentx/workflow/ – primitive agent and workflow classes.

- evoagentx/prompts/ – a library of mutation, hyper‑mutation, and thinking‑style prompts.

- evoagentx/benchmark/ – scripts for HumanEval, MBPP, and the gargantuan LiveCodeBench.

- examples/ – step‑by‑step walkthroughs (I recommend starting here).

Spin it up with:

pip install -e .

python examples/run_sew.py --task "Write a palindrome checker"

Expect it to spawn a lot of LLM calls – evolution isn’t cheap.

Empirical Footprints

The true test of any architectural philosophy is its empirical performance. Here, the results are compelling.

| Dataset | Backbone (GPT‑4o‑mini) | + SEW | Improvement |
|---|---|---|---|
| HumanEval | 80.2 % | 92.1 % | +11.9 pp |
| MBPP | 63.4 % | 84.1 % | +20.7 pp |
| LiveCodeBench | 38.0 % | 50.9 % | +12.9 pp |

pass@1 metric, from the paper’s Table 1
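Percentage points and relative gains tell slightly different stories, so it can help to compute both. The snippet below uses only the figures in the table; the `gains` helper is a name introduced here for illustration.

```python
# (baseline pass@1, SEW pass@1) in percent, copied from the table above.
results = {
    "HumanEval": (80.2, 92.1),
    "MBPP": (63.4, 84.1),
    "LiveCodeBench": (38.0, 50.9),
}


def gains(baseline: float, evolved: float) -> tuple:
    """Return (absolute gain in pp, relative gain in % of the baseline)."""
    absolute_pp = evolved - baseline
    relative_pct = 100 * absolute_pp / baseline
    return round(absolute_pp, 1), round(relative_pct, 1)


summary = {name: gains(*scores) for name, scores in results.items()}
for name, (pp, rel) in summary.items():
    print(f"{name}: +{pp} pp, +{rel}% relative")
```

In absolute terms MBPP gains the most, while relative to its baseline LiveCodeBench improves by a similar or slightly larger margin, since it starts from a much lower score.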

The data tells a clear story. Autonomous evolution consistently outperforms a static, albeit powerful, baseline model. A few points are worth noting:

- The gains are most pronounced on MBPP, a dataset of more basic programming tasks. This suggests that for simpler problems, the space of possible solutions is vast and human-designed workflows are more likely to be stuck in a local optimum. Evolution has more room to explore.

- The co-evolution of workflows and agents is critical. Experiments that only evolved the workflow topology saw significantly smaller gains, reinforcing the idea that structure and function must adapt in concert.

- The team’s study of workflow representations found that CoRE (Code-oriented Representation) offered the best balance of human readability and successful generation by the LLM, a pragmatic piece of guidance for anyone building similar systems.

For those inclined to experiment, the team has open-sourced their work as the EvoAgentX project, a welcome move towards transparency and reproducibility.

Capabilities and Their Costs

The promise of a fully autonomous system that engineers its own solutions is immense. Handing a system a high-level task and having it return not just an answer, but a bespoke, optimized workflow for deriving that answer, is a significant leap.

But evolution, in silicon as in nature, is not without its costs.

- Computational Expense: The search process is computationally voracious. A single run on a complex benchmark can burn through millions of tokens, a stark reminder that the fitness landscape is vast and traversing it carries a price.

- Execution Fragility: Not all evolutionary paths lead to success. The paper notes that some evolved workflows become unstable, terminating on a “reviewer” agent and thus failing to produce a final, usable output. The search is not guaranteed to converge on a robust solution.

- Generalization: The focus here is on code generation. Whether this evolutionary model can generalize to more abstract reasoning, planning, or multimodal tasks remains an open and critical question.
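One cheap guard against the execution-fragility problem is to reject candidates that cannot produce a final answer before spending tokens on them. The check below is a hypothetical sketch, not something the paper or EvoAgentX is known to implement; it assumes a workflow can be summarized as an ordered list of role names and that a "reviewer" never emits the final output.

```python
from typing import List, Sequence

# Assumption: roles that critique but never emit the final artifact.
NON_TERMINAL_ROLES = {"reviewer"}


def produces_final_output(order: Sequence[str]) -> bool:
    """True if the workflow is non-empty and does not end on a critique-only role."""
    return bool(order) and order[-1] not in NON_TERMINAL_ROLES


def filter_candidates(candidates: Sequence[Sequence[str]]) -> List[Sequence[str]]:
    """Drop workflows that would terminate without a usable output."""
    return [c for c in candidates if produces_final_output(c)]


candidates = [
    ["planner", "coder"],
    ["coder", "reviewer"],            # ends on a reviewer: rejected
    ["coder", "reviewer", "coder"],
    [],                               # empty workflow: rejected
]
valid = filter_candidates(candidates)
```

A structural filter like this only catches one failure mode; workflows can still fail at run time, so scoring on real executions remains necessary.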

The Primacy of the Process

What SEW demonstrates is a fundamental shift in how we might approach complex problem-solving with AI. It moves the human operator up a level of abstraction: away from being the one who writes the prompts and towards being the one who defines the selection pressures. Our role shifts from that of a micro-manager to that of a governor of an evolutionary process.

The performance gains are real and the methodology is sound. While the costs are currently non-trivial, the trajectory is clear. The future of complex agentic systems may lie not in ever more intricate human designs, but in creating the conditions for those systems to discover and refine themselves. We aren’t just building machines; we are cultivating digital ecosystems that learn how to learn.