Key Takeaways

- Bidirectional reasoning boosts accuracy: Injecting reverse thinking during training lifts a 7 B parameter model by ~13 % on average across 12 reasoning benchmarks.

- Efficient at test‑time: The student still performs a single forward pass-back‑reasoning is only learned, not executed, at inference.

- Sample‑efficient: With just 10 % of the training data, REVTHINK outperforms a standard fine‑tune that uses the full dataset.

- Scales and generalises: Gains persist from 2 B to 8 × 22 B models and translate to out‑of‑distribution tasks (e.g. BoolQ, GSM8K‑Reversal).

Introduction

Large Language Models (LLMs) have become uncanny pattern-completion engines, yet their grasp on multi-step reasoning remains brittle. A purely forward-pass inference is a fragile thing, a chain of logic susceptible to a single, silent, catastrophic failure along the way. Humans rarely operate this way. We sanity-check our conclusions, often by working backwards from the answer to the premises.

This cognitive tic-this habit of inversion-is the inspiration behind the recent paper “Reverse Thinking Makes LLMs Stronger Reasoners” from Google Research and UNC. Their approach, REVTHINK, teaches a student model to internalize this bidirectional reasoning, embedding the discipline of a logical round-trip into the weights themselves. The result is a stronger reasoner that remains just as cheap to run at inference time.

Here, I’ll unpack the core idea, walk through its implementation, and consider where reverse-enhanced training fits in the practitioner’s toolbox.

Why Reverse Thinking?

“Invert, always invert.” – Carl Jacobi

Forward reasoning (Q → A) is an open loop. Reverse reasoning (A → Q) closes it, acting as an immediate consistency check.

Question : Emma has 2 apples, Jack has 3. How many altogether?

Forward : 2 + 3 = 5 ✅

Backward : Assume total is 5. If Emma has 2, Jack must have 3 ✅ (matches premise)If the forward chain had yielded 6, the backward check would instantly reveal the contradiction. The core insight of REVTHINK is to bake this duality into the model’s architecture through a teacher-student distillation process, rather than bearing the cost of running two chains at inference.

The REVTHINK Pipeline

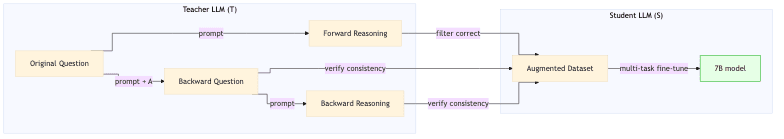

1. Data Augmentation via the Teacher

For each (Q, A) training pair, the process forks:

- A forward reasoning chain

R_fis generated. - A backward question

Q_bis formulated to invert the task. - A backward reasoning chain

R_bis generated to answerQ_b.

The signal is sharpened by a brutal filtering step: only instances where R_f is correct and R_b is consistent with the original question survive to become part of the augmented dataset.

2. Multi-Task Objectives

During fine-tuning, the student minimizes a composite loss across three tasks:

This multi-task objective forces the model to internalize the full logical loop, structuring its latent space not just to find answers, but to understand their provenance.

Minimal Implementation Walk-Through

The implementation is surprisingly direct. Below is a skeletal view using PyTorch and HuggingFace Transformers that mirrors the authors’ LoRA setup. It presumes an augmented.jsonl file has been produced with the keys question, forward_reasoning, backward_question, and backward_reasoning.

from peft import LoraConfig, get_peft_model

from transformers import AutoModelForCausalLM, AutoTokenizer, TrainingArguments, Trainer

import json, torch

MODEL_NAME = "mistralai/Mistral-7B-Instruct-v0.3" # or "google/gemma-7b-it"

tok = AutoTokenizer.from_pretrained(MODEL_NAME, use_fast=True)

model = AutoModelForCausalLM.from_pretrained(MODEL_NAME, torch_dtype=torch.bfloat16, device_map='auto')

lora_cfg = LoraConfig(r=32, target_modules=["q_proj","v_proj"], lora_dropout=0.05)

model = get_peft_model(model, lora_cfg)

# --- dataset ---------------------------------------------------------------

def make_example(record):

prompt = f"""### Question:\n{record['question']}\n### Forward Reasoning:\n"""

target = record['forward_reasoning']

# We concatenate the two auxiliary tasks, separated by special tokens

prompt += "<aux1>\n" + record['backward_question'] + "\n<aux2>\n"

target += "\n" + record['backward_reasoning']

ids = tok(prompt, return_tensors='pt').input_ids[0]

labels = tok(target, return_tensors='pt').input_ids[0]

return {"input_ids": ids, "labels": labels}

train_data = [make_example(json.loads(l)) for l in open("augmented.jsonl")]

# --- training -------------------------------------------------------------

targs = TrainingArguments(

output_dir="revthink_lora",

per_device_train_batch_size=4,

gradient_accumulation_steps=8,

num_train_epochs=3,

learning_rate=5e-6,

fp16=True,

)

trainer = Trainer(model=model, args=targs, train_dataset=train_data)

trainer.train()A pragmatic note: use vLLM for the teacher sampling. The speedup on long-context decoding is non-trivial and materially impacts the cost of data augmentation.

Results at a Glance

| Model | Avg. Zero‑shot | + SKD | + REVTHINK |

|---|---|---|---|

| Mistral‑7B | 50.6% | 56.3% | 63.2% |

| Gemma‑7B | 46.8% | 54.7% | 61.2% |

Across a suite of eight in-domain benchmarks covering commonsense, math, and logic, REVTHINK adds a significant 6–7 percentage points on top of standard symbolic knowledge distillation (SKD).

The efficiency gains are notable. With just 10% of the training data, the reverse-enhanced student outperforms a model trained on the full dataset using SKD alone-a valuable trade-off when teacher inference is the bottleneck.

The effect also scales. While gains diminish slightly on massive Mixture-of-Experts models (8 × 22B), the upshot is a 7B parameter model that punches above its weight. A REVTHINK-trained 7B model edges out a 176B baseline in zero-shot, achieving comparable performance without the corresponding computational and operational cost. Performance lifts also transfer to out-of-distribution tasks like BoolQ (+2%), OpenBookQA (+3%), and e-SNLI (+5%), suggesting the model is learning a deeper representation of logic rather than just surface-level patterns.

When Does It Help Most?

The gains are not uniform. The technique thrives on tasks with clear invertible structures, such as algebra, precalculus, and date arithmetic. The largest performance jumps were seen in moderately difficult problems (e.g., level-3 MATH), whereas domains like number theory, which are hard to invert, showed limited benefit.

This suggests reverse-thinking acts as a kind of cognitive scaffolding, most valuable where the model’s own parametric knowledge is thinnest or the logical path is clear. It provides a structured way to reinforce reasoning pathways that smaller models might otherwise struggle to form.

Practical Notes & Pitfalls

Execution demands a degree of intellectual hygiene. The quality of the augmented data is paramount; feeding the student inconsistent or flawed reasoning chains from the teacher is worse than useless-it’s actively detrimental. Rigorous filtering of the teacher’s outputs is not optional.

Furthermore, while the authors find that mixing REVTHINK with standard Answer-Augmentation yields additive gains, one should avoid relying solely on the backward-reasoning task (Q_b → R_b) for training, as this can induce a distribution shift that harms forward-reasoning capabilities. The multi-task objective is key. Finally, remember the goal: the backward reasoning is a training-time construct. Running it at inference defeats the purpose-the knowledge should already be baked in.

Limitations & Future Work

The approach is not a panacea. Distillation is not alchemy; it cannot turn a biased teacher into a wise student. Any toxicity or factual errors in the teacher model will propagate. Post-training alignment remains a necessity. The technique also delivers modest returns for domains lacking a clear reverse mapping.

The obvious extensions are intriguing. One could imagine multi-hop backward reasoning, where the reverse chain is recursively generated, or applying reinforcement learning with a “consistency critic” to reward the model for generating logically sound loops.

Closing Thoughts

REVTHINK is a stark reminder that scaling parameters is a brute-force approach. The more elegant path often lies in structuring the pedagogy-in being smarter about how we teach. Embedding the human habit of double-checking via inversion is both effective and economical.

If you’re fine-tuning a bespoke model for reasoning tasks and can afford a single pass of teacher-led data augmentation, this technique is well worth the investment. We are building more than just pattern-matchers; we’re teaching machines how to think. And sometimes, the most effective way forward is to teach them how to reason backward.