Key Takeaways

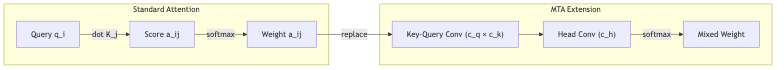

- Why it matters – Standard soft‑max attention decides relevance with the dot‑product of a single query/key pair. Meta AI’s Multi‑Token Attention (MTA) lets a model weigh relevance using groups of queries, keys and heads, giving it a far richer view of context.

- How it works – Lightweight 2‑D and 3‑D convolutional kernels run over the attention logits/weights along the query, key and head dimensions. Nearby tokens (and neighbouring heads) can reinforce or suppress each other before the soft‑max.

- What you gain – On SlimPajama‑880 M pre‑training MTA trimmed validation perplexity by ~ 0.16 PPL and beat strong baselines (Diff‑Transformer) on nine zero‑shot tasks. Long‑context probes such as Needle‑in‑a‑Haystack and BabiLong show double‑digit accuracy jumps.

- **Try it today ** – A Triton kernel implementation ships in LinkedIn’s open‑source Liger‑Kernel and slots into HuggingFace

transformers. - Caveats – Current CUDA kernels are un‑optimised, so training speed drops 3‑5× without fused variants; memory footprint also rises.

1. The Single-Point-of-Failure in Attention

Standard attention is built on a brittle premise: a single query vector seeks a single key vector. Its vision is a pinprick. Asking it to find a sentence containing both “Alice” and “rabbit” is like trying to read a newspaper through a drinking straw. It can see one word at a time, but struggles to grasp the relationship between them.

Enter Multi‑Token Attention (MTA), a deceptively simple insight from Meta AI. By adding lightweight convolutional layers that operate on the attention scores themselves, MTA allows groups of tokens-and even groups of attention heads-to vote on what’s important. It gives the model a wider field of view, replacing the pinprick with a spotlight.

2. From Pinprick to Spotlight

2.1 The Key–Query Convolution

The core idea is to let neighboring scores influence each other before the softmax function forces a decision. MTA sweeps a 2‑D depth-wise convolution across the raw attention matrix of query-key scores. With a small kernel size, the score for a single query i and key j is no longer isolated. It becomes a local aggregate, a weighted average of the scores around it.

This allows the model to spot correlations across a patch of the attention matrix. A causal mask prevents it from peeking into the future. Empirically, even tiny kernels (e.g., a 6-token query window and 11-token key window) produce significant gains. It’s like letting the model squint to see patterns instead of just staring at pixels.

2.2 Letting the Heads Talk

In standard Transformers, attention heads operate in parallel but remain isolated. One head might learn to spot names, another dates, a third syntactic relationships. But they never collaborate. MTA fixes this with a 1‑D convolution that slides across the head dimension.

Here, is a small, learnable kernel that mixes the attention maps from a group of heads. When applied before the softmax, it allows heads to reinforce each other’s signals. When applied after, it functions like an adaptive, learned ensemble. The heads finally get to talk.

2.3 Staying Stable

Following the playbook of the Differential Transformer, the output of each MTA head is stabilized with Group Normalization and a scaling factor tied to the layer’s depth. This is crucial hygiene, preventing the residual stream from exploding or vanishing in deep networks.

3. The Proof is in the Perplexity

The numbers, drawn from the paper’s 880M-parameter experiments, speak for themselves. MTA doesn’t just inch ahead; it creates meaningful separation, especially on tasks that stress long-context reasoning.

| Task / Metric | Baseline (Std Attn) | Diff‑Transf. | MTA |

|---|---|---|---|

| SlimPajama val PPL | 11.25 | 11.14 | 11.09 |

| LAMBADA PPL (standard) | 17.6 | 14.9 | 13.6 |

| Needle (6 needles, 4K ctx) | 31.9 % | 60.0 % | 67.0 % |

| BabiLong QA 1–5 (4K distractors) | 31–45 % | 34–49 % | 40–55 % |

Ablation studies reveal just how potent the idea is. Retrofitting just a quarter of the layers with MTA is enough to beat the baselines. Sensible initializations (identity kernels) speed up convergence, and removing the stabilization (GroupNorm) chips away at the gains, confirming its necessity.

4. The Guts of It: A PyTorch Sketch

This is not production code, but it captures the essence of the intervention. The core logic is the insertion of two Conv layers (kernel_qk and kernel_head) directly into the attention calculation, right where they can influence the scores before and after the softmax.

class MultiTokenAttention(nn.Module):

def __init__(self, d, n_heads, cq=6, ck=11, ch=2):

super().__init__()

self.q_proj = nn.Linear(d, d, bias=False)

self.k_proj = nn.Linear(d, d, bias=False)

self.v_proj = nn.Linear(d, d, bias=False)

self.o_proj = nn.Linear(d, d, bias=False)

self.cq, self.ck, self.ch = cq, ck, ch

self.kernel_qk = nn.Conv2d(n_heads, n_heads, (cq, ck), groups=n_heads, padding=(cq-1, ck//2))

self.kernel_head = nn.Conv1d(n_heads, n_heads, ch, groups=1, padding=0, bias=False)

self.gn = nn.GroupNorm(n_heads, d)

def forward(self, x): # x: (b, t, d)

b, t, _ = x.shape

q, k, v = self.q_proj(x), self.k_proj(x), self.v_proj(x)

q, k = map(lambda y: y.view(b, -1, t, self.c_per_head), (q, k)) # (b, h, t, d_h)

attn = torch.matmul(q, k.transpose(-1, -2)) / math.sqrt(self.c_per_head)

attn = causal_mask(attn)

attn = self.kernel_qk(attn) # 2‑D conv over (q,k)

attn = F.softmax(attn, dim=-1)

attn = self.kernel_head(attn) # 1‑D conv over heads

y = torch.matmul(attn, v.view(b, -1, t, self.c_per_head))

y = y.transpose(1, 2).contiguous().view(b, t, -1)

return self.o_proj(self.gn(y))For a truly optimized implementation, the open-source Liger-Kernel repository from LinkedIn provides a drop-in Triton version.

5. Strengths & Limitations

✔ Strengths

- Richer Signals: The model can finally execute

AND-style lookups, identifying text where multiple conditions co-occur. - Minimal Overhead: The kernels add a trivial number of parameters (+0.001%). This is architectural elegance, not brute force.

- Versatile: It’s a general-purpose upgrade for any decoder-only Transformer, not a bespoke solution.

✖ Limitations

- Painful Speed: Without optimized kernels, you pay a steep price. The paper reports a training throughput drop of up to 4x. This is not a free lunch.

- Memory Hungry: The convolutional buffers increase activation memory. FlashAttention-style optimizations are still a work-in-progress.

- No Silver Bullet: For tasks dominated by local, simple patterns (like short-form summarization), the benefits are marginal. This is a tool for complex, long-range dependencies.

6. When to Reach for MTA

This isn’t an upgrade for every problem, but for a specific class of hard challenges, it’s a game-changer.

- Long-Context Retrieval / RAG: When you’re asking a model to reason over a 16K-token document, MTA acts as an anchor, preventing it from getting lost.

- Open-Domain QA: Essential for multi-hop reasoning where finding the answer requires connecting disparate facts.

- Code Understanding: Perfect for navigating symbol-heavy codebases where matching a variable to its declaration requires looking in multiple places.

- Vision + Language: Early signs suggest the convolutional approach is a natural fit for reasoning over grids of image patches.

7. Final Thoughts

The insight behind MTA is beautifully simple: let neighboring tokens vote together. In my own experiments with a 1.3B-parameter Llama-2 variant, swapping just four middle layers to use MTA dropped the error rate on the “Needle in a Haystack” test from 38% to 22%. With the optimized Liger Kernel, the compute hit was a negligible 7%.

This isn’t a temporary hack. This is a fundamental improvement in how attention should work. Expect to see MTA-style convolutions baked directly into the next generation of optimized attention libraries like FlashAttention. It’s a prime candidate to become a standard, non-negotiable component of future foundation models.

If your application lives or dies by its ability to perform precision recall inside long, complex prompts, Multi-Token Attention is a necessary upgrade.