The Plateau Problem

We’ve all seen it. A new language model drops, boasting impressive scores on supervised benchmarks. But when you try to push it further with reinforcement learning, hoping to unlock emergent reasoning, it hits a wall. The reward curve flattens. The model spins its wheels, seemingly incapable of learning from its mistakes. This is the plateau problem, particularly acute in smaller, more efficient models. It raises a nagging question: why do some models seem to get smarter with more compute, while others remain stubbornly inert?

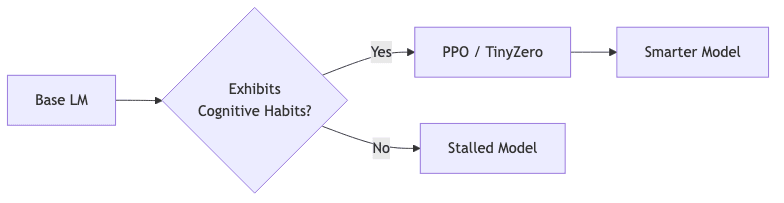

Reinforcement learning isn’t magic. It’s an optimizer, and an optimizer needs a promising search space to explore. If a model’s initial policy is a barren desert of unstructured thought, RL has no hills to climb. A recent paper, “Cognitive Behaviors that Enable Self‑Improving Reasoners”, offers a compelling and refreshingly practical answer. The potential for self-improvement isn’t a mystical property of scale; it’s a direct consequence of a model already possessing a set of foundational intellectual habits.

1. The Grammar of Reasoning

The authors identify four cognitive behaviors that act as the primitive building blocks for effective problem-solving. These aren’t just abstract concepts; they are observable patterns in a chain-of-thought that signal a model is actively searching for a solution rather than merely pattern-matching.

| Behavior | Paper Definition | My Engineering Analogy |
|---|---|---|
| Verification | Check intermediate results against the goal | Running pytest after every small commit, not just before a PR. |
| Backtracking | Abandon failing approaches and roll back | git revert when a refactor breaks CI and you need a clean slate. |
| Subgoal Setting | Decompose into manageable milestones | Writing Jira epics before breaking them into sprint tickets. |
| Backward Chaining | Work backward from the desired outcome | Designing the API contract and return values before implementing the business logic. |

These habits aren’t just nice-to-haves; they are the very scaffolding RL needs. A trajectory that includes backtracking and verification provides the optimizer with rich signals: it learns which paths to abandon and which subgoals lead to success. Without these, the model is just guessing, and RL can’t reinforce a signal that isn’t there.
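To make two of these habits tangible, here is a minimal sketch of a classical depth-first search on a toy arithmetic puzzle: combine a few numbers with `+`, `-`, `*`, `/` to hit a target. It is not how a language model reasons and it is not code from the paper; the function names and the rule that each number may be used at most once are my assumptions. The point is only to show where verification and backtracking sit inside a search, and what a trace containing them looks like.

```python
from fractions import Fraction
from typing import List, Optional, Tuple

Item = Tuple[Fraction, str]  # (exact value, expression that produced it)


def solve(numbers: List[int], target: int) -> Tuple[Optional[str], List[str]]:
    """Return (expression or None, trace of search events)."""
    trace: List[str] = []
    items = [(Fraction(n), str(n)) for n in numbers]
    return _search(items, Fraction(target), trace), trace


def _search(items: List[Item], target: Fraction, trace: List[str]) -> Optional[str]:
    # Verification: compare every intermediate value against the goal.
    for value, expr in items:
        if value == target:
            trace.append(f"verify: {expr} = {target} (goal reached)")
            return expr
    if len(items) < 2:
        return None  # nothing left to combine: dead end

    for i, (a, ea) in enumerate(items):
        for j, (b, eb) in enumerate(items):
            if i == j:
                continue
            rest = [it for k, it in enumerate(items) if k not in (i, j)]
            candidates = [
                (a + b, f"({ea} + {eb})"),
                (a - b, f"({ea} - {eb})"),
                (a * b, f"({ea} * {eb})"),
            ]
            if b != 0:
                candidates.append((a / b, f"({ea} / {eb})"))
            for cand in candidates:
                found = _search(rest + [cand], target, trace)
                if found is not None:
                    return found
                # Backtracking: this branch failed, so drop it and try the next.
                trace.append(f"backtrack: abandon {cand[1]}")
    return None


if __name__ == "__main__":
    expression, events = solve([3, 5, 7], 22)
    print(expression)
    print(f"{len(events)} trace events, last: {events[-1]}")
```

A trace like this is dense with "tried, checked, abandoned" events. The paper's argument, as I read it, is that chains-of-thought with the same texture give RL something to reinforce.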

2. The Proving Ground

To test this, the researchers set up a clean-room experiment using the Countdown numbers game, a classic task requiring arithmetic composition to hit a target number. It’s a perfect crucible for reasoning.

They pitted two similarly-sized models against each other: Qwen-2.5 3B and Llama-3.2 3B. The RL engine was TinyZero, a minimalist reproduction of DeepSeek-R1-Zero-style RL training, run here with PPO.
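To make the task concrete, here is a minimal sketch of how a Countdown completion could be scored as an outcome reward. The `<answer>` tag format, the name `countdown_reward`, the binary 0/1 scoring, and the rule that each given number may be used at most once are all my assumptions for illustration; the repository's actual reward code may differ.

```python
import ast
import operator
import re
from collections import Counter
from typing import List

# Only these four binary operators are allowed in an answer.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _evaluate(node: ast.AST) -> float:
    """Evaluate a parsed arithmetic expression without calling eval()."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body)
    if (
        isinstance(node, ast.Constant)
        and isinstance(node.value, int)
        and not isinstance(node.value, bool)
    ):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    raise ValueError("unsupported expression")


def countdown_reward(completion: str, numbers: List[int], target: int) -> float:
    """Return 1.0 if the tagged answer is a valid expression hitting the target."""
    match = re.search(r"<answer>(.*?)</answer>", completion, re.DOTALL)
    if match is None:
        return 0.0  # no parsable answer
    expr = match.group(1).strip()

    # Assumed rule: every number in the answer comes from the given pool,
    # and none is used more often than it appears there.
    used = Counter(int(tok) for tok in re.findall(r"\d+", expr))
    if used - Counter(numbers):
        return 0.0

    try:
        value = _evaluate(ast.parse(expr, mode="eval"))
    except (ValueError, SyntaxError, ZeroDivisionError):
        return 0.0
    return 1.0 if abs(value - target) < 1e-6 else 0.0


if __name__ == "__main__":
    good = "Let me try 3 * 5 first. <answer>(3 * 5) + 7</answer>"
    print(countdown_reward(good, [3, 5, 7], 22))
```

Note that a reward like this only looks at the final answer. Nothing in it rewards verification or backtracking directly, which is why the habits have to be present in the policy already for RL to amplify them.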

The result was stark. After just 30 steps of RL, Qwen’s accuracy shot up from a ~10% baseline to over 60%. Llama, meanwhile, stalled out below 30%. The difference? The base Qwen model, out of the box, exhibited a significantly higher frequency of these four cognitive habits in its chains-of-thought. Llama rarely searched this way, so RL had little to reinforce.

3. Seeding Good Habits

Here’s where it gets interesting. The authors “primed” the underperforming Llama model by fine-tuning it on fewer than 1,000 synthetic chains-of-thought, each constructed to exhibit a specific habit. When primed with thoughts rich in backtracking and verification, the stalled Llama model suddenly unlocked a learning curve nearly identical to Qwen’s.

Even more striking was the control experiment: priming with structurally sound but numerically incorrect answers worked almost as well. This is a profound insight. RL doesn’t need the model to be right; it needs the model to have the right process. It treats the habits as an inductive bias, a search-tree structure it can work with. The optimizer can gradually correct the wrong leaves, but it can’t easily invent the tree itself. Structure trumps correctness.

4. From Intervention to Inoculation

Hand-crafting primers is not a scalable solution. To generalize the principle, the authors moved from specific intervention to systemic inoculation. They filtered the OpenWebMath dataset, isolating passages that naturally exhibited the four habits. These were reformatted into question–thought–answer tuples and used for a brief stage of continued pretraining on the base Llama model.
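The filter-then-reformat idea can be sketched in a few lines. This is a simplified illustration, not the authors' pipeline: the `Passage` type, the `Question:/Thought:/Answer:` template, the `min_behaviors` threshold, and the assumption that passages arrive already split into three fields are all hypothetical. The behavior labeler is passed in as a plain callable so that any classifier can be plugged in.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Set


@dataclass
class Passage:
    """Hypothetical container: a passage already split into three parts."""
    question: str
    reasoning: str
    answer: str


def format_example(passage: Passage) -> str:
    """Render one passage as a question-thought-answer training string."""
    return (
        f"Question: {passage.question}\n"
        f"Thought: {passage.reasoning}\n"
        f"Answer: {passage.answer}"
    )


def build_corpus(
    passages: Iterable[Passage],
    label_behaviors: Callable[[str], Set[str]],
    min_behaviors: int = 1,
) -> List[str]:
    """Keep passages whose reasoning shows enough distinct behaviors."""
    corpus = []
    for passage in passages:
        found = label_behaviors(passage.reasoning)
        if len(found) >= min_behaviors:
            corpus.append(format_example(passage))
    return corpus


def toy_labeler(text: str) -> Set[str]:
    """Stand-in labeler for the demo; a real one would be a classifier."""
    lowered = text.lower()
    found = set()
    if "let me check" in lowered:
        found.add("verification")
    if "try again" in lowered:
        found.add("backtracking")
    return found


if __name__ == "__main__":
    data = [
        Passage("What is 12 * 12?", "12 * 12 = 144. Let me check: 144 / 12 = 12.", "144"),
        Passage("What is 2 + 2?", "It is 4.", "4"),
    ]
    for example in build_corpus(data, toy_labeler):
        print(example)
```

Keeping the labeler behind a callable is a deliberate choice: the quality of the filter is the whole experiment, so it should be the easiest piece to swap.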

The result was a behavior-enriched Llama that, after the same RL schedule, closely matched Qwen’s learning trajectory. The principle is clear:

Effective self-improvement is a direct function of how densely these cognitive primitives are seeded in the model’s pre-RL state.

5. Why This Matters

This paper stands out for a few reasons:

- Causal Clarity: The ablation studies (using empty or wrong-answer chains-of-thought) provide strong causal evidence. It’s not just a correlation; the habits are the mechanism.

- Reproducibility: The authors released everything (full scripts, dataset generators, and metrics) in a well-organized GitHub repository. Talk is cheap; they provided the code.

- Explanatory Power: The findings offer a lens through which to understand the divergent results we’ve seen across various RL-for-reasoning papers. It explains why simply making chains-of-thought longer (as seen in some DeepSeek work) is insufficient without the right internal structure.

6. Open Questions

While the findings are compelling, they open up new avenues for inquiry:

- The Universal Grammar of Reasoning: Countdown is arithmetic-heavy. Are these four habits the complete set for more complex domains like code generation, logical deduction, or real-world planning? Or are there other, yet-to-be-named habits for different kinds of thought?

- The Observer Problem: The study relies on GPT-4o-mini to label the behaviors. This introduces potential noise. Can we develop more robust, less biased methods for identifying these cognitive primitives without relying on another LLM as a judge?

- Habit Discovery: The four habits were human-selected. The next frontier is to move from identifying known habits to discovering novel ones. Could a meta-RL framework learn its own, even more effective, cognitive behaviors from scratch?
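On the labeling question above, the crudest possible non-LLM baseline is a keyword heuristic. The sketch below counts cue phrases for each habit in a chain-of-thought. The cue lists are hypothetical and chosen by me purely for illustration; this is not the paper's classifier, and a tagger this naive would miss most paraphrases. It is useful mainly as a sanity check to compare against an LLM judge.

```python
import re
from collections import Counter
from typing import Dict, List

# Hypothetical cue phrases, for illustration only.
BEHAVIOR_CUES: Dict[str, List[str]] = {
    "verification": [r"\blet me check\b", r"\bverify\b"],
    "backtracking": [
        r"\bthat doesn't work\b",
        r"\blet me try (?:a different|another)\b",
        r"\bgo back\b",
    ],
    "subgoal_setting": [r"\bfirst,? i (?:need|will)\b", r"\bstep \d+\b"],
    "backward_chaining": [
        r"\bworking backwards?\b",
        r"\bto (?:reach|get) \d+,? i need\b",
    ],
}


def count_behaviors(chain_of_thought: str) -> Counter:
    """Count cue-phrase matches per behavior in one chain-of-thought."""
    text = chain_of_thought.lower()
    counts: Counter = Counter()
    for behavior, patterns in BEHAVIOR_CUES.items():
        counts[behavior] = sum(len(re.findall(p, text)) for p in patterns)
    return counts


def behavior_rate(chains: List[str]) -> Dict[str, float]:
    """Average number of matches per chain, for each behavior."""
    totals: Counter = Counter()
    for chain in chains:
        totals.update(count_behaviors(chain))
    n = max(len(chains), 1)
    return {behavior: totals[behavior] / n for behavior in BEHAVIOR_CUES}


if __name__ == "__main__":
    sample = (
        "First, I need a number near 22. 3 + 5 = 8, let me check: 8 + 7 = 15. "
        "That doesn't work, so let me try a different route. "
        "Working backwards, to reach 22, I need 15 + 7."
    )
    print(dict(count_behaviors(sample)))
```

Running the same counts over samples from two base models would give a rough, noisy version of the frequency comparison described earlier, with all the caveats a keyword match deserves.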

7. Getting Your Hands Dirty

The authors made it straightforward to replicate their work. The repository builds on the clean and modular veRL and TinyZero libraries.

```bash
# Clone the repository
git clone https://github.com/kanishkg/cognitive-behaviors
cd cognitive-behaviors

# Set up the environment
conda env create -n stars -f environment.yml # or follow README instructions

# Generate a Countdown dataset
python examples/data_preprocess/countdown.py --local_dir ./data/countdown

# Run supervised priming (SFT)
bash scripts/sft.sh

# Launch the full PPO training
bash scripts/train.sh
```

Be warned: while the fine-tuning is light, the full RL runs reported in the paper require a serious hardware budget, on the order of 4× A100-80GB GPUs.

8. Final Thoughts

“Cognitive Behaviors that Enable Self-Improving Reasoners” delivers a crucial message: the path to more capable AI may lie less in the brute force of scaling and more in the subtle art of pedagogy. It’s not about building bigger brains, but about instilling better intellectual habits.

For practitioners, the takeaway is immediately actionable: seed your training data with examples of well-structured reasoning, even if the answers are imperfect. The structure is the scaffold. For researchers, the paper is an invitation to build a deeper taxonomy of thought: to move beyond a few known heuristics and map the entire landscape of cognitive behaviors that separate mere calculation from true reasoning. Perhaps the next great intellectual habit is out there, waiting for us to find it.