Key Takeaways

- DeepSeek-V3 fuses a 671-billion-parameter Mixture-of-Experts (MoE) architecture with FP8 precision, delivering throughput that frankly embarrasses dense models like Llama and Qwen.

- A clever trick called Multi-Head Latent Attention (MLA) slashes the memory hog that is the KV-cache, with no perceptible hit to model quality.

- Advanced training optimizations, including device-limited routing and three distinct balance losses, helped wrestle the pre-training cost down to an estimated $5.6 million.

- The native FP8 beast needs eight NVIDIA H200 GPUs to run comfortably, but an INT4 AutoRound quantized version tames it for a single 8xH100 node.

- Getting this monster to dance takes just a few lines of `vllm.LLM` code, which I’ll share below. With speculative decoding, you can push past 200 tokens per second on commodity clusters.

Why I’m Excited About DeepSeek-V3

After 18 months spent in the trenches, benchmarking the endless parade of open-source Large Language Models (LLMs), it takes a lot to genuinely surprise me. But nothing has so thoroughly recalibrated my sense of what “big yet efficient” can mean quite like DeepSeek-V3. This model, despite being nearly an order of magnitude larger by parameter count than heavyweights like Llama-3-70B and Qwen-2-72B, generates responses faster. The magic, of course, is its Mixture-of-Experts (MoE) architecture, where the vast majority of its 671 billion parameters lie dormant during any single forward pass: a brutal but effective lesson in computational leverage. This article is my deep dive into what makes DeepSeek-V3 tick, how you can actually run it on hardware that doesn’t require a nation-state’s budget, and why I believe it’s more than just another point on the leaderboard.

Anatomy of a Modern MoE: The DeepSeek-V3 Approach

At its core, a Mixture-of-Experts (MoE) model is a lesson in specialization. It routes input tokens to a small subset of “expert” sub-networks, meaning only a fraction of the model’s total parameters are engaged for any given token. This translates to faster inference and lower computational costs than a dense model of comparable scale. DeepSeek-V3 builds on this foundation with a few rather elegant architectural tricks.

DeepSeekMoE: Shared, Tiny, and Routed Experts

Traditional top-k MoE routing sends each token to the k experts that show the highest affinity. DeepSeekMoE refines this with a multi-layered approach:

- Shared experts: These act as a repository for generalized knowledge, accessible to all tokens to prevent siloing of common-sense reasoning.

- Tiny experts: Implemented as low-rank adapters, these are designed to mop up the simple, high-frequency patterns in the data with minimal overhead.

- Device-limited routing: A critical optimization for massive deployments. Each token’s selected experts are constrained to reside on at most M GPUs. This slashes cross-node communication overhead from O(N) to O(M), where N is the total number of GPUs in the cluster.
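To make device-limited routing concrete, here is a minimal pure-Python sketch of how I read the idea: first keep the M devices that host the highest-affinity experts, then run an ordinary top-k over the experts on those devices only. The function name, the toy scores, and the expert-to-device layout are all my own assumptions for illustration, not DeepSeek's implementation.

```python
from collections import defaultdict


def device_limited_topk(scores, expert_to_device, k, max_devices):
    """Pick k experts for one token, using experts on at most max_devices devices.

    scores: affinity score per expert (list of floats).
    expert_to_device: device id per expert (list of ints).
    """
    # Step 1: rank devices by the best affinity score among their experts.
    best_on_device = defaultdict(lambda: float("-inf"))
    for expert, score in enumerate(scores):
        dev = expert_to_device[expert]
        best_on_device[dev] = max(best_on_device[dev], score)
    ranked = sorted(best_on_device, key=best_on_device.get, reverse=True)
    allowed = set(ranked[:max_devices])

    # Step 2: ordinary top-k, restricted to experts on the allowed devices.
    candidates = [e for e in range(len(scores)) if expert_to_device[e] in allowed]
    return sorted(candidates, key=lambda e: scores[e], reverse=True)[:k]


# Hypothetical layout: 8 experts spread over 4 devices, 2 experts per device.
scores = [0.9, 0.1, 0.8, 0.2, 0.7, 0.6, 0.05, 0.3]
expert_to_device = [0, 0, 1, 1, 2, 2, 3, 3]

chosen = device_limited_topk(scores, expert_to_device, k=3, max_devices=2)
devices_used = {expert_to_device[e] for e in chosen}
print(chosen, devices_used)
```

An unconstrained top-3 on these scores would pick experts 0, 2, and 4 and touch three devices. The constrained version gives up expert 4 for a weaker expert on an already-selected device, which is exactly the trade the technique makes: slightly worse affinity for bounded communication.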

To prevent hotspots and maintain high utilization, the DeepSeek-V3 training regimen employs three separate auxiliary balance losses: one for experts, one for devices, and one for communication. It also uses device-level token dropping if a GPU’s processing queue gets overwhelmed, a pragmatic solution for system stability.
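For intuition about what a balance loss actually penalizes, here is a sketch of one commonly used expert-level form (the Switch-Transformer-style product of load fraction and mean router probability). This is an assumption on my part: the exact formulas, coefficients, and the device and communication variants used in DeepSeek's training are not reproduced here.

```python
def expert_balance_loss(assignments, probs, num_experts, k):
    """Toy expert-level load-balancing loss.

    assignments: per token, the list of k expert ids it was routed to.
    probs: per token, the router probabilities over all experts.
    """
    num_tokens = len(assignments)
    loss = 0.0
    for e in range(num_experts):
        # f_e: share of routing slots sent to expert e, scaled so that a
        # perfectly uniform load gives f_e == 1.
        count = sum(e in chosen for chosen in assignments)
        f_e = count * num_experts / (k * num_tokens)
        # p_e: mean router probability assigned to expert e.
        p_e = sum(p[e] for p in probs) / num_tokens
        loss += f_e * p_e
    return loss


# Balanced case: 4 tokens, 4 experts, each token goes to a different expert.
balanced = expert_balance_loss(
    assignments=[[0], [1], [2], [3]],
    probs=[[0.25] * 4] * 4,
    num_experts=4,
    k=1,
)

# Collapsed case: every token piles onto expert 0.
collapsed = expert_balance_loss(
    assignments=[[0]] * 4,
    probs=[[0.7, 0.1, 0.1, 0.1]] * 4,
    num_experts=4,
    k=1,
)
print(balanced, collapsed)
```

The collapsed routing scores noticeably higher than the balanced one, so adding a small multiple of this term to the training loss nudges the router away from hotspots.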

Multi-Head Latent Attention (MLA)

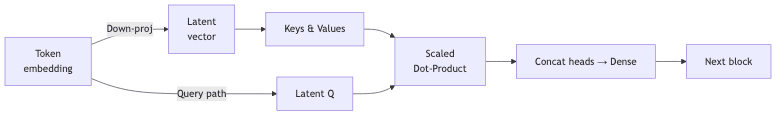

The KV (Key-Value) cache is the perennial memory hog in transformer models. DeepSeek-V3 attacks this with Multi-Head Latent Attention (MLA). This deceptively simple idea compresses the token embeddings into a lower-dimensional latent space before generating the queries, keys, and values. During inference, only these compressed latent tensors are stored in the KV cache. This crushes the memory footprint by a factor roughly proportional to the compression ratio c, all while maintaining accuracy comparable to classic Multi-Head Attention.

Here’s a simplified view of the MLA process:
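As a rough illustration, the toy sketch below caches only a compressed latent vector per token and rebuilds keys and values from it on demand. It is deliberately simplified: the sizes are hypothetical, there is a single head, and I leave out positional encoding and the query path entirely, so treat it as the shape of the idea and not DeepSeek's actual layer.

```python
import random


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
d_model, d_latent, d_head = 16, 4, 8  # hypothetical toy sizes

W_down = rand_matrix(d_latent, d_model, rng)  # hidden state -> latent
W_up_k = rand_matrix(d_head, d_latent, rng)   # latent -> key
W_up_v = rand_matrix(d_head, d_latent, rng)   # latent -> value

latent_cache = []  # the only per-token state kept between decoding steps


def step(hidden):
    """Process one new token: cache its latent, rebuild all keys and values."""
    latent_cache.append(matvec(W_down, hidden))
    keys = [matvec(W_up_k, c) for c in latent_cache]
    values = [matvec(W_up_v, c) for c in latent_cache]
    return keys, values


for _ in range(3):
    keys, values = step([rng.uniform(-1, 1) for _ in range(d_model)])

cached = sum(len(c) for c in latent_cache)                 # numbers stored
full = sum(len(k) + len(v) for k, v in zip(keys, values))  # numbers a plain KV cache would store
print(f"cached {cached} values instead of {full} ({full // cached}x smaller)")
```

With these toy dimensions the cache holds 4 numbers per token where a plain key-value cache would hold 16. The price is the extra up-projection work at attention time.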

Multi-Token Prediction (MTP)

Another piece of pragmatic engineering employed here is Multi-Token Prediction (MTP). Rather than myopically predicting only the next token, DeepSeek-V3 is trained to jointly predict d future tokens at each step, while rigorously preserving causality. The loss function is a simple average of the cross-entropy losses for each of the d predictions:

$$\mathcal{L}_{\text{MTP}} = \frac{1}{d} \sum_{k=1}^{d} \mathrm{CE}\left(\hat{y}_{t+k}^{(k)},\, y_{t+k}\right)$$

Where $\hat{y}_{t+k}^{(k)}$ is the model’s $k$-th prediction, made at time $t$ for the token at time $t+k$, and $y_{t+k}$ is the ground truth. While these specialized MTP blocks are disabled during standard inference to save compute, they aren’t dead weight. They can be repurposed for advanced techniques like speculative decoding, where a smaller, faster model (or these MTP heads) generates a draft sequence that is then verified in parallel by the main model, often yielding dramatic speedups.
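The draft-then-verify loop is easier to see in code. Below is a toy, greedy version with integer "tokens" and two stand-in functions of my own invention (`draft_next` and `target_next`); a real system verifies all draft positions in one batched forward pass and usually uses a probabilistic acceptance rule, which this sketch does not attempt.

```python
def speculative_step(prefix, draft_next, target_next, num_draft):
    """One round of greedy speculative decoding.

    draft_next / target_next map a token list to the next token.
    Returns the tokens accepted this round (always at least one).
    """
    # 1. The cheap drafter proposes num_draft tokens autoregressively.
    proposal = []
    for _ in range(num_draft):
        proposal.append(draft_next(prefix + proposal))

    # 2. The main model checks each position in order.
    accepted = []
    for tok in proposal:
        expected = target_next(prefix + accepted)
        if tok != expected:
            accepted.append(expected)  # fix the first mismatch, then stop
            return accepted
        accepted.append(tok)

    # 3. Every draft matched: verification also yields one bonus token.
    accepted.append(target_next(prefix + accepted))
    return accepted


def target_next(seq):
    """Toy 'main model': simply counts upward."""
    return seq[-1] + 1


def draft_next(seq):
    """Toy drafter: agrees with the target except on multiples of 4."""
    nxt = seq[-1] + 1
    return nxt if nxt % 4 else 0


tokens = [0]
rounds = 0
while len(tokens) < 13:
    tokens += speculative_step(tokens, draft_next, target_next, num_draft=4)
    rounds += 1
print(tokens, rounds)
```

The output is identical to what the main model would have produced alone, but the 12 new tokens arrive in 3 verification rounds where plain decoding would need 12 sequential steps. The real-world speedup depends on how often the drafter is right and how cheap it is to run.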

Hardware Considerations: Crunching the Numbers

The key to making a giga-scale model even remotely tractable is brutal quantization. DeepSeek-V3 leverages FP8 (8-bit floating point) precision, which reduces the memory footprint of each parameter to a single byte. This gives us a simple rule of thumb: the required memory in gigabytes is roughly equal to the number of parameters in billions.

For DeepSeek-V3’s 671B parameters, that means we need about 671 GB for the FP8 weights alone. Here’s how the math breaks down across the usual high-end suspects:

| GPU Class | VRAM / GPU | GPUs for Full Model (FP8 unless noted) | Notes |
|---|---|---|---|
| AMD MI300X | 192 GB | 4 (just) | Potentially cost-effective, but the software ecosystem remains a frontier. |
| NVIDIA H100 (80GB) | 80 GB | 9 (realistically 12-16 for overhead) | Typically requires two 8xH100 nodes, incurring inter-node latency. |
| NVIDIA H200 (141GB) | 141 GB | 5 (realistically 8) | The current sweet spot: fits comfortably on a single 8xH200 node. |
| NVIDIA H100 (INT4 quant) | 80 GB | 5 (realistically 8) | INT4 weights come to roughly 336 GB, so a quantized model (e.g., using AutoRound) fits on a single 8xH100 node. |
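The minimum GPU counts follow directly from the one-byte-per-parameter rule of thumb. Here is a small helper (my own, not from any library) that reproduces them; it counts weights only, so it ignores the KV cache, activations, and framework overhead, which is why the realistic numbers are higher.

```python
import math


def min_gpus(params_billion, bytes_per_param, vram_gb):
    """Smallest GPU count whose combined VRAM can hold the weights alone."""
    weights_gb = params_billion * bytes_per_param
    return math.ceil(weights_gb / vram_gb)


fp8_counts = {
    name: min_gpus(671, 1.0, vram)
    for name, vram in [("MI300X", 192), ("H100", 80), ("H200", 141)]
}
int4_h100 = min_gpus(671, 0.5, 80)  # INT4 is half a byte per parameter

print(fp8_counts)
print("INT4 on H100:", int4_h100)
```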

A quick note on economics: On RunPod, an 8×H200 instance runs about $32 h⁻¹ (as of Jan 2025). Buying the rig outright ($260k) only pencils out after ~1 year of running it at near-full tilt.
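The break-even claim is simple arithmetic, sketched below with the two prices quoted above. The `utilization` parameter is my own addition, and the calculation deliberately ignores power, hosting, staffing, and depreciation, all of which push the real break-even point later.

```python
def break_even_days(purchase_usd, rental_usd_per_hour, utilization=1.0):
    """Days of use after which buying costs less than renting."""
    billable_hours = purchase_usd / rental_usd_per_hour
    return billable_hours / utilization / 24


full_tilt = round(break_even_days(260_000, 32))
half_load = round(break_even_days(260_000, 32, utilization=0.5))
print(full_tilt, half_load)
```

At full utilization the rig pays for itself in a little under a year; at 50% utilization it takes nearly two.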

Hands-On: Running DeepSeek-V3 with vLLM

Getting this thing to run is, against all odds, not a Herculean task, thanks to excellent libraries like vLLM. Below is the minimal Python code for an 8xH200 server, along with variations for a dual-node H100 setup and the leaner INT4 quantized model.

You can find the model on Hugging Face: deepseek-ai/DeepSeek-V3.

```python
# Single node, 8xH200 GPUs (FP8 model)
from vllm import LLM, SamplingParams

# Example chat conversation (a list of role/content messages)
messages = [
    {"role": "system", "content": "You are a helpful and creative assistant."},
    {"role": "user", "content": "Explain the concept of Mixture-of-Experts in LLMs to a non-technical person."},
]

# Initialize the LLM with vLLM
llm = LLM(
    model="deepseek-ai/DeepSeek-V3",  # Or the specific Hugging Face model ID
    max_model_len=8192,               # Max sequence length
    tensor_parallel_size=8,           # Parallelism across 8 GPUs on the same node
)

# Generate text
outputs = llm.chat(messages, sampling_params=SamplingParams(temperature=0.7, max_tokens=512))
print(outputs[0].outputs[0].text)
```

For a cluster with two nodes, each with 8 H100 GPUs (16 total), you add pipeline parallelism to the mix:

```python
# Two nodes, 8xH100 GPUs each (FP8 model)
# Assumes vLLM is installed on both nodes and a Ray cluster spanning them is already running
from vllm import LLM, SamplingParams

llm = LLM(
    model="deepseek-ai/DeepSeek-V3",
    max_model_len=8192,
    tensor_parallel_size=8,    # Intra-node tensor parallelism
    pipeline_parallel_size=2,  # Inter-node pipeline parallelism
)

# ... rest of the chat/generation code
```

If you’re operating on a tighter budget, an INT4 quantized version is your best bet. Here’s an example using a GPTQ model from the OPEA organization on Hugging Face:

```python
# Single node, 8xH100 GPUs (INT4 quantized model)
from vllm import LLM, SamplingParams

llm = LLM(
    model="OPEA/DeepSeek-V3-int4-sym-gptq-inc",  # Example path to a GPTQ quantized model
    max_model_len=8192,
    tensor_parallel_size=8,  # Adjust based on how the model was quantized and sharded
)

# ... rest of the chat/generation code
```

The results are, frankly, compelling:

- FP8 on 8xH200: I consistently see around 130 tokens per second with a sampling temperature of 0.7.

- INT4 on 8xH100: This setup can breach 200 tokens per second, with output quality that remains startlingly close to the FP8 version for most tasks.

Performance Enhancement Tips

To wring every last drop of performance from your deployment, consider these moves:

- KV-Cache Offloading: MLA helps, but it isn’t magic. For truly absurd sequence lengths or high batch sizes, swapping cache blocks out of GPU memory becomes your escape hatch. In vLLM, the `--swap-space 32` flag reserves 32 GiB of CPU swap space per GPU for this. Note that this is host RAM, not NVMe.

- Speculative Decoding: This is where things get interesting. Use the INT4 quantized DeepSeek-V3 as the “draft” model and the FP8 version as the “verifier.” I’ve seen this brute-force-and-verify approach deliver a 1.7x speedup in practice. It’s not free, but it’s effective.

- Sequence Parallelism: When you’re pushing context windows into the stratosphere (e.g., well past 16k tokens), sequence parallelism helps distribute the activation memory more effectively across your silicon. Don’t confuse it with vLLM’s `--max-parallel-loading-workers 2`, which only limits how many workers load model weights at once during startup.

The Economics of Giga-Scale Pre-training

Let’s talk about the price of admission. DeepSeek-AI reports that training DeepSeek-V3 end to end consumed approximately 2.788 million GPU-hours. The breakdown is telling:

- Pre-training (14.8T tokens, FP8): ~2.664 million H800 GPU-hours. (That works out to about 180,000 GPU-hours per trillion tokens, or roughly 3.7 days on a 2048-GPU cluster of NVIDIA H800s.)

- Long-context extension: ~119,000 H800 GPU-hours.

- Post-training alignment: ~5,000 H800 GPU-hours.

With a conservative cloud cost of $2 per H800 GPU-hour, the total training bill lands around $5.6 million. For a 671-billion parameter model, that figure is surprisingly low. It’s a testament to the brutal efficiency of their training stack, particularly the sophisticated balance-loss mechanisms and dynamic token-dropping that squeeze every last FLOP out of the hardware.
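The headline number is easy to re-derive. The snippet below uses the per-stage GPU-hour figures from DeepSeek-AI's technical report and the same assumed $2 rental rate; it covers rented compute for the final run only, not research, ablations, data, or salaries.

```python
# GPU-hours per training stage, as reported by DeepSeek-AI (H800 hours)
gpu_hours = {
    "pre-training": 2_664_000,
    "long-context extension": 119_000,
    "post-training": 5_000,
}
usd_per_gpu_hour = 2.0  # assumed rental price

total_hours = sum(gpu_hours.values())
total_cost = total_hours * usd_per_gpu_hour

for stage, hours in gpu_hours.items():
    print(f"{stage:>24}: {hours:>9,} h  ({hours / total_hours:.1%})")
print(f"{'total':>24}: {total_hours:>9,} h  = ${total_cost / 1e6:.3f}M")
```

Pre-training accounts for more than 95% of the bill; the context extension and post-training stages are close to rounding errors by comparison.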

Final Thoughts: Embracing the MoE Era

DeepSeek-V3 is an object lesson in architectural leverage. With the right hardware, or a well-managed cloud budget, it’s now possible for smaller shops to experiment with an FP8 behemoth, quantize it for even wider access, or cannibalize its components like the MTP heads for advanced inference strategies.

The real lesson here is the triumph of the MoE paradigm: you pay for intelligence only when you need it. This efficiency is what will unlock the next wave of truly capable, deployable models.

Happy hacking. If you experiment with DeepSeek-V3 or have your own war stories from running giga-scale MoEs, connect with me on X (Twitter) or LinkedIn.