Introduction

Large Language Models are gods of silicon, and they demand a sacrifice: GPU memory. An unending, voracious appetite for VRAM is the price of admission to this new era. My own crusade over the last year has been to find a way to starve these gods without killing them. The art of this starvation is weight-only quantization: a technique for shrinking models by compressing their parameters while leaving their activations and hidden states untouched.

I’ve tested nearly every public algorithm to cross my screen: the classic, calibration-heavy GPTQ; the beautifully simple, calibration-free bitsandbytes; and the computationally costly, data-driven AQLM. My current weapon of choice, the one that strikes the best compromise between pragmatism and performance, is Half-Quadratic Quantization (HQQ). It delivers near-GPTQ accuracy, runs in minutes, and asks for no calibration data. It just works.

This article is about pushing HQQ to its logical, brutal extreme. It seeks answers to two questions:

- Can Llama 3 survive being shackled to a 1-bit, binary existence?

- Can the HQQ+ recipe (quantize first, then graft on a LoRA adapter) breathe enough life back into the compressed model for it to be useful?

Why this matters: Binary and 2-bit quantization are the final frontier of model compression. If we can make them work, truly massive models become viable on consumer hardware. The speed and simplicity of HQQ make it the ideal tool for this kind of rapid, aggressive experimentation.

All work was done on a single NVIDIA L4 (24 GB) via Google Colab. The gods of silicon can, it seems, be tamed on a budget.

1 | The Brutal Calculus of Quantization

1.1 Weight-Only Quantization

The core idea is a violent act of compression. Given a weight matrix $W \in \mathbb{R}^{m \times n}$ of floating-point numbers and a target precision of $b$ bits, we seek an integer matrix $W_q \in \{0, 1, \dots, 2^b - 1\}^{m \times n}$ and scale/offset parameters $s$ and $z$ such that our new, compressed weight matrix

$$\hat{W} = s\,(W_q - z)$$

is as close to the original $W$ as possible.

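The central philosophical divide is how you measure “close.” Calibration-based methods like GPTQ, AWQ, and AQLM run a small dataset through the model and minimize the error on each layer’s outputs. Data-free methods like bitsandbytes and HQQ are purists; they never see data and work from the weights alone. bitsandbytes rounds them onto a fixed grid, while HQQ explicitly minimizes the reconstruction error of the weights themselves.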

1.2 Half-Quadratic Quantization (HQQ)

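HQQ frames this as a clean optimization problem. Keeping the scale $s$ fixed, it searches for the zero-point $z$ of a $b$-bit affine grid that minimizes a sparsity-promoting $\ell_p$ error ($p < 1$) between the weights and their dequantized reconstruction:

$$\min_{z}\; \big\| W - s\,(W_q - z) \big\|_p^p, \qquad W_q = \mathrm{clip}\big(\mathrm{round}(W/s + z),\; 0,\; 2^b - 1\big)$$

It solves this with a half-quadratic alternating optimization, an elegant mathematical dance: it introduces an auxiliary error variable $W_e$ and alternates between a generalized soft-thresholding update for $W_e$ and a closed-form update for $z$. It converges in a few iterations, runs entirely in half-precision, and handles outliers gracefully, because an $\ell_{p<1}$ norm penalizes a few large errors far less than a squared loss would.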
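To make the alternating optimization concrete, here is a minimal NumPy sketch of an HQQ-style zero-point solver. It is an illustration of the technique, not the `hqq` library's code: the helper names are hypothetical, each row of `W` is treated as one group, the scale comes from min-max initialization and then stays fixed, and the hyperparameters (`p=0.7`, `beta=10`, `kappa=1.01`, 20 iterations) are assumed values.

```python
import numpy as np

def quantize(W, s, z, nbits):
    # Affine quantization: W_q = clip(round(W / s + z), 0, 2^b - 1)
    return np.clip(np.round(W / s + z), 0, 2**nbits - 1)

def dequantize(W_q, s, z):
    # Dequantization: W_hat = s * (W_q - z)
    return s * (W_q - z)

def shrink_lp(x, beta, p):
    # Generalized soft-thresholding: approximate proximal step for the l_p (p < 1) penalty
    return np.sign(x) * np.maximum(np.abs(x) - np.power(np.abs(x) + 1e-8, p - 1) / beta, 0.0)

def hqq_optimize_zero(W, nbits=2, p=0.7, beta=10.0, kappa=1.01, iters=20):
    """Hypothetical helper: HQQ-style zero-point search, one group per row of W."""
    qmax = 2**nbits - 1
    w_min = W.min(axis=1, keepdims=True)
    w_max = W.max(axis=1, keepdims=True)
    s = np.maximum(w_max - w_min, 1e-8) / qmax   # scale is fixed after initialization
    z = -w_min / s                               # min-max init: w_min maps to level 0

    def mean_abs_error(z_):
        return np.abs(W - dequantize(quantize(W, s, z_, nbits), s, z_)).mean()

    best_z, best_err = z.copy(), mean_abs_error(z)
    for _ in range(iters):
        W_q = quantize(W, s, z, nbits)
        # W_e sub-problem: shrink the current residual toward sparsity
        W_e = shrink_lp(W - dequantize(W_q, s, z), beta, p)
        # z sub-problem: closed-form least-squares update, averaged over each group
        z = np.mean(W_q - (W - W_e) / s, axis=1, keepdims=True)
        beta *= kappa                            # gradually tighten the quadratic coupling
        err = mean_abs_error(z)
        if err >= best_err:                      # stop once the error stops improving
            break
        best_z, best_err = z.copy(), err
    return quantize(W, s, best_z, nbits), s, best_z

rng = np.random.default_rng(0)
W = rng.standard_normal((8, 64))                 # 8 groups of 64 synthetic weights
W_q, s, z = hqq_optimize_zero(W, nbits=1)
print(np.unique(W_q))                            # at 1 bit only levels 0 and 1 can appear
```

Keeping the best zero-point seen so far means the sketch never returns something worse than plain min-max rounding; the real library adds further details (axis handling, dtypes, batched GPU execution) that are deliberately left out here.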

The result? Llama 3 70B quantizes to 1-bit in about 22 minutes on a single L4. That’s a huge improvement over GPTQ, which has to push calibration data through the model layer by layer.

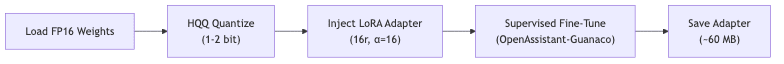

1.3 HQQ+: Resurrection by Adapter

Pushing a model to 1-bit or 2-bit inevitably causes damage. Nuance is lost. The model’s soul degrades. The HQQ+ recipe is the act of resurrection: we fine-tune a lightweight LoRA adapter on top of the frozen, quantized backbone. This is the same trick as QLoRA, but applied to a far more radically compressed base.

Why this works: The quantized backbone provides a compressed, low-fidelity map of the world. The LoRA adapter then learns to correct its errors and restore the lost expressivity, adding only a few dozen megabytes of new parameters. It’s a graft of new life onto a compressed skeleton.
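The adapter math is small enough to write out. The NumPy sketch below (helper names are hypothetical) shows a LoRA-augmented layer, $y = \hat{W}x + \frac{\alpha}{r}BAx$, where the dequantized $\hat{W}$ stays frozen and only $A$ and $B$ train, plus a parameter count. The Llama 3 8B projection shapes are assumptions taken from the public model config (hidden size 4096, FFN size 14336, 8 key/value heads of dimension 128, 32 layers), with `r=16` on the same seven projections used in the training script.

```python
import numpy as np

def lora_forward(x, W_hat, A, B, alpha, r):
    # Row-vector convention: frozen quantized path plus scaled low-rank correction
    return x @ W_hat.T + (alpha / r) * (x @ A.T) @ B.T

def lora_param_count(shapes, r):
    # Each (out, in) linear layer gains A: (r, in) and B: (out, r)
    return sum(r * (d_in + d_out) for d_out, d_in in shapes)

# Assumed Llama 3 8B projection shapes (out_features, in_features) per decoder layer
LLAMA3_8B_PROJ = {
    "q_proj": (4096, 4096), "k_proj": (1024, 4096), "v_proj": (1024, 4096),
    "o_proj": (4096, 4096), "gate_proj": (14336, 4096),
    "up_proj": (14336, 4096), "down_proj": (4096, 14336),
}
N_LAYERS = 32

n_params = N_LAYERS * lora_param_count(LLAMA3_8B_PROJ.values(), r=16)
print(f"{n_params:,} trainable parameters, ~{n_params * 2 / 1e6:.0f} MB in 16-bit")

# With B initialized to zero, the adapter is a no-op before training starts
rng = np.random.default_rng(0)
x = rng.standard_normal((2, 64))
W_hat = rng.standard_normal((32, 64))
A = rng.standard_normal((4, 64)) * 0.01
B = np.zeros((32, 4))
assert np.allclose(lora_forward(x, W_hat, A, B, alpha=4, r=4), x @ W_hat.T)
```

Under these assumptions the adapter holds about 41.9 million parameters, roughly 84 MB in 16-bit or twice that in fp32; the file you actually ship depends on the rank, the targeted modules, and the dtype it is saved in.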

2 | The Code

Quantizing & Loading

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer, HqqConfig

model_id = "meta-llama/Meta-Llama-3-8B"

# 1-bit weights, one scale/zero-point pair per 32 weights, metadata kept in 16-bit
quant_cfg = HqqConfig(nbits=1, group_size=32,
                      quant_zero=False, quant_scale=False, axis=0)

tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=True)
tokenizer.pad_token = tokenizer.eos_token  # Llama 3 ships without a pad token

# Weights are quantized on the fly as the checkpoint is loaded onto the GPU
model = AutoModelForCausalLM.from_pretrained(
    model_id,
    device_map="cuda",
    torch_dtype=torch.bfloat16,
    quantization_config=quant_cfg,
)
```

Preparing for LoRA Fine-Tuning

```python
from peft import LoraConfig, prepare_model_for_kbit_training
from trl import SFTTrainer, SFTConfig
from datasets import load_dataset

# LoRA injected into every attention and FFN projection
peft_cfg = LoraConfig(r=16, lora_alpha=16, lora_dropout=0.05,
                      task_type="CAUSAL_LM",
                      target_modules=["q_proj", "k_proj", "v_proj", "o_proj",
                                      "gate_proj", "up_proj", "down_proj"])

# Freezes the quantized backbone and enables gradient checkpointing
model = prepare_model_for_kbit_training(model)

ds = load_dataset("timdettmers/openassistant-guanaco")

def add_eos(row):
    # Append the end-of-text token (<|end_of_text|> for base Llama 3) so the model learns to stop
    row["text"] = row["text"] + tokenizer.eos_token
    return row

ds = ds.map(add_eos, num_proc=8)

train_cfg = SFTConfig(
    output_dir="./llama3_8b_hqq1bit_adapter",
    dataset_text_field="text",
    per_device_train_batch_size=8,
    gradient_accumulation_steps=4,   # effective batch size of 32
    num_train_epochs=3,
    eval_strategy="steps",           # without this, eval_steps is ignored
    eval_steps=100,
    learning_rate=1e-4,
    optim="paged_adamw_8bit",
)

trainer = SFTTrainer(model=model,
                     tokenizer=tokenizer,
                     train_dataset=ds["train"],
                     eval_dataset=ds["test"],
                     peft_config=peft_cfg,
                     args=train_cfg)

trainer.train()
```

3 | Results & Dissection

3.1 The Flow

- Velocity: 1-bit quantization of Llama 3 8B took a mere 1 minute 7 seconds. The 70B model finished in 22 minutes 21 seconds.

- The Grind: The 8B models trained in about 8 hours. The 70B variant, needing heavy gradient accumulation, completed a single epoch in 18 hours.

3.2 Perplexity & Quality

| Model | Validation PPL | Sample Prompt Output |
|---|---|---|
| 8B 1-bit gs=64 | 124 ± 3 | Digital gibberish (“dirdirdird…”) |
| 8B 1-bit gs=32 | 58 | Short, repetitive English (“I’m sorry for you…”) |
| 8B 2-bit gs=64 | 11 | Fluent recipe suggestions |
| 8B 2-bit gs=32 | 9 | Fluent, relevant; on par with 4-bit QLoRA |
| 70B 1-bit gs=64 | 43 | Syntactically valid but semantically hollow |
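For readers calibrating the table: validation perplexity is the exponential of the mean per-token negative log-likelihood, so a PPL of 58 means the model is, on average, as uncertain as a uniform guess among 58 tokens. A minimal sketch with hypothetical NLL values:

```python
import math

def perplexity(token_nlls):
    # PPL = exp(mean negative log-likelihood per token, in nats)
    return math.exp(sum(token_nlls) / len(token_nlls))

# A model that spreads probability uniformly over 58 candidates at every step
uniform_58 = [math.log(58)] * 100
print(round(perplexity(uniform_58), 6))  # 58.0
```

With the Hugging Face `Trainer`, the same quantity is usually obtained as `math.exp(metrics["eval_loss"])`, since the evaluation loss is already the mean token-level cross-entropy.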

Observation 1 – Binary is a Brutal Abstraction. The act of collapsing billions of parameters into a stark choice between 1 and 0 is an act of violence against nuance. For the 8B model, the language signal wasn’t just degraded; it was shredded. The LoRA adapter could only partially piece it back together. What remained was the digital equivalent of speaking in tongues.

Observation 2 – 2-bit is the Pragmatic Frontier. A 2-bit Llama 3 8B, resurrected with a LoRA adapter, produces answers nearly indistinguishable from its full-precision parent. And it does so while fitting into 5-6 GB of VRAM, small enough for a 12 GB RTX 3060. This is the sweet spot.

Observation 3 – The Price of Precision. Halving the group_size from 64 to 32 adds about 0.4 GB to the model’s weight footprint but buys a dramatic drop in perplexity at 1-bit (124 → 58) and a smaller but real gain at 2-bit (11 → 9). Precision has a cost, but it’s often a price worth paying.
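The group-size trade-off in Observation 3 can be checked on the back of an envelope. This sketch assumes that every group stores a 16-bit scale and a 16-bit zero-point (consistent with `quant_zero=False, quant_scale=False`), that only the seven projection matrices are quantized, and that the embedding and `lm_head` stay in bf16; the Llama 3 8B dimensions are taken from the public config. It counts weights only, not activations, KV-cache, or CUDA overhead.

```python
# Assumed Llama 3 8B dimensions (public config values)
HIDDEN, FFN, LAYERS, VOCAB = 4096, 14336, 32, 128256
KV_DIM = 8 * 128  # 8 key/value heads x head_dim 128 (grouped-query attention)

def quantized_linear_params():
    # q, o: hidden x hidden; k, v: hidden x KV_DIM; gate, up, down: hidden x FFN
    per_layer = 2 * HIDDEN * HIDDEN + 2 * HIDDEN * KV_DIM + 3 * HIDDEN * FFN
    return LAYERS * per_layer

def weight_bytes(nbits, group_size, meta_bits=32):
    # 16-bit scale + 16-bit zero per group => meta_bits / group_size extra bits per weight
    bits_per_weight = nbits + meta_bits / group_size
    quantized = quantized_linear_params() * bits_per_weight / 8
    unquantized = 2 * VOCAB * HIDDEN * 2  # embed_tokens + lm_head kept in bf16 (2 bytes)
    return quantized + unquantized

for nbits in (1, 2):
    for gs in (64, 32):
        print(f"{nbits}-bit, group_size={gs}: {weight_bytes(nbits, gs) / 1e9:.2f} GB")
print(f"group_size 64 -> 32 costs {(weight_bytes(2, 32) - weight_bytes(2, 64)) / 1e9:.2f} GB")
```

Under these assumptions the switch from 64 to 32 costs about 0.44 GB, in line with the figure above, and a 2-bit model lands at roughly 4.3 to 4.7 GB of weights, which leaves room for activations and cache inside the observed 5-6 GB.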

3.3 Why 1-bit Fails

In binary quantization, a single scale and offset pair (one per group of group_size weights) must stretch an entire distribution of weights across just two possible values: with $W_q \in \{0, 1\}$, every dequantized weight $s\,(W_q - z)$ is either $-sz$ or $s\,(1 - z)$. Every weight in the group is snapped to one of those two levels, destroying the fine-grained relationships that encode meaning. Larger models like the 70B can absorb some of this damage through sheer parameter redundancy. Smaller models simply collapse.

This is not a failure of HQQ, but a fundamental limit of the abstraction. The only way around it, as AQLM demonstrates, is to learn the quantizers with back-propagation, a process that costs days of GPU time. For rapid iteration, HQQ’s elegant failure is more informative.
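One way to see that the limit lies in the abstraction rather than in HQQ: even the best possible set of $2^b$ levels, found with Lloyd's algorithm (1-D k-means), represents a bell-shaped distribution poorly with only two levels. The sketch uses synthetic standard-normal samples as a stand-in for a weight group, which is an assumption; real weight distributions are heavier-tailed. For a Gaussian, the optimal 1-bit quantizer leaves $1 - 2/\pi \approx 36\%$ of the variance as error, versus roughly 12% for the optimal 2-bit quantizer.

```python
import numpy as np

def lloyd_max(x, nbits, iters=100):
    """Best 2^nbits reconstruction levels for samples x (1-D k-means); returns levels and relative MSE."""
    k = 2**nbits
    levels = np.quantile(x, (np.arange(k) + 0.5) / k)              # spread initial levels over the data
    for _ in range(iters):
        idx = np.abs(x[:, None] - levels[None, :]).argmin(axis=1)  # assign each sample to nearest level
        levels = np.array([x[idx == j].mean() for j in range(k)])   # move each level to its centroid
    idx = np.abs(x[:, None] - levels[None, :]).argmin(axis=1)
    rel_mse = np.mean((x - levels[idx]) ** 2) / np.var(x)
    return levels, rel_mse

rng = np.random.default_rng(0)
w = rng.standard_normal(200_000)  # synthetic stand-in for a weight distribution
for b in (1, 2):
    levels, rel_mse = lloyd_max(w, b)
    print(f"{b}-bit: levels={np.round(levels, 3)}, error = {rel_mse:.1%} of variance")
```

Because HQQ's affine grid with two levels can place them anywhere, the 1-bit row is an upper bound on what any per-group scale and offset could achieve for this distribution.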

4 | A Practical Playbook

- Know Your Battleground. For generative tasks, 2-bit is the pragmatic minimum where coherence lives. Reserve 1-bit for academic curiosity or tasks like classification where a model’s soul isn’t required.

- Pay for Precision. A `group_size` of 32 consistently delivers the best quality. Use 64 only if every last megabyte is sacred.

- Chain the Beast. Freeze the quantized backbone with `prepare_model_for_kbit_training`. Force the gradients to flow only where they’re needed: the LoRA layers.

- Mind the Cache. Quantizing weights does not shrink the KV-cache, which grows linearly with context length and batch size. An 8K context window will still consume a substantial amount of GPU memory.

- Travel Light. Once trained, the LoRA adapter is all you need. It’s a tiny file (around 60 MB), perfect for shipping.
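To put a number on the cache warning: every token in the context stores one key and one value vector per layer, so the cache grows linearly with context length and batch size. A minimal sketch, assuming Llama 3 8B's published attention config (32 layers, 8 key/value heads via grouped-query attention, head dimension 128) and a 16-bit cache:

```python
def kv_cache_bytes(seq_len, batch=1, layers=32, kv_heads=8, head_dim=128, bytes_per_elem=2):
    # 2 tensors (K and V) per layer, each of shape [batch, kv_heads, seq_len, head_dim]
    return 2 * layers * kv_heads * head_dim * bytes_per_elem * seq_len * batch

print(kv_cache_bytes(8192) / 2**30)           # 1.0 GiB for one 8K-token sequence
print(kv_cache_bytes(8192, batch=8) / 2**30)  # 8.0 GiB for a batch of eight
```

So while 1-bit or 2-bit weights fit in a few gigabytes, a batch of long-context requests can cost as much again in cache.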

5 | Foundational Texts

- HQQ: Half-Quadratic Quantization of Large Machine Learning Models (Badri & Shaji, 2023)

- QLoRA: Efficient Finetuning of Quantized LLMs (Dettmers et al., 2023)

- AQLM: Extreme Compression of Large Language Models via Additive Quantization (Egiazarian et al., 2024)

- Hugging Face `transformers`, `peft`, and `bitsandbytes` documentation.

- Meta AI, “Introducing Meta Llama 3” release announcement (April 2024).

Conclusion

HQQ offers a rare and powerful combination: velocity, simplicity, and open availability. My experiments confirm that:

- A 2-bit HQQ model with a LoRA adapter can shrink Llama 3 8B to a ~5 GB footprint with almost no perceptible loss in quality. This is the new baseline for commodity hardware.

- 1-bit remains a research frontier: a fascinating glimpse into the limits of compression, but not yet ready for coherent conversation.

- A single, mid-range GPU can now quantize, fine-tune, and serve a genuinely useful LLM, end-to-end, in under a day for the cost of a few Colab credits.

The goal of putting a capable assistant on every machine is no longer a distant dream. Binary dreams may one day come true, but for now, 2-bit is the language they speak.

Questions, corrections, or ideas? Find me on X (@ra_kalra).