Key Takeaways

- AGORABENCH provides the first principled benchmark for measuring a language model’s ability to generate training data, moving beyond the chaos of incomparable, one-off studies.

- A new metric, Performance Gap Recovered (PGR), finally quantifies what matters: how much of the performance gap between a base model and a professionally tuned one can be closed using only synthetic data.

- The best solver is rarely the best teacher; a model’s prowess at generating high-quality data shows almost no correlation with its raw problem-solving ability on leaderboards.

- The brutal calculus of cost: a flood of cheaper data from a mid-tier model can, and often does, outperform a trickle of the premium stuff.

- The full machinery-code, checkpoints, and prompts-is open-sourced in the Data‑Agora GitHub repo for practitioners to replicate, challenge, or extend.

1 Introduction

We have come to treat Large Language Models as oracles-we pose questions, they dispense answers. This view, while useful, is profoundly incomplete. An equally potent, and perhaps more strategic, function of these models is as data factories: tireless engines for creating novel training examples. Synthetic data is the engine of modern instruction-tuning and a potential solution to the ever-present challenges of data privacy and scarcity.

If data is the new oil, synthetic data is the refinery; the formula for transmutation.

But which model do you trust to run your factory? For too long, the field has been a Wild West of one-off experiments. Papers have touted generators using different prompts, different dataset sizes, and different evaluation schemes, rendering their results fundamentally incomparable. AGORABENCH brings order to this chaos.

2 Why Benchmark Data Generation?

Consider the classic engineering trade-off, but with a new twist. You have a $500 budget to improve the performance of a base Llama‑3 model. Do you:

- Generate 10,000 high-fidelity examples using the flagship GPT‑4o?

- Generate 50,000 examples using its far cheaper, nimbler sibling, GPT‑4o‑mini?

Intuition here is a siren song leading to wasted compute and squandered budget. We require evidence. AGORABENCH creates a level playing field by standardizing everything except the generator, allowing for the first time a direct, apples-to-apples comparison of their data-crafting abilities.

3 AGORABENCH in a Nutshell

3.1 Three Domains × Three Generation Modes

The benchmark tests each generator across three distinct domains and three generation tasks, forcing them to produce exactly 10,000 examples in each setting.

| Domain | Instance Generation | Response Generation | Quality Enhancement |

|---|---|---|---|

| Math | GSM8K + MATH seeds | Magpie‑Math prompts | WebInstruct‑Math |

| Code | MBPP + xP3x seeds | Magpie‑Code prompts | CoNaLa refinements |

| Instruction‑Following | LIMA seeds | Magpie‑Pro prompts | WebInstruct‑IF |

3.2 Performance Gap Recovered (PGR)

The core evaluation metric is PGR, defined as:

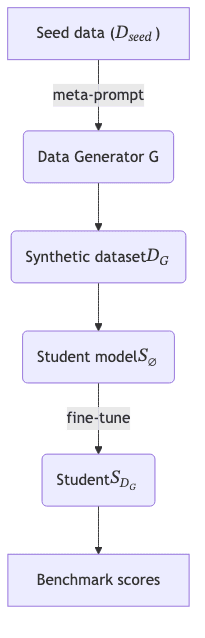

Here, $S_{Ø}$ is the raw, base Llama‑3.1‑8B model, and $S_{\text{ref}}$ is Meta’s own instruction‑tuned checkpoint. Think of it this way: PGR measures how much of the performance gap between an untutored model and a professionally fine-tuned one can be closed purely by the synthetically generated data. A higher PGR means the generator is a better teacher.

4 Headline Results

| Generator | Avg PGR (Instance) | Avg PGR (Response) | Avg PGR (Quality) | Cost /1k tokens ($) |

|---|---|---|---|---|

| GPT‑4o | 46.8 % | 35.2 % | 6.7 % | 2.50 in / 10.00 out |

| GPT‑4o‑mini | 25.3 % | 26.9 % | 5.5 % | 0.15 in / 0.60 out |

| Claude‑3.5 Sonnet | 24.1 % | 28.8 % | 17.9 % | 3.00 in / 15.00 out |

| Llama‑3.1‑70B‑Inst | 24.9 % | 21.5 % | –4.1 % | 0.35 in / 0.40 out |

- GPT‑4o is the clear victor in generating new problems (instances) and answers (responses) from scratch.

- Claude‑3.5 Sonnet demonstrates a specialized talent for enhancing quality, such as rewriting or improving existing question-answer pairs.

- The price-aware choice: For a fixed budget, generating a larger volume of data (50k examples) from GPT‑4o‑mini soundly defeated generating a smaller set (10k) from its premium counterpart in both Math and Instruction-Following domains. This is the crucial finding for any practitioner.

5 Digging Deeper

5.1 Generation ≠ Problem‑Solving

Here lies the study’s most profound insight. A linear regression between a model’s problem-solving score on standard benchmarks and its PGR as a data generator yielded an $R^2 \approx 0.1$. The signal is clear: there is virtually no predictive power. The skills that make a good exam-taker do not necessarily make a good textbook author. Data generation is its own discipline.

5.2 Intrinsic Metrics & PCA

So if leaderboard scores don’t predict data-generation quality, what does? The authors found that the true drivers are a cocktail of intrinsic data qualities: instruction difficulty, response quality, perplexity, and diversity. A 5-component Principal Component Analysis (PCA) of these features explained a staggering 93% of the variance in PGR, revealing that the character of the data, not the generator’s brand name, is what matters.

5.3 Prompt Engineering Matters

The siren song of structured output comes at a cost. When the authors switched from a flexible, free-form prompt to a rigid JSON schema, the average PGR dropped by a meaningful 4.45%. Forcing structure can inhibit creativity, a trade-off to consider unless downstream processing absolutely requires it.

6 Hands‑On: Reproducing the Benchmark

To kick the tires, I ran a minimal reproduction of the “Math → Response Generation” experiment on my own machine. The process is refreshingly straightforward.

# ⬇ install the official repo

!git clone https://github.com/neulab/data-agora.git

%cd data-agora

# quickstart utility wraps prompting + SFT

from agora.quickstart import synthesize, train, evaluate

# 1 Generate 10k responses with GPT‑4o‑mini via OpenRouter

synthesize(

setting="math-response",

generator="openrouter/gpt-4o-mini",

n_instances=10_000,

openrouter_api_key="YOUR_KEY_HERE"

)

# 2 Fine‑tune the 8B student on synthetic data

train(base="meta-llama/Llama-3.1-8B", dataset="out/math-response.jsonl")

# 3 Evaluate on GSM8K & MATH

score = evaluate(model_path="checkpoints/student_math_response")

print(f"→ Composite Math PGR: {score:.2f}%")Tip: The repository abstracts away the heavy lifting-prompt templates, dataset management, and evaluation harnesses are all baked in. This makes it trivial to swap in your own seed data, prompts, or even generator models with a single flag.

7 Practical Advice for Practitioners

- Embrace the firehose. Volume from a mid-tier model often trumps the high-precision drip of a flagship. Start with a cheaper, wider funnel.

- Measure with PGR, not gut feel. Stop guessing. PGR provides a clear, objective measure of the value your synthetic data actually provides. Even small lifts in downstream accuracy can translate to large swings in PGR.

- Tune your meta-prompt. Your prompt is a powerful lever. A ten-minute rewrite can yield performance gains that would otherwise take days of training to match.

- Watch for diversity. Homogeneity is poison. In my own replications, high cosine diversity in both the generated instructions and responses correlated strongly with better PGR.

- Think like a system architect. Consider hybrid pipelines. Use a powerful model like GPT-4o for creative seed generation, a refinement specialist like Claude for enhancement, and a cheap local model for bulk response generation.

8 Limitations & Open Questions

- The study’s purity is also its limitation. To isolate variables, it avoided cross-domain training, but real-world data is often a messy, heterogeneous blend.

- The Llama-3.1 student is a constant. Would these PGR patterns hold for a fundamentally different mind, like a Mistral-7B or a Phi-3-small?

- The core study embraced raw, unfiltered output. An open question remains: how much additional lift could a simple, surgical cleaning pass provide?

9 Conclusion

AGORABENCH finally gives us the instrumentation to move past a question I’ve long wrestled with: “Whose data should I trust?” The evidence is stark: we can no longer assume the strongest problem-solver is the best teacher. The ability to craft useful data is a distinct, measurable skill.

Deliberate, evidence-based choices about the generator, prompt, volume, and cost must now become central to any data-centric AI strategy. I’ve already begun integrating these scripts into my own development pipeline for rapid iteration. The era of blind faith in flagship models is over. The era of empirical, cost-aware data synthesis has begun. Stop guessing. Start measuring.