Key Takeaways (The Seductive Lie of Long Context)

- The Problem – We’re sold on the magic of 128 K context windows. Marketing decks showcase multi‑document reasoning, and codebases boast of their vast capacity. Yet, the brutal reality is that most open‑source LLMs start going blind long before the finish line. Empirically, they stumble beyond half of their advertised context because the distant position indices are statistical ghosts they’ve barely encountered during training.

- The Root Cause – The culprit is a simple, predictable flaw in the data diet. Pre‑training corpora create a pathologically left‑skewed position‑frequency distribution. Positions near the start of a sequence are seen constantly; positions out in the tail are severely under‑represented. The model simply hasn’t done the reps where it counts.

- The Solution – STRING (ShifTed Rotray position embeddING) is a clever, almost brazenly pragmatic hack. Instead of the brute‑force expense of re‑training, it performs microsurgery on the position matrix at inference time, re‑mapping the well‑trained, high‑frequency positions to overwrite the under‑trained tail. No fine‑tuning required. No nonsense.

- The Impact – This isn’t a marginal gain; it’s a leapfrog. We’re seeing plug‑and‑play improvements of 10 to 30 points on rigorous benchmarks like RULER and InfiniteBench. A Llama 3.1 70B armed with STRING demonstrably beats GPT‑4‑128 K on long‑context suites.

- The Cost – A rounding error. ≤ 5 GB of extra VRAM and an overhead of < 0.3 s/token compared to vanilla FlashAttention‑2. The ROI is staggering.

1 Why Long Context ≠ Effective Context

The promise of large context windows is seductive. We imagine feeding entire codebases or research papers to a model and getting back nuanced, holistic insights. Yet when I put this to the test and drop four “needles” across a 128 K document, a vanilla Llama 3.1‑8B (using RoPE) starts failing at around the 90 K‑token mark. The empirical truth is that most open LLMs effectively exploit ≤ 50 % of their advertised training length.
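To make the needle test concrete, here is a minimal sketch of how a four-needle haystack could be assembled and scored. It works on whitespace-separated words rather than real tokens, and `build_haystack` and `recall` are hypothetical helpers written for illustration, not part of any benchmark harness.

```python
def build_haystack(n_words, needles, depths, filler="lorem"):
    """Return n_words filler words with each needle sentence inserted at its
    fractional depth (0.0 = start of the document, 1.0 = end)."""
    if len(needles) != len(depths):
        raise ValueError("need exactly one depth per needle")
    words = [filler] * n_words
    # Insert the deepest needle first so earlier offsets stay valid.
    for needle, depth in sorted(zip(needles, depths), key=lambda p: -p[1]):
        pos = int(depth * n_words)
        words[pos:pos] = needle.split()
    return " ".join(words)


def recall(answer, secrets):
    """Fraction of secret values that appear verbatim in the model's answer."""
    return sum(s in answer for s in secrets) / len(secrets)


secrets = ["7421", "1138", "9005", "3307"]
needles = [f"The magic number for city {i} is {s}." for i, s in enumerate(secrets)]
doc = build_haystack(1000, needles, depths=[0.1, 0.35, 0.6, 0.9])
```

Feeding `doc` plus a question to the model and passing its reply to `recall` gives a score between 0 and 1; sweeping the document length and the depths shows where retrieval starts to break down.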

1.1 The Hidden Skew

The architecture itself contains the blueprint for this failure. For a training length $L$, Rotary Position Embedding (RoPE) defines a relative-position matrix $P$ that is inherently front-loaded.

Let’s look at the structure. It’s a Toeplitz matrix with entries $P[m][n] = m - n$:

$$
P = \begin{pmatrix}
0 & & & \\
1 & 0 & & \\
\vdots & \ddots & \ddots & \\
L-1 & \cdots & 1 & 0
\end{pmatrix}
$$

The frequency of any given relative distance $i$, described by

$$
f(i) = L - i, \qquad 0 \le i < L,
$$

declines linearly. A distance of 1 appears constantly; a distance of $L - 1$ appears once. This is before we even consider the data. Real-world text corpora exacerbate this; sequence lengths tend to follow Zipf-like distributions, meaning positions out in the far tail (beyond the first third of the context) might appear < 5 % of the time. The model is effectively blind out there because it’s never been properly trained to see.
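The linear decline is easy to check by brute force. The sketch below counts how often each relative distance occurs in a single causal attention pattern of length L; `relative_distance_counts` is a name made up for this illustration, and the count covers only the structural skew of one full-length sequence, not the additional skew from real corpus length distributions.

```python
from collections import Counter


def relative_distance_counts(L):
    """Count occurrences of each distance d = m - n over all causal pairs
    (query m, key n) with 0 <= n <= m < L."""
    counts = Counter(m - n for m in range(L) for n in range(m + 1))
    return [counts[d] for d in range(L)]


print(relative_distance_counts(8))  # [8, 7, 6, 5, 4, 3, 2, 1]
```

Distance d shows up exactly L - d times, so the largest distance is seen once per full-length sequence while the smallest is seen L times.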

2 Probing the Frequency–Ability Link

To confirm this suspicion, I trained two 1.3 B‑parameter TinyLlama variants on the SlimPajama‑627 B dataset, manipulating only the training window and total token exposure:

| Train window | Tokens spent for 1.4 K effective length |
|---|---|
| 4 K | 400 B |
| 2 K | 1 T |

When you plot the effective context length against the position frequency, both curves collapse onto each other. The conclusion is inescapable: frequency of exposure, not the nominal window size, is the true predictor of context utilisation. It’s not about the size of the playground; it’s about how much of it the model has actually played in.

3 Introducing STRING

STRING sidesteps the prohibitively expensive path of re‑training and instead performs targeted surgery on the position matrix at inference.

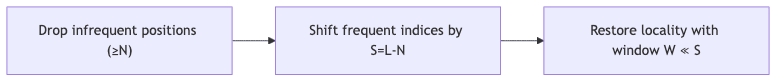

3.1 Three Simple Steps

- Drop: Discard the tail-end indices $i \ge L - S$, the ones the model barely knows.

- Shift: Remap the remaining, well-trained indices down to the bottom-left by an offset of $S$.

- Restore locality: Reintroduce a small, local attention window $W$ (typically 32–128) to ensure adjacent tokens can still see each other clearly.
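The three steps can be sketched as a plain position-id table. This is one reading of the procedure, not the reference implementation: it assumes that keys closer than S keep their original relative id, and that keys at distance S or more get the id `distance - S + W`. The function name `string_positions` and that exact rule are assumptions for illustration.

```python
def string_positions(L, S, W):
    """Relative position ids for a length-L causal pattern under the assumed rule.

    Entry [m][n] is the id used when query m attends to key n (n <= m).
    Future keys (n > m) are marked None.
    """
    if not (0 < S < L and W >= 0):
        raise ValueError("expected 0 < S < L and W >= 0")
    table = []
    for m in range(L):
        row = []
        for n in range(L):
            d = m - n
            if d < 0:
                row.append(None)        # causal mask: the future is not visible
            elif d < S:
                row.append(d)           # band near the diagonal keeps its original id
            else:
                row.append(d - S + W)   # far keys: shift down by S, then offset by W
        table.append(row)
    return table


for row in string_positions(L=8, S=4, W=2):
    print(["." if p is None else p for p in row])
```

Under this rule the largest id ever used is `L - S + W - 1`, so the rarely trained ids at the very end of the range are never requested, while the offset W keeps the smallest ids reserved for genuinely adjacent tokens.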

3.2 FlashAttention‑2 Implementation

Applying this is a one-liner.

# Adapted from HKUNLP/STRING

from transformers import AutoModelForCausalLM

from string_for_llama import apply_string

model = AutoModelForCausalLM.from_pretrained("meta-llama/Meta-Llama-3.1-8B")

model = apply_string(model, shift_ratio=1/3, window=128) # one‑liner!

Under the hood, STRING elegantly composes two attention kernels:

- A standard sliding‑window attention kernel focused on the diagonal for local context.

- A shifted self‑attention mechanism for the bottom‑left triangle, which uses the newly remapped RoPE identifiers.

The merged output is fully causal and maintains compatibility with the existing KV‑cache structure. It’s a clean, efficient fix.
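A common way to combine two attention passes over disjoint sets of keys is to carry each pass's log-sum-exp and reweight the partial outputs with it. The sketch below shows that merge for a single query with scalar values, to keep it short. Whether STRING's kernels merge in exactly this way is an assumption here, and the position handling (the shifted RoPE ids) is left out entirely; `partial_attention` and `merge` are illustrative names.

```python
import math


def partial_attention(scores, values):
    """Softmax-weighted average over one subset of keys, plus its log-sum-exp."""
    if not scores:
        return 0.0, float("-inf")   # empty subset contributes nothing
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    denom = sum(weights)
    out = sum(w * v for w, v in zip(weights, values)) / denom
    return out, m + math.log(denom)


def merge(out_a, lse_a, out_b, lse_b):
    """Combine two partial results as if one softmax had covered both subsets.

    Assumes at least one subset is non-empty.
    """
    m = max(lse_a, lse_b)
    w_a = math.exp(lse_a - m)
    w_b = math.exp(lse_b - m)
    return (w_a * out_a + w_b * out_b) / (w_a + w_b)


# One query attending to six keys: the last two are "local", the first four "far".
scores = [0.3, -1.2, 2.0, 0.7, -0.5, 1.1]
values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
far = partial_attention(scores[:4], values[:4])
local = partial_attention(scores[4:], values[4:])
merged = merge(*far, *local)
full, _ = partial_attention(scores, values)
```

Here `merged` matches `full` up to floating-point error, which is why two kernels covering complementary regions of the attention matrix can be fused without changing the result.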

4 The Payoff: Quantifying the Gains

So, how much does this pragmatic surgery actually help? The results are not subtle.

4.1 Needle‑in‑a‑Haystack (4‑Needle)

| Model (training length) | RoPE | STRING |
|---|---|---|
| TinyLlama‑1.3B (2 K) | 56.6 | 84.6 |
| LWM‑7B (32 K) | 31.8 | 50.4 |
| Llama 3.1‑8B (128 K) | 66.0 | 95.2 |

4.2 RULER @ 128 K

STRING elevates Llama 3.1 70B from a mediocre 66.6 to 81.7 and catapults Qwen 2 72B from 53.7 to 84.6. This isn’t just an improvement; it leap‑frogs GPT‑4‑128 K on a benchmark designed specifically to test this capability.

4.3 InfiniteBench Highlights

On the InfiniteBench suite, Llama 3.1 70B + STRING jumps from 56.9 % to 67 % on average, with a particularly impressive +12‑point gain on long‑context multi‑choice reasoning.

5 Efficiency Matters

Pragmatism demands efficiency. Benchmarks on an A100‑80G GPU show the cost is minimal:

- Time: An extra ~0.2 s/token at a 128 K context length.

- Memory: A peak increase of 4–5 GB.

For the vast majority of production inference pipelines, this delta is a rounding error, especially when weighed against the astronomical cost of fine‑tuning or re‑training for better long-context performance.

6 Tuning Guidelines

A word of advice from the trenches. The defaults are sensible, but if you need to tune:

| Symbol | Default | Rationale |
|---|---|---|
| S | L/3 | Overwrite the ≥ 33 % tail; gains plateau for larger shifts |
| W | 128 | Restores local fluency; typical values are 32–128 |

Tip – If your model still shows degradation near the very end of its context, the answer is to increase the shift (S), not the local window (W).
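To see why the shift is the lever that matters, it helps to put numbers on it. The sketch below converts a shift ratio into an absolute shift for a 128 K window and reports the largest position id that would still be used. It assumes far keys receive the id `distance - S + W`, which is one reading of the three steps rather than a confirmed detail of the implementation, and `string_params` is a hypothetical helper whose arguments merely mirror the `shift_ratio` and `window` names in the snippets of this post.

```python
def string_params(L, shift_ratio=1/3, window=128):
    """Return the absolute shift S, the window W, and the largest position id
    used under the assumed rule id = distance - S + W for distance >= S."""
    S = int(L * shift_ratio)
    if not 0 < S < L:
        raise ValueError("shift_ratio must give 0 < S < L")
    largest_id = max(S - 1, (L - 1) - S + window)
    return {"S": S, "W": window, "largest_id": largest_id}


print(string_params(131072))            # default: one-third shift, 128-token window
print(string_params(131072, 0.5, 64))   # larger shift, smaller window
```

With a one-third shift the largest id is 87,509; with a one-half shift and a 64-token window it drops to 65,599. Increasing S is what pulls the far end of the context back into well-trained territory, while W only moves that bound by a few dozen positions.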

7 STRING vs. Extrapolation Parlor Tricks

This is not another extrapolation gimmick. Methods like NTK‑aware RoPE, YaRN, ReRoPE, Self‑Extend, or DCA all focus on stretching or repeating positional information to push a model beyond its training length. They are essentially interpolation tricks.

STRING does the opposite: it optimises performance within the trained length by intelligently re-using the most robust parts of the model’s knowledge. This is why it avoids the degradation on short-context tasks that plagues extrapolation methods and, crucially, preserves the original KV‑cache size.

8 Getting Started

Putting this to work is trivial.

pip install git+https://github.com/HKUNLP/STRING.git

from transformers import AutoTokenizer, AutoModelForCausalLM

from string_for_llama import apply_string

tok = AutoTokenizer.from_pretrained("meta-llama/Meta-Llama-3.1-8B")

mdl = AutoModelForCausalLM.from_pretrained("meta-llama/Meta-Llama-3.1-8B", torch_dtype="auto")

# Patch the model with a 50% shift and a 64-token local window

mdl = apply_string(mdl, shift_ratio=0.5, window=64)

# Ready to go

resp = mdl.generate(**tok("...your 100K‑token prompt...", return_tensors="pt"))

print(tok.decode(resp[0]))

The implementation also provides custom CUDA kernels, making it fully compatible with vLLM 0.4+ for high-throughput inference.

9 Limitations & Future Work

No solution is a silver bullet. Intellectual honesty requires acknowledging the boundaries:

- Training‑side Fixes – The truly elegant solution is to fix the problem at the source. Re‑balancing position frequencies during pre‑training is a promising but still under‑explored frontier.

- Beyond RoPE – STRING is designed for rotary embeddings. Models built on absolute or ALiBi‑style position embeddings will require their own analogous tricks.

- Extreme Windows – While effective up to 256 K, empirical evidence for performance at even longer contexts is still sparse.

I’m excited to see how future open‑weight models will eventually train away this skew by design. But until that day comes, STRING is my go‑to, battle‑tested patch for any production workload that needs to reliably read more than 50 K tokens.