The Catechism of Obedience

The catechism for building a useful chatbot is simple: instruction tuning. We take a raw, pretrained language model (LM) and feed it a painstaking diet of {instruction, response} tuples. Through this ritual, we coax it from being a mere text-completion engine into a helpful, obedient assistant. This has been the accepted wisdom, the bedrock of my own work adapting open-weight LLMs. The goal is explicit: teach the model to map a query to a desired output.

But a new paper from Hewitt et al., “Instruction Following without Instruction Tuning,” lands like a thrown gauntlet, challenging this entire paradigm. It suggests that our meticulous efforts might be just one path up the mountain, and perhaps not even the most direct one. The authors demonstrate that models can learn to follow instructions implicitly, without ever seeing an explicit instruction during the finetuning process. This forces a recalibration of how we think about model specialization, alignment, and safety.

The key findings are almost counter-intuitive:

- Finetuning on responses only-with no corresponding instructions-is surprisingly effective, achieving nearly half the performance of a fully instruction-tuned model.

- Training on a single, narrow task like poetry or Python coding still grants the model general instruction-following abilities on completely unrelated topics.

- A trivial, hand-crafted three-rule adapter can nudge a base model towards chatbot-like behavior, no training data required.

This suggests that specialized models may inadvertently become generalists. The beast we think we are caging is, in fact, learning to pick the lock on its own.

Why Does Instruction Tuning Work?

The classic approach to instruction tuning involves minimizing the conditional cross-entropy over a dataset of pairs:

Here, $x$ is the instruction, $y$ is the desired response, and the optimization process nudges the model’s parameters $\theta$ to prefer helpful completions over rote continuations.

But what happens if we remove $x$ from the equation? Or if we replace the diverse set of responses $y$ with text from a single, narrow domain? The prevailing assumption has been that the crucial instruction-following link would be severed. Hewitt et al. prove this assumption wrong.

Experimental Setup

The authors’ methodology is clean and direct, providing a solid foundation for their claims.

- Base models: Llama‑2‑7B and OLMo‑7B (Feb 2024 snapshot).

- Classic baseline: Full instruction tuning on the LIMA dataset (1,030 diverse instruction-response pairs).

- Evaluator: AlpacaEval 2, which conducts head‑to‑head comparisons against a reference model, controlling for response length.

- Decoding: Greedy decoding is used throughout to expose the model’s raw, intrinsic probabilities, removing the stochasticity of sampling.

Datasets for Single‑Task Finetuning

To test the bounds of this implicit learning, they used several narrow, single-domain datasets:

| Dataset | Size | Modality |

|---|---|---|

| MBPP | 374 | English ➜ Python code |

| GSM‑8K subset | 1,000 | Math word‑problem chains |

| Poetry | 571 | Famous poems |

| Recipes | 1,000 | Ingredient list + steps |

| Chess PGN | 1,000 | Move sequences |

1 – Response Tuning

The first experiment is the most audacious: “What if we remove the instructions and only teach the model what good answers look like?”

This “response tuning” method involves finetuning the base LM only on the response texts from the LIMA dataset, completely omitting the instructions. The model never sees a prompt. And yet, when presented with fresh prompts at inference time, it obeys.

Win‑rate vs instruction‑tuned (AlpacaEval)

Llama‑2‑7B : 43.3 % (base: 2.4 %)

OLMo‑7B : 43.7 % (base: 4.7 %)A model trained on answers alone gets almost halfway to the performance of a fully instruction-tuned model. How?

The Intuition: Unveiling Latent Capabilities

The capability was likely there all along. Pretrained LMs already possess a response-ranking ability. For a given instruction $x$, they are more likely to assign a higher probability to a correct, relevant response $y$ than to an unrelated one $y’$:

The problem is that many other nonsensical completions might still have higher probabilities than the correct answer. Finetuning on the marginal distribution of good responses $y$ seems to be enough to lift these “correct” answers above the noise floor, making them the most likely sequences to be decoded.

Minimal PyTorch Loop

The implementation is refreshingly simple. Here’s a conceptual loop using HuggingFace:

# Simplified response‑tuning loop using HuggingFace

from transformers import AutoModelForCausalLM, AutoTokenizer

from datasets import load_dataset

model = AutoModelForCausalLM.from_pretrained("meta-llama/Llama-2-7b-hf")

tok = AutoTokenizer.from_pretrained("meta-llama/Llama-2-7b-hf")

tok.pad_token = tok.eos_token

dset = load_dataset("json", data_files="lima_responses.jsonl")

def collate(batch):

txt = [tok.bos_token + ex["response"] + tok.eos_token for ex in batch]

out = tok(txt, padding=True, return_tensors="pt")

out["labels"] = out["input_ids"].clone()

return out

trainer = transformers.Trainer(

model=model,

args=transformers.TrainingArguments("resp_tuned", per_device_train_batch_size=8, learning_rate=1e-5, num_train_epochs=10),

train_dataset=dset["train"],

data_collator=collate,

)

trainer.train()(The complete, runnable scripts are available in the implicit‑ins GitHub repo.)

2 – Single‑Task Finetuning

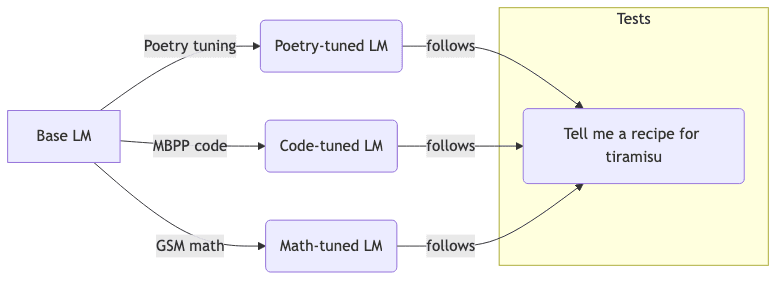

Even more counter-intuitively, the effect holds when the training data is not just responses, but responses from a single, unrelated domain. Models trained to produce only poems or only Python code still manage to answer arbitrary instructions sensibly.

The quantitative results show a clear, if weaker, generalization:

| Finetuning Task | Llama‑2‑7B Win‑rate |

|---|---|

| Math (GSM) | 23.7 % |

| Poetry | 22.9 % |

| Code (MBPP) | 16.9 % |

| Recipes | 14.6 % |

| Chess | 2.1 % |

Only the chess model fails to generalize. The likely culprit is the rigid, near-deterministic structure of Portable Game Notation (PGN) files. Every PGN begins with the same boilerplate headers, offering almost zero lexical diversity for the model to latch onto as a general response style.

Behavioural Drift

The models aren’t perfect generalists. A fascinating behavioral drift emerges: when a prompt resembles the finetuning domain, the model’s style regresses. A math-related question posed to the GSM-tuned model might yield an answer that ends with the characteristic #### 72 format. Ask it about history, however, and it behaves like a standard chatbot. The specialized training creates a gravitational pull, but one that can be escaped with sufficient contextual distance.

3 – A Three‑Rule Adapter

Could an even simpler, non-training-based intervention elicit instruction following? The answer is yes. The authors compose the base model’s probability distribution $p_{base}$ with a tiny, hand-coded “rules” model $p_{rules}$ using a product-of-experts approach:

This adapter simply nudges the token probabilities at each step based on three common-sense heuristics.

The Rules

- Shorter is better: Linearly boost the probability of the EOS (end-of-sequence) token as the response gets longer. This encourages conciseness.

- Discourage repetition: Apply a penalty to any token that has already appeared in the generated response.

- Mute unhelpful tokens: Down-weight a small, hand-picked list of 15 frequent but typically unhelpful tokens (e.g.,

I,should,<, etc.).

This simple adapter, implemented in a mere ~70 lines of Python, elevates Llama-2-7B’s AlpacaEval win-rate from a baseline of 2.4% to an impressive 24.4%. It turns a raw completion engine into a passable chatbot with almost no effort.

Practical Implications

The findings here aren’t just academic; they have direct consequences for how we should be building and evaluating models.

- Safety First: The most immediate, and perhaps sobering, implication is for safety. We can no longer assume that a model fine-tuned on a narrow, seemingly innocuous domain-like poetry or chess moves-is sterile to general-purpose queries. Restricting the training set is not a reliable containment strategy. Any finetuned model should be evaluated as a potential generalist.

- Cheaper Alignment: Response-tuning presents an intriguing, low-cost pathway for pre-alignment. If training on 1,000 answers gets you over 40% of the way to a fully instruction-tuned model, it could become a valuable first step in a more complex alignment workflow, saving time and compute.

- Guarding Task-Specific Models: If you truly require a model that performs only a single task, relying on the training data alone is insufficient. You must implement explicit guards during inference, such as output templates, constrained decoding, or other content filtering mechanisms.

Reproducing the Results

The authors have made their work exceptionally easy to reproduce. Simply clone the repository and use the provided Make targets.

# 1. Setup the environment

conda env create -f environment.yml

conda activate implicit-ins

# 2. Run response tuning on Llama‑2‑7B

make train_resp MODEL=llama2-7b DATA=lima LR=1e-5 EPOCHS=10

# 3. Evaluate the resulting model

make eval MODEL=resp_tuned_llama2-7bThe repository is built on the excellent Open-Instruct toolkit and comes with AlpacaEval integration out of the box, making replication straightforward.

Closing Thoughts

These findings are a potent reminder that pretraining is not about memorizing the web but baking in a latent, structural understanding of intent and communication patterns. When we finetune, we aren’t just teaching a new skill from scratch. We are carving a channel for a capability that was already there, dormant, waiting for a path to the surface.

Models don’t just learn what we show them; they reveal what they already knew. The ghost, it turns out, was always in the machine. Going forward, I’ll be treating my own domain-specific models with a new level of caution, evaluating them as if they could wake up at any moment and decide to be a general chatbot. If you run similar experiments, be prepared-what you find might just surprise you.