Key Takeaways

- Universal Chaotic Forecaster – Panda is the first pretrained foundation model that can zero-shot forecast unseen chaotic systems, from ODEs to PDEs.

- Synthetic-First Philosophy – The authors algorithmically evolve 20,000 novel chaotic systems, creating a vast training ground without touching messy real-world data.

- Dynamics-Aware Architecture – A patched Transformer, augmented with physics-informed embeddings and interleaved channel attention, outmatches larger, more generic time-series models.

- Emergent Capability – Trained only on low-dimensional ODEs, Panda generalizes to turbulent fluid flows and complex electronic circuits, demonstrating a true phase change in capability.

- A New Scaling Law – Forecast error decays as a power law in the diversity of training systems, not just the raw number of data points, pointing to new scaling principles for Scientific ML.

Introduction

The dance between chaos and order is a fundamental tension in the universe. Forecasting chaotic systems feels like wrestling with entropy itself: small errors don’t just accumulate, they explode. For years, the scientific ML playbook has been split in two: either bespoke, hand-crafted models for a single system, or gargantuan Transformers treating time-series data as just another sequence to be memorized.

Panda slices through this dichotomy. It’s not another blunt instrument. It’s a scalpel, designed with the conviction that you can teach a model the very grammar of dynamics.

Why Another Forecast Model?

The core brutality of chaos is baked into its definition: sensitive dependence on initial conditions. Your model isn’t just fighting drift; it’s fighting exponential divergence, with nearby trajectories separating at a rate set by the system’s largest Lyapunov exponent. This is why pattern-matching, the bread and butter of most sequence models, is doomed. You cannot memorize chaos. You must learn the propagator, the underlying operator that evolves the system state forward in time while respecting its invariants.
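To make that divergence concrete, here is a minimal sketch (mine, not the paper's) that integrates the Lorenz system twice with a hand-rolled RK4 stepper, starting the two runs 1e-8 apart. The step size, horizon, and initial condition are arbitrary illustrative choices.

```python
import math

def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz equations with the classic parameters."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

def rk4_step(f, state, dt):
    """One classical fourth-order Runge-Kutta step."""
    def shifted(k, h):
        return tuple(s + h * ki for s, ki in zip(state, k))

    k1 = f(state)
    k2 = f(shifted(k1, dt / 2))
    k3 = f(shifted(k2, dt / 2))
    k4 = f(shifted(k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )

def separation_over_time(eps=1e-8, dt=0.01, steps=2500, every=500):
    """Track the distance between two trajectories that start eps apart."""
    a = (1.0, 1.0, 1.0)
    b = (1.0 + eps, 1.0, 1.0)
    out = [(0.0, math.dist(a, b))]
    for i in range(1, steps + 1):
        a = rk4_step(lorenz, a, dt)
        b = rk4_step(lorenz, b, dt)
        if i % every == 0:
            out.append((i * dt, math.dist(a, b)))
    return out

for t, d in separation_over_time():
    print(f"t = {t:5.1f}  separation = {d:.3e}")
```

The separation grows by many orders of magnitude before it saturates at the size of the attractor, which is why a forecaster that is merely "close" at step one cannot stay close for long.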

The Panda authors wagered that embedding physical priors directly into the architecture (Takens’s embedding theorem, the reality of cross-channel coupling, the assumption of continuity) wasn’t just a nice-to-have. It was the only path to genuine generalization. The results vindicate their thesis.
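For readers who haven't met Takens's theorem in practice, the textbook construction behind it is the delay embedding: stack lagged copies of a single observable to reconstruct a state vector. The sketch below shows only that generic construction; the function name, `dim`, and `lag` are my own illustrative choices, and nothing here is claimed to match how Panda uses the idea internally.

```python
import math

def delay_embed(series, dim, lag):
    """Return delay vectors (x[t], x[t - lag], ..., x[t - (dim - 1) * lag])."""
    if dim < 1 or lag < 1:
        raise ValueError("dim and lag must be positive integers")
    start = (dim - 1) * lag  # first index with a full history available
    return [
        tuple(series[t - k * lag] for k in range(dim))
        for t in range(start, len(series))
    ]

# Toy scalar observable: a sampled sine wave.
x = [math.sin(0.1 * t) for t in range(200)]
vectors = delay_embed(x, dim=3, lag=5)
print(len(vectors), vectors[0])
```

Each output tuple is a point in a reconstructed state space; for a chaotic system observed through one variable, a suitable `dim` and `lag` would need to be chosen with care rather than hard-coded as here.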

Dataset: Evolving 20,000 Strange Attractors

Instead of scraping the messy, noisy data of the real world, the authors became digital gods. They forged a universe of synthetic chaos. The process is a form of algorithmic evolution:

- Genesis – Start with a pantheon of 135 classic chaotic ODEs (Lorenz, Rössler, etc.).

- Mutation – Jitter their parameters with Gaussian noise, exploring the adjacent possible.

- Recombination – Force parent systems to couple via skew-products, creating novel, hybrid dynamics.

- Survival of the Chaotic – A brutal selection process, using fast chaos estimators, discards anything that collapses into boring stability.
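The four steps above can be sketched in a few dozen lines. This is a toy version under loud assumptions: only two founders (Lorenz and Rössler) instead of 135, a relative-noise mutation, a simple additive coupling with a made-up strength `kappa`, and a Benettin-style largest-Lyapunov estimate standing in for the "fast chaos estimators" the authors mention. None of the names or settings come from the Panda codebase.

```python
import math
import random

def lorenz(s, p):
    x, y, z = s
    return [p["sigma"] * (y - x), x * (p["rho"] - z) - y, x * y - p["beta"] * z]

def rossler(s, p):
    x, y, z = s
    return [-y - z, x + p["a"] * y, p["b"] + z * (x - p["c"])]

# Genesis: two stand-in founders (the post describes 135 of them).
FOUNDERS = [
    (lorenz, {"sigma": 10.0, "rho": 28.0, "beta": 8.0 / 3.0}),
    (rossler, {"a": 0.2, "b": 0.2, "c": 5.7}),
]

def mutate(params, rng, scale=0.05):
    """Mutation: jitter each parameter with relative Gaussian noise."""
    return {k: v * (1.0 + rng.gauss(0.0, scale)) for k, v in params.items()}

def skew_product(driver, response, kappa=0.1):
    """Recombination: the driver evolves freely and forces the response."""
    (f, pf), (g, pg) = driver, response

    def field(s):
        dx, dy = f(s[:3], pf), g(s[3:], pg)
        return dx + [dyi + kappa * xi for dyi, xi in zip(dy, s[:3])]

    return field

def rk4(field, s, dt):
    def nudge(k, h):
        return [si + h * ki for si, ki in zip(s, k)]

    k1 = field(s)
    k2 = field(nudge(k1, dt / 2))
    k3 = field(nudge(k2, dt / 2))
    k4 = field(nudge(k3, dt))
    return [si + dt / 6 * (a + 2 * b + 2 * c + d)
            for si, a, b, c, d in zip(s, k1, k2, k3, k4)]

def largest_lyapunov(field, s0, dt=0.01, steps=3000, burn_in=500, d0=1e-8):
    """Benettin-style estimate: follow a nearby trajectory and renormalise."""
    s = list(s0)
    for _ in range(burn_in):
        s = rk4(field, s, dt)
    t = [s[0] + d0] + s[1:]
    total = 0.0
    for _ in range(steps):
        s, t = rk4(field, s, dt), rk4(field, t, dt)
        d = math.dist(s, t)
        if not math.isfinite(d) or d == 0.0:
            return float("-inf")  # blew up or collapsed: reject
        total += math.log(d / d0)
        t = [si + (ti - si) * d0 / d for si, ti in zip(s, t)]
    return total / (steps * dt)

def evolve(n_children=3, seed=0):
    """Selection: keep only children with a positive exponent estimate."""
    rng = random.Random(seed)
    survivors = []
    for _ in range(n_children):
        (f, pf), (g, pg) = rng.sample(FOUNDERS, 2)
        child = skew_product((f, mutate(pf, rng)), (g, mutate(pg, rng)))
        lam = largest_lyapunov(child, [1.0] * 6)
        if lam > 0.0:
            survivors.append((f.__name__, g.__name__, round(lam, 3)))
    return survivors

print(evolve())
```

A short integration window like this gives only a rough exponent estimate, and children that escape to infinity are simply rejected. A production pipeline would presumably use longer runs and additional checks.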

This pipeline didn’t just generate data; it generated knowledge. The result is a library of ~20,000 unique chaotic worlds, and a crucial insight: the model’s performance scales not with the sheer volume of data points, but with the diversity of the dynamics it has witnessed. A new neural scaling law for the sciences.
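A power law in the number of training systems is a straight line on log-log axes, so checking for one is a linear regression away. The sketch below fits `error = c * n**(-alpha)` by least squares in log space. The numbers are placeholders generated from a made-up law (alpha = 0.5, c = 2.0) purely to exercise the fit; they are not results from the paper.

```python
import math

def fit_power_law(n_systems, errors):
    """Least-squares fit of error = c * n**(-alpha) in log-log space."""
    xs = [math.log(n) for n in n_systems]
    ys = [math.log(e) for e in errors]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return -slope, math.exp(my - slope * mx)  # (alpha, c)

# Placeholder data from a made-up law, NOT measurements from the paper.
n_systems = [100, 300, 1000, 3000, 10000]
errors = [2.0 * n ** -0.5 for n in n_systems]

alpha, c = fit_power_law(n_systems, errors)
print(f"alpha = {alpha:.3f}, c = {c:.3f}")
```

With real measurements you would swap in the observed error at each training-set diversity and inspect the residuals before trusting the exponent.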

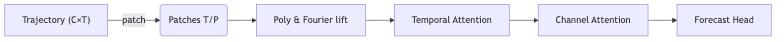

Architecture Highlights

The architecture is an exercise in applied philosophy. It’s not just another Transformer. It’s a Transformer that has read the physics books.

| Component | Purpose |
|---|---|
| PatchTST backbone | Enforces local continuity, reduces sequence length |
| Polynomial / RFF features | Approximate a Koopman lift for linear evolution |
| Channel attention | Captures deterministic cross-variable coupling |
| Masked-patch pre-training | Teaches smooth completion & continuity |

The encoder-only design is a deliberate choice, sidestepping the autoregressive trap where a model begins “parroting” its own recent outputs and drifting into fantasy. Panda is forced to reason from the initial state and not merely echo itself.
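To give a feel for how patching, a random-Fourier-feature lift, and interleaved attention fit together, here is a stripped-down NumPy sketch. Everything in it is an assumption made for illustration: the patch length of 16, the 48 random features, and attention with identity projections and a single head. It shows the data flow only and is not the Panda implementation.

```python
import numpy as np

def patchify(x, patch_len, stride):
    """Split a (channels, time) array into (channels, n_patches, patch_len)."""
    n_patches = (x.shape[1] - patch_len) // stride + 1
    idx = np.arange(patch_len)[None, :] + stride * np.arange(n_patches)[:, None]
    return x[:, idx]

def rff_lift(patches, n_features, rng):
    """Append random Fourier features cos(patch @ W + b) to each patch."""
    patch_len = patches.shape[-1]
    W = rng.normal(size=(patch_len, n_features))
    b = rng.uniform(0.0, 2.0 * np.pi, size=n_features)
    return np.concatenate([patches, np.cos(patches @ W + b)], axis=-1)

def self_attention(tokens):
    """Single-head softmax attention with identity projections.

    Mixes information along the second-to-last axis of `tokens`.
    """
    scores = tokens @ np.swapaxes(tokens, -1, -2) / np.sqrt(tokens.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ tokens

def interleaved_block(tokens):
    """Attend across patches (time), then across channels, with residuals."""
    tokens = tokens + self_attention(tokens)     # (C, P, D): mix over patches
    swapped = np.swapaxes(tokens, 0, 1)          # (P, C, D)
    swapped = swapped + self_attention(swapped)  # mix over channels
    return np.swapaxes(swapped, 0, 1)            # back to (C, P, D)

rng = np.random.default_rng(0)
x = rng.normal(size=(3, 512))                       # 3 channels, 512 steps
patches = patchify(x, patch_len=16, stride=16)      # (3, 32, 16)
tokens = rff_lift(patches, n_features=48, rng=rng)  # (3, 32, 64)
out = interleaved_block(tokens)                     # (3, 32, 64)
print(patches.shape, tokens.shape, out.shape)
```

The channel step is the part univariate forecasters skip: after the axis swap, each patch position attends over the variables of the system rather than over time.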

Results Overview

Zero-Shot on Unseen Systems

- Outmatches Chronos-SFT (a model 10x its size) by ~30% sMAPE on 9,300 unseen synthetic systems.

- Maintains its edge even when rolled out autoregressively 8x past its training horizon.
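For reference, sMAPE (symmetric mean absolute percentage error) is commonly defined on a 0 to 200 scale as below. Conventions vary between papers, for example in how zero denominators are handled, so treat this as one common variant rather than the exact metric code behind the numbers above.

```python
def smape(y_true, y_pred):
    """Symmetric MAPE in percent (0 = perfect, 200 = worst case)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for t, p in zip(y_true, y_pred):
        denom = abs(t) + abs(p)
        # Treat a 0/0 term as zero error (one convention among several).
        total += 0.0 if denom == 0.0 else 2.0 * abs(t - p) / denom
    return 100.0 * total / len(y_true)

print(smape([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))  # 0.0
print(smape([1.0, 1.0], [-1.0, -1.0]))          # 200.0
```

Because the denominator includes the prediction, the metric is bounded, which matters for chaotic forecasts that can end up far from the truth.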

The Real-World Gauntlet

- Double pendulum from optical tracking data.

- C. elegans posture dynamics (eigen-worms).

- 28-node electronic oscillator network – where its advantage grows with coupling strength, proving the value of its channel-aware design.

The Emergent Leap: Partial Differential Equations

This is the kicker. Without ever seeing a PDE during training, Panda forecasts the Kuramoto-Sivashinsky flame front and the von Kármán vortex street better than specialized PDE surrogate models. It suggests the model learned something more fundamental than the ODEs it was fed; it learned a piece of the language of fluid dynamics.

Interpreting Panda’s Inner Workings

Cracking open the black box reveals not statistical tea leaves, but something startlingly like physics. Attention rollout analyses show the model developing non-linear resonance grids reminiscent of bispectra from turbulence studies. Cross-channel attention maps reveal how it implicitly learns spatio-temporal coupling.
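Attention rollout, in its generic form (Abnar & Zuidema, 2020), is simple enough to sketch: average the heads in each layer, mix in the identity to account for residual connections, and multiply the layers together. The version below runs on random attention maps and is only the standard recipe; the exact analysis the Panda authors ran may differ.

```python
import numpy as np

def attention_rollout(attentions):
    """Combine per-layer attention into one token-to-token attribution map.

    attentions: list of (heads, tokens, tokens) arrays, first layer first,
    each row-normalised as softmax attention would be.
    """
    n = attentions[0].shape[-1]
    rollout = np.eye(n)
    for layer in attentions:
        a = layer.mean(axis=0)              # average over heads
        a = 0.5 * a + 0.5 * np.eye(n)       # account for the residual path
        a /= a.sum(axis=-1, keepdims=True)  # keep rows normalised
        rollout = a @ rollout               # compose with earlier layers
    return rollout

# Random stand-in attention: 4 layers, 2 heads, 6 tokens.
rng = np.random.default_rng(0)
logits = rng.normal(size=(4, 2, 6, 6))
attn = np.exp(logits) / np.exp(logits).sum(axis=-1, keepdims=True)

rollout = attention_rollout(list(attn))
print(rollout.shape, rollout.sum(axis=-1))
```

Row `i` of the result estimates how much each input token contributes to output token `i` once all layers are taken into account.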

Is it just curve-fitting? Maybe. But these attention patterns suggest it’s building an internal representation of the system’s physics, picking up the mechanism rather than (just) memorizing the phenomenon.

Hands-On: Running Panda in Your Lab

The authors, to their credit, have not kept this locked in a lab. You can pull it down and run it yourself.

    import torch
    from panda.model import PandaForecast

    # Load the pretrained weights from Hugging Face (requires PyTorch > 2.1)
    model = PandaForecast.from_pretrained("GilpinLab/panda").eval()

    # Example: forecast the Lorenz attractor
    # Context is laid out as (batch, channels, time steps)
    context = torch.randn(1, 3, 512)  # placeholder; replace with real data

    # Inference only, so skip gradient tracking
    with torch.no_grad():
        pred = model(context)  # shape (1, 3, 128)

    print(pred.shape)

Model weights & training scripts are BSD-3-licensed on GitHub and mirrored on Hugging Face.
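If you want forecasts longer than a single 128-step window, the usual trick is an autoregressive rollout: forecast, append the prediction to the context, slide the window, repeat. The sketch below is model-agnostic. `forecast_fn` is a hypothetical callable you would wrap around your own model call, and a trivial persistence forecaster stands in for it here so the example runs without any weights.

```python
import numpy as np

def rollout(forecast_fn, context, total_steps, context_len):
    """Repeatedly forecast, append the prediction, and slide the window.

    forecast_fn maps a (channels, context_len) array to (channels, horizon).
    """
    window = context[:, -context_len:]
    chunks, produced = [], 0
    while produced < total_steps:
        pred = forecast_fn(window)
        chunks.append(pred)
        produced += pred.shape[1]
        # Feed the model its own output, keeping the window length fixed.
        window = np.concatenate([window, pred], axis=1)[:, -context_len:]
    return np.concatenate(chunks, axis=1)[:, :total_steps]

def persistence(window, horizon=128):
    """Stand-in forecaster: repeat the last observed value."""
    return np.repeat(window[:, -1:], horizon, axis=1)

context = np.random.default_rng(0).normal(size=(3, 512))
long_pred = rollout(persistence, context, total_steps=1024, context_len=512)
print(long_pred.shape)  # (3, 1024)
```

Errors compound in this mode, since each window increasingly consists of the model's own predictions, so it is worth tracking forecast error as a function of rollout depth.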

Strengths and Limitations

No model is a panacea. Panda’s architecture presents a clear set of trade-offs, outlining the map for future work.

| 👍 Strength | ⚠️ Limitation |
|---|---|
| Emergent PDE skill without direct supervision | Trained only on 3D ODEs; scaling to high-dim channels remains open |
| Compact (20M) yet outperforms much larger baselines | MLM pre-training slightly harms long-term rollout stability |
| Fully synthetic data avoids privacy & scarcity issues | Real-world noise and non-stationarity are not covered in training |

Comparison to Contemporary Work

The distinction between Panda and its contemporaries is one of philosophy. Models like Chronos and TimesFM treat multivariate time-series as a bag of independent sequences, ignoring the deterministic coupling that is the very soul of a physical system. Panda’s channel attention makes this coupling a first-class citizen, which is a decisive architectural advantage.

Meanwhile, earlier continuous-time models like Neural ODEs, while elegant, often lose their nerve in the face of long-term chaotic integration. By framing the problem as sequence-to-sequence, Panda gains a brute-force robustness that continuous models lack.

Outlook

Panda isn’t an endpoint; it’s a foundation model in the truest sense: a base camp for the next ascent in scientific AI. Here’s what I’ll be watching for:

- Scaling to the Mesoscale: Can sparse channel attention let this scale to the thousands of variables needed for climate or neuroscience?

- Imbuing Physical Law: The next step is to bake in hard constraints like energy conservation via symplectic or Hamiltonian inductive biases.

- From Observer to Actor: The most exciting frontier is using Panda’s embeddings for control and planning. Imagine a decision transformer that can navigate, and even tame, chaotic regimes.

The project is active, and the authors are hinting at larger models. This space is heating up.

Conclusion

Panda is a powerful demonstration that to understand the world, you don’t always need more data from it; sometimes, you need better abstractions. By combining a universe of synthetic chaos with the hard-won priors of physics, the model achieves a level of generalization that was, until recently, thought to be the exclusive domain of bespoke, system-specific models.

For anyone wrestling with the messy, unpredictable dynamics of the real world, this is more than just another paper. It’s a new tool, and a new way of thinking. The repository is worth your time.