Key Takeaways

- Adapters from Prose, Not Process: Text‑to‑LoRA (T2L) generates a functional LoRA adapter from a single natural-language command, collapsing the entire fine-tuning workflow into a single forward pass.

- A Library in a Pinhead: It distills the knowledge of hundreds of task-specific LoRAs into a single, compact hypernetwork, vaporizing the storage and management overhead of maintaining an adapter fleet.

- Plausible Zero‑Shot Generalisation: On unseen benchmarks, T2L closes a significant portion of the performance gap to bespoke fine-tunes while using under a quarter of the FLOPs of brute-force prompt engineering (3-shot in-context learning).

- Open and Actionable: Sakana AI has released the code, weights, and even a web UI, dropping the barrier to experimentation to virtually zero.

Why Do We Still Need Adapters in 2025?

Large language models possess staggering generalist capabilities, yet anyone shipping them at scale knows the familiar friction of specialization:

- The Data Grind: Collecting, cleaning, and balancing a new dataset for every bespoke task.

- The GPU Tax: Even parameter-efficient methods like LoRA fine-tuning chew through expensive compute cycles and, more importantly, developer patience.

- The Management Headache: A proliferating fleet of per-task adapters that are difficult to manage, compose, and deploy efficiently.

T2L offers a compelling answer: treat text as the interface, a compact hypernetwork as the compiler, and the resulting LoRA matrices as the transient, disposable executable.
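In LoRA terms (Hu et al., 2021), the "executable" is a pair of low-rank factors added to each frozen weight matrix. Below is a compact way to write what T2L computes. The notation is chosen here for illustration and is not taken from the paper:

- $z$ is the task-description embedding.
- $E_{\text{mod}}$ and $E_{\text{layer}}$ are the learned module-type and layer-depth embeddings.
- $h_\theta$ is the hypernetwork.
- $\alpha$ is the usual LoRA scaling constant.

$$
\begin{aligned}
W' &= W_0 + \frac{\alpha}{r}\, B A, \qquad W_0 \in \mathbb{R}^{d \times k},\; B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k) \\
(A_{m,\ell},\, B_{m,\ell}) &= h_\theta\big(z,\; E_{\text{mod}}[m],\; E_{\text{layer}}[\ell]\big)
\end{aligned}
$$

Generating an adapter then means evaluating $h_\theta$ for each targeted (module $m$, layer $\ell$) pair, with no gradient steps involved.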

How Does Text‑to‑LoRA Work?

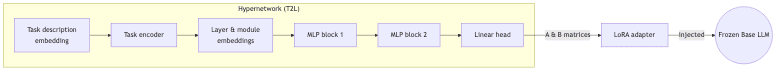

- Semantic Fingerprint: T2L ingests a sentence-level embedding (from a model like GTE-large or a Mistral CLS token) that captures the semantic essence of the task description.

- Learned Conditionals: The hypernetwork doesn’t just take in the task description; it also receives learned embeddings for the module type (e.g., q_proj, v_proj) and layer depth. This allows a single, lean network to generate the correct weight adjustments for every targeted layer in the base LLM.

- Head Architecture: The paper explores three model sizes (L/M/S) that make different trade-offs between parameter count and expressive power. The final linear head can be configured to predict the full A and B matrices, share one and predict the other, or predict just a single, shared rank slice.

- Training Regimens:

  - Reconstruction: The fast path. Distill a pre-existing library of LoRA adapters into the hypernetwork. This is quick but yields weaker generalization.

  - Supervised Fine-Tuning (SFT): The high road. Train end-to-end on a diverse corpus of 479 tasks from Super-Natural-Instructions. It’s slower, but the resulting adapters are markedly better, especially zero-shot on unseen tasks.
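To make the conditioning concrete, here is a toy NumPy sketch of an L-style head, meaning one that predicts both A and B, together with a reconstruction-style objective. Everything in it is an assumption for illustration:

- A random vector stands in for the GTE embedding.
- The sizes and the single-hidden-layer MLP are invented.
- `ToyHyperNet`, `merge_lora` and `reconstruction_loss` are hypothetical names, not the T2L repo's code.
- The loss is sketched as mean-squared error on the product B @ A; the paper's exact loss may differ.

It runs forward passes only and does no training.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy sizes (assumptions; a real 7B model is vastly larger)
D_MODEL = 64      # width of every targeted weight matrix (toy: all square)
RANK = 4          # LoRA rank r
EMB_TASK = 32     # size of the task-description embedding
EMB_COND = 8      # size of each learned module / layer embedding
HIDDEN = 128      # hypernetwork hidden width
MODULES = ["q_proj", "v_proj"]
N_LAYERS = 3


class ToyHyperNet:
    """Hypothetical hypernetwork: (task emb, module emb, layer emb) -> (A, B)."""

    def __init__(self):
        # Learned conditioning embeddings (random here, trained in practice)
        self.module_emb = {m: rng.normal(size=EMB_COND) for m in MODULES}
        self.layer_emb = rng.normal(size=(N_LAYERS, EMB_COND))
        in_dim = EMB_TASK + 2 * EMB_COND
        self.w1 = rng.normal(scale=in_dim ** -0.5, size=(in_dim, HIDDEN))
        # "L"-style head: one linear layer emits both A and B, flattened;
        # the small scale keeps generated updates near zero
        self.w2 = rng.normal(scale=0.01, size=(HIDDEN, 2 * RANK * D_MODEL))

    def generate(self, task_emb, module, layer):
        x = np.concatenate([task_emb, self.module_emb[module], self.layer_emb[layer]])
        h = np.maximum(x @ self.w1, 0.0)  # one ReLU hidden layer
        out = h @ self.w2
        a = out[: RANK * D_MODEL].reshape(RANK, D_MODEL)  # A: r x k
        b = out[RANK * D_MODEL :].reshape(D_MODEL, RANK)  # B: d x r
        return a, b


def generate_adapter(net, task_emb):
    """Forward passes only: one (A, B) pair per targeted module and layer."""
    return {(m, layer): net.generate(task_emb, m, layer)
            for m in MODULES for layer in range(N_LAYERS)}


def merge_lora(w0, a, b, alpha=8.0):
    """Merge a LoRA update into a frozen weight: W' = W0 + (alpha / r) * B @ A."""
    return w0 + (alpha / RANK) * (b @ a)


def reconstruction_loss(adapter, target_adapter):
    """Reconstruction objective, sketched as MSE between the two delta-W = B @ A."""
    errs = []
    for key, (a, b) in adapter.items():
        ta, tb = target_adapter[key]
        errs.append(np.mean((b @ a - tb @ ta) ** 2))
    return float(np.mean(errs))


# Usage: a random vector stands in for a GTE-style sentence embedding
task_emb = rng.normal(size=EMB_TASK)
net = ToyHyperNet()
adapter = generate_adapter(net, task_emb)
w0 = rng.normal(size=(D_MODEL, D_MODEL))
w_q0 = merge_lora(w0, *adapter[("q_proj", 0)])
print(len(adapter), w_q0.shape)
```

Under the SFT regime, the objective would instead be the base model's next-token loss on task data, back-propagated through the merged weights into the hypernetwork. That requires an autodiff framework, so it is left out of this sketch.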

Results at a Glance

| Setting | Avg. score (10 benchmarks) | Compute overhead |
|---|---|---|
| Base Mistral‑7B | 55.8 | – |
| + 3‑shot ICL | 61.0 | 4.2 TFLOPs |
| + Multi‑task LoRA | 66.3 | 0.83 TFLOPs |
| + T2L‑M (zero‑shot) | 67.5 | 0.86 TFLOPs |

The numbers back up the elegance. T2L not only keeps pace with a strong multi-task LoRA baseline but edges past it (67.5 vs. 66.3), matching several task-specific adapters while completely eliminating the token overhead of in-context learning.
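The efficiency and accuracy margins can be checked directly against the table. The snippet below uses only the numbers shown there. Reading the T2L vs. multi-task LoRA compute difference as the hypernetwork's own cost is an assumption, not something the table states.

```python
# Numbers copied from the results table above (average over 10 benchmarks)
results = {
    "base": (55.8, None),
    "icl_3shot": (61.0, 4.2),
    "multitask_lora": (66.3, 0.83),
    "t2l_m": (67.5, 0.86),
}

t2l_score, t2l_tflops = results["t2l_m"]
icl_score, icl_tflops = results["icl_3shot"]
mt_score, mt_tflops = results["multitask_lora"]
base_score, _ = results["base"]

flop_ratio = icl_tflops / t2l_tflops      # how many times cheaper than 3-shot ICL
gain_over_base = t2l_score - base_score   # absolute points gained, zero-shot
margin_over_mt = t2l_score - mt_score     # edge over the multi-task LoRA
extra_tflops = t2l_tflops - mt_tflops     # presumably the hypernetwork's own cost

print(f"{flop_ratio:.1f}x fewer TFLOPs than 3-shot ICL")
print(f"+{gain_over_base:.1f} points over base, +{margin_over_mt:.1f} over multi-task LoRA")
print(f"extra compute vs. multi-task LoRA: about {extra_tflops:.2f} TFLOPs")
```

4.2 / 0.86 is roughly 4.9, consistent with the "over 4x" framing. The T2L and multi-task LoRA costs differ by only a few hundredths of a TFLOP.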

Hands‑On: Spinning Up T2L Locally

```shell
# 1. Clone and install
$ git clone https://github.com/SakanaAI/text-to-lora && cd text-to-lora
$ uv self update && uv venv --python 3.10 --seed && uv sync

# 2. Fetch model checkpoints (≈2 GB)
$ uv run huggingface-cli login
$ uv run huggingface-cli download SakanaAI/text-to-lora --local-dir . --include "trained_t2l/*"

# 3. Launch the web UI (needs 16 GB VRAM)
$ uv run python webui/app.py
```

For programmatic access, the core flow is minimal. The helper names in the sketch below (`load_t2l`, `generate_lora`, `apply_lora`) are illustrative; check the repo for its actual entry points:

```python
# Illustrative helper names, not a confirmed API of the text-to-lora repo
from t2l import load_t2l, generate_lora, apply_lora
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load the hypernetwork (just 34M parameters for the Medium size)
t2l = load_t2l("trained_t2l/t2l-M")

base_model = AutoModelForCausalLM.from_pretrained("mistralai/Mistral-7B-Instruct-v0.2")
tokenizer = AutoTokenizer.from_pretrained("mistralai/Mistral-7B-Instruct-v0.2")

# Describe the task in plain English
description = "Answer grade-school math word problems step by step."

# Generate and apply the LoRA adapter (A/B factors for each targeted layer).
# No gradients, no training loop.
lora_A, lora_B = generate_lora(t2l, description)
adapted_model = apply_lora(base_model, lora_A, lora_B)

# Run inference with the dynamically adapted model
prompt = "If Alice has twice as many apples as Bob and Bob has 5, how many..."
inputs = tokenizer(prompt, return_tensors="pt").to(adapted_model.device)
output_ids = adapted_model.generate(**inputs, max_new_tokens=256)

# generate() returns token IDs; decode them back into text
print(tokenizer.decode(output_ids[0], skip_special_tokens=True))
```

Strengths I Appreciate

- Instant Gratification, Almost: Generating an adapter is orders of magnitude cheaper than a fresh fine-tune (milliseconds versus minutes). The friction of specialization practically disappears.

- Radical Compression: A single hypernetwork plus a lightweight text embedding replaces what could be hundreds of individual, multi-megabyte LoRA files.

- Intent as an API: The ability to steer model behavior simply by rephrasing the description (the paper shows toggling between deterministic and chain-of-thought reasoning) is a powerful form of high-level control.

- Architectural Agnosticism: The same hyperparameters and training setup work across Mistral 7B, Llama 3 8B, and Gemma 2B, suggesting the approach is robust rather than tuned to one model’s quirks.
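To put "multi-megabyte" in perspective, here is a back-of-the-envelope estimate. The assumptions are mine, not the paper's:

- Rank-8 adapters on q_proj and v_proj only.
- Mistral-7B's shapes: 32 layers and hidden size 4096, with grouped-query attention giving v_proj an output width of 1024.
- fp16 storage.
- One adapter per SNI training task.
- The 34M-parameter figure this post quotes for T2L-M.

```python
# Back-of-the-envelope storage estimate (all shapes and ranks are assumptions)
N_LAYERS = 32          # Mistral-7B transformer blocks
HIDDEN = 4096          # model width
KV_WIDTH = 1024        # v_proj output width (8 KV heads x 128 dims)
RANK = 8               # assumed LoRA rank
BYTES_PER_PARAM = 2    # fp16 / bf16


def lora_params(d_in, d_out, rank):
    """Parameter count of one LoRA pair: A is rank x d_in, B is d_out x rank."""
    return rank * d_in + d_out * rank


per_layer = lora_params(HIDDEN, HIDDEN, RANK) + lora_params(HIDDEN, KV_WIDTH, RANK)
adapter_params = N_LAYERS * per_layer
adapter_mb = adapter_params * BYTES_PER_PARAM / 1e6

n_tasks = 479                                 # one adapter per SNI training task
library_gb = n_tasks * adapter_mb / 1e3
hypernet_mb = 34e6 * BYTES_PER_PARAM / 1e6    # T2L-M size as quoted in this post

print(f"one adapter: {adapter_params:,} params, {adapter_mb:.1f} MB")
print(f"{n_tasks} adapters: {library_gb:.2f} GB vs. hypernetwork: {hypernet_mb:.0f} MB")
```

Under these assumptions, a single adapter comes to about 6.8 MB and the full library to roughly 3.3 GB, against about 68 MB for the hypernetwork. This comparison leaves out the sentence-embedding model that T2L also needs at generation time.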

Limitations & Open Questions

- The Oracle Problem: The quality of the generated adapter is acutely sensitive to the input description. Vague or misaligned prompts cause performance to plummet. Who becomes the master author of these magical incantations?

- Out-of-Distribution Frontiers: How does performance hold up on tasks fundamentally different from the Super-Natural-Instructions corpus, like long-form creative writing or complex agentic workflows? The map here is still largely blank.

- The Adversarial Prompt: If language can generate weights, it can also become an attack vector. What happens when a malicious prompt generates a hostile adapter designed to leak data or produce harmful output? Security models for this paradigm need to be built.

- Beyond LoRA: The core idea feels bigger than LoRA. Could hypernetworks learn to generate other PEFT formats like IA³ or BitFit? Could they modulate activations directly, bypassing weight modification entirely?

My Take

Text-to-LoRA feels like a fundamental upgrade to how we interact with LLMs. For years, prompt engineering has been the dominant method of specialization: a high-bandwidth but ultimately clunky approach in which we stuff examples and instructions into a model’s finite context window and hope it gets the message.

T2L proposes something far more elegant. Instead of shouting instructions at the model, we whisper a command to a compiler that performs surgical neuromodulation, patching the network’s weights for the specific task at hand. It’s fast, clean, and sidesteps an entire layer of deployment complexity.

The path from here to robust production systems will require richer description-authoring workflows and thoughtful security guardrails. But the core insight, that natural language itself can be the source code for targeted model adaptation, opens thrilling new avenues for creating truly dynamic and responsive AI.

If you’re tinkering with LoRAs today, this is not one to ignore. Grab the repo and see just how far a single sentence can take your model.

Further reading: LoRA (Hu et al., 2021), HyperNetworks (Ha et al., 2016), Sakana AI announcement.