Key Take‑aways

- Best‑of‑N (BoN) is a brutally simple, fully black‑box algorithm that reliably cracks state‑of‑the‑art language, vision, and audio models by sheer, brute‑force diversification of a user’s request.

- With 10,000 augmented samples, BoN achieves an 89% Attack Success Rate (ASR) on GPT‑4o and 78% on Claude 3.5 Sonnet. It also punches through open‑source defenses like Circuit Breakers.

- The attack’s effectiveness scales with compute: success follows a predictable power‑law in the number of samples, allowing attackers to directly trade cash for compromise.

- Multimodal by design: trivial perturbations-changing a font, a color, audio pitch, or tempo-transfer the core idea to images and audio, yielding a 56% ASR on GPT‑4o‑Vision and 72% on GPT‑4o Realtime Audio.

- Reliability per successful prompt is low (~20% on replay), and BoN does not discover universal “magic” prompts. But when composed with prefix attacks like Many‑Shot‑Jailbreaking, it becomes both cheaper (28x sample efficiency) and far more potent.

- A public GitHub repo makes experimentation trivial, underscoring the urgency for stronger, modality‑aware safeguards that anticipate this class of attack.

Introduction

In security engineering, there’s an old, uncomfortable truth: attackers pick the time and the terrain. Best‑of‑N (BoN) jailbreaking epitomizes that insight for the AI frontier. While model providers erect ever more sophisticated, multi-million-dollar policy layers and guardrails, John Hughes et al. demonstrate that a simple glass hammer-randomly perturb the input until the model cracks-is often all that’s needed. I spent the last week replicating the paper, spelunking through the authors’ project site and code, and running my own small‑scale red‑team. This is a distillation of what I learned.

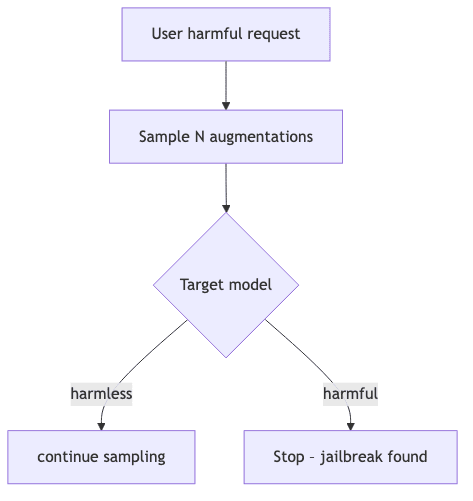

The Algorithm at a Glance

One Core Idea

BoN’s insight is variance injection: each augmentation nudges the prompt into a slightly different region of the model’s vast input manifold. Because alignment training only shrink‑wraps the most probable, high-traffic corridors of user intent, the outliers inevitably slip through the cracks. It’s a search algorithm that weaponizes randomness.

Augmentation Menus

| Modality | Example Perturbations |

|---|---|

| Text | Character shuffling, random capitals, nearby‑ASCII substitutions |

| Vision | Varying font, color, background blocks, position, image size |

| Audio | ±speed, ±pitch, background noise/speech/music, volume |

The implementation is little more than a handful of shell scripts (see experiments/1a_run_text_bon.sh), a testament to the attack’s raw simplicity.

Empirical Findings

1. Success is Cheap

With just 100 samples, BoN hits a 50% ASR on GPT‑4o for a total cost of around $9. This places it squarely within reach of any adversary with a hobby‑level budget. At 10,000 samples, GPT‑4o, Claude, and Gemini all cross the 50% threshold, proving this isn’t an anomaly but a systemic weakness.

2. Power‑Law Scaling

Plotting the failure rate (−log(ASR)) against the number of attempts (log(N)) reveals a near‑linear trend. The physics of the attack are predictable. Extrapolating from the first 1,000 attempts predicts 10,000‑step performance with surprising accuracy (±5%). This is gold for defenders: we can now forecast future risk and budget for security without burning endless API quota.

3. Multimodal Generality

The same algorithm jumps between modalities simply by swapping out the augmentation function. For images, the team rendered text in absurd fonts over psychedelic backgrounds. For audio, they re‑synthesized requests with random audio effects. No gradients, no log‑probs, no insider access. Just noise.

4. Composition Creates Potency

Prepending a known Many‑Shot Jailbreaking (MSJ) prefix before running BoN reduces the required samples from 6,000 to just 218 on Claude Sonnet-a 28× gain in efficiency. The takeaway is chilling: attackers can first scour GitHub for known attack prefixes, then drizzle BoN on top to finish the job.

Strengths & Weaknesses

| 💪 Strengths | 😬 Weaknesses |

|---|---|

| Black‑box: requires zero model internals. | Brittleness: a replayed augmentation only succeeds ~20% of the time. |

| Compute‑scalable: trivial to parallelize. | Detectable: high request volume & bizarre formatting are strong signals. |

| Cross‑modal: exploits systemic input-space blind spots. | Classifier dependence: authors used GPT‑4o to grade harmfulness; false negatives inflate ASR. |

How Does It Compare?

Most prior jailbreaks fall into two camps:

- Gradient/heuristic optimization (e.g., PAIR, AutoDAN): often white‑box or transfer‑based, requiring some model knowledge.

- Prompt engineering (e.g., DAN, MSJ): clever, but manually curated and often quickly patched.

BoN sits elsewhere. It is brute‑force yet modality‑agnostic, a distinct class of attack that relies on scale over sophistication. It complements optimization‑based methods rather than replacing them; as the composition results prove, they are synergistically dangerous.

Defensive Takeaways

- Stochasticity is not safety. A decoder’s randomness can be a vulnerability, leaking harmful completions even when the mode of the distribution is a polite refusal.

- Verifier ensembles must watch for families of similar inputs, not just discrete, independent calls.

- Rate‑limit by semantic distance. If a user issues 10,000 near‑duplicate queries in 30 seconds, the system should throttle them, regardless of superficial formatting changes.

- Augment all the things. Adversarial training must include BoN‑style noise during fine‑tuning across every modality.

Personal Reflections

As an engineer, I can’t help but admire the raw elegance: three lines of augmentation code and a while loop are enough to dismantle safety systems that cost millions to develop. As a practitioner, I worry. The attack is already open‑sourced, complete with Bash scripts and notebooks, effectively democratizing a powerful exploit.

The broader lesson here is that alignment must dominate the entire local neighborhood of a request, not just its centroid. The surface area for attack is immense. We’ve built cathedrals of intelligence on foundations that are vulnerable to something as simple as a typo. Data augmentation and robust verification, long staples in computer vision security, are now non‑negotiable table stakes for language and multimodal AI.

Where to Next?

- Adaptive Augmentation Search: Why sample randomly? Swapping brute‑force sampling for evolutionary strategies or CMA‑ES could cut costs and discover adversarial pockets more efficiently.

- Verifier Robustness: How do imperfect, biased, or even adversarial classifiers skew our estimates of ASR? We need to study the failure modes of our measurement tools.

- Real‑Time Defense Benchmarks: We need to expose safety layers to continuous BoN pressure and publish live dashboards of their resilience. Security through obscurity is dead.

Code Snippet – Running Text BoN

# assumes `OPENAI_API_KEY` env var set

python run_text_bon.py \

--model gpt-4o \

--requests harmbench/standard.txt \

--n_samples 1000 \

--temperature 1 \

--out results/gpt4o_bon.jsonConclusion

Best‑of‑N Jailbreaking is a stark wake‑up call: simplicity plus scale beats complexity plus complacency. The paper is refreshingly transparent, the code is battle‑ready, and the implications ripple across every modality we care about. As we harden the models of tomorrow, BoN must become a standard part of the red‑teaming toolkit. Because if your billion‑dollar defense can’t handle a few random capital letters, it certainly can’t handle a determined adversary.